Course Conclusion

These notes were developed using lectures/material/transcripts from the DeepLearning.AI & AWS - Generative AI with Large Language Models course

Notes

Responsible AI by Dr. Nashlie Sephus

- Special Challenges of Responsible Generative AI

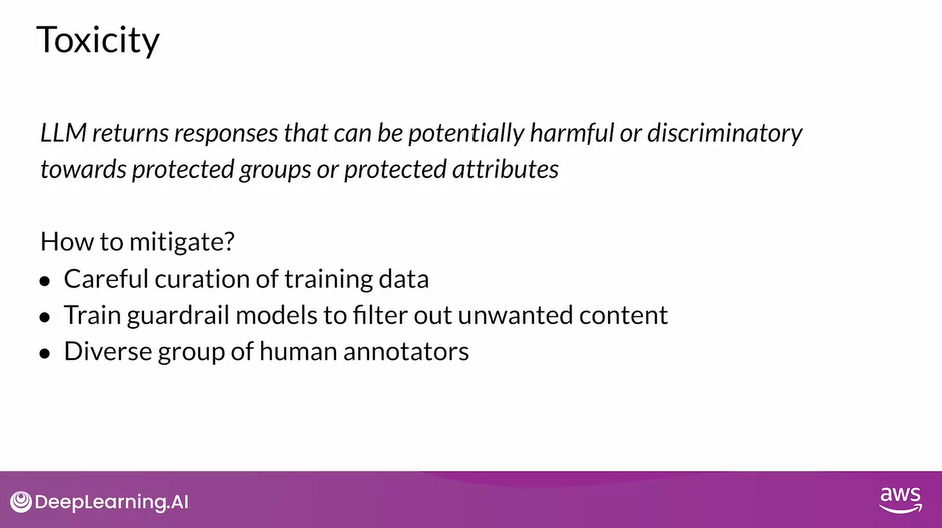

- Toxicity - generates responses that can be potentially harmful

Ways to overcome:

- Careful curation of training data

- Train guardrail models to filter out unwanted content

- Diverse group of human annotators

- Hallucinations - generates factually incorrect content

Ways to overcome:

- Educate users about how Generative AI works

- Add disclaimers

- Augment LLMs with independent, verified citation databases

- Define intended/unintended use cases

- Intellectual Property

Ways to overcome:

- Mix of technology, policy and legal mechanisms

- “Machine Unlearning”

- Filtering and blocking approaches

- Responsibly Build and Use Generative AI Models

- Define use cases: the more specific/narrow, the better

- Assess risks for each use case

- Evaluate performance for each use case

- Iterate over entire AI lifecycle

- Exciting Research

- Watermarking and fingerprinting: ways to embed something like a stamp or signature in a piece of content or data so that it can always be traced back

- Creating models that help determine if content was created with Generative AI
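The "guardrail" idea from the toxicity notes above can be sketched as a thin wrapper that scores a model's response before returning it. This is only an illustrative sketch: `BLOCKED_TERMS`, `toy_toxicity_score`, and `guarded_generate` are hypothetical names, and the word-list scorer is a stand-in assumption for what would in practice be a trained guardrail model.

```python
from typing import Callable

# Hypothetical blocklist; a real guardrail would be a trained classifier,
# not a fixed word list.
BLOCKED_TERMS = {"badword", "slur"}


def toy_toxicity_score(text: str) -> float:
    """Return the fraction of words found in the blocklist (toy stand-in)."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in BLOCKED_TERMS for w in words) / len(words)


def guarded_generate(
    generate: Callable[[str], str],
    prompt: str,
    threshold: float = 0.0,
    refusal: str = "[response withheld by guardrail]",
) -> str:
    """Call the generator, then withhold the response if it scores above threshold."""
    response = generate(prompt)
    if toy_toxicity_score(response) > threshold:
        return refusal
    return response
```

Keeping the scorer separate from the generator means the filter can be swapped or retrained without touching the underlying model.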

Course Conclusion

- Ongoing research

- Techniques to align models with human values and preferences, increase model interpretability, and implement efficient model governance

- Need more scalable techniques for human oversight, such as constitutional AI

- Explore scaling laws for all steps of the project lifecycle, including techniques that better predict model performance so that you can make sure resources are used efficiently

- Working on model optimizations for small device and edge deployments

- llama.cpp is a C++ implementation of the LLaMA model that uses 4-bit integer quantization to run on a laptop

- Develop more efficient techniques for pre-training, finetuning, and reinforcement learning

- Developing models that support longer prompts and contexts

- Supporting a 100k-token context window

- Support multi-modality across language, images, video, audio, etc.

- Learning more about LLM reasoning and exploring LLMs that combine structured knowledge and symbolic methods
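To make the 4-bit integer quantization mentioned above more concrete, here is a minimal sketch of symmetric quantization into the signed 4-bit range [-8, 7]. It is an illustration under simplifying assumptions (one scale for the whole list, pure Python) and is not claimed to match the formats llama.cpp actually uses; `quantize_4bit` and `dequantize_4bit` are hypothetical helper names.

```python
from typing import List, Tuple


def quantize_4bit(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-8, 7] using a single shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Assumption: the largest magnitude maps to 7; fall back to 1.0 for all-zero input.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quantized = [max(-8, min(7, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_4bit(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the 4-bit integers."""
    return [q * scale for q in quantized]


weights = [0.7, -0.3, 0.12, 0.0]
q, scale = quantize_4bit(weights)
restored = dequantize_4bit(q, scale)
# Each restored value differs from the original by at most about scale / 2.
```

Each weight then needs only 4 bits plus a shared scale, which is the kind of memory saving that makes laptop-scale inference plausible, at the cost of a small rounding error per weight.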

Course Conclusion

- Along with responsible AI, researchers are looking into techniques to align models with human values and preferences, increase model interpretability, and implement efficient model governance

- As model capabilities increase, we’ll also need more scalable techniques for human oversight, such as constitutional AI, which was discussed in a previous lesson.

- Researchers continue to explore scaling laws for all steps of the project lifecycle, including techniques that better predict model performance so that you can make sure resources are used efficiently, for example, through simulations

- Scale doesn’t always mean bigger: research teams are also working on model optimizations for small-device and edge deployments. For example, llama.cpp is a C++ implementation of the LLaMA model that uses 4-bit integer quantization to run on a laptop

- Similarly, I’m confident that we’ll see advancements and efficiencies across the whole model development lifecycle

- In particular, expect more efficient techniques for pre-training, finetuning, and reinforcement learning, along with increased and emergent LLM capabilities

- For example, researchers are looking into developing models that support longer prompts and contexts, for instance to summarize entire books. In fact, during the development of this course, we’ve seen the first announcement of a model supporting a 100,000-token context window. This corresponds roughly to 75,000 words and hundreds of pages

- Models will also increasingly support multi-modality across language, images, video, audio, etc. This will unlock new applications and use cases and change how we interact with models. We’ve seen the first amazing results of this with the latest generation of text-to-image models, where natural language becomes the user interface to create visual content.

- Researchers are also trying to learn more about LLM reasoning and are exploring LLMs that combine structured knowledge and symbolic methods

- This research field of neurosymbolic AI explores a model’s ability to learn from experience and to reason from what it has learned
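The context-window figures above (100,000 tokens, roughly 75,000 words, hundreds of pages) can be checked with a quick back-of-the-envelope calculation. The 0.75 words-per-token ratio is simply what the quoted numbers imply; the words-per-page values are assumptions added for illustration, and the function names are hypothetical.

```python
WORDS_PER_TOKEN = 0.75  # implied by the notes: 100,000 tokens ~ 75,000 words


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate the word count for a given number of tokens."""
    return round(tokens * words_per_token)


def words_to_pages(words: int, words_per_page: int) -> float:
    """Estimate the page count for an assumed page density."""
    return words / words_per_page


words = tokens_to_words(100_000)
# Assumed page densities (not from the course): 300 and 500 words per page.
for words_per_page in (300, 500):
    pages = words_to_pages(words, words_per_page)
    print(f"{words} words at {words_per_page} words/page is about {pages:.0f} pages")
```

Under these assumed densities the estimate lands between 150 and 250 pages, which is consistent with the "hundreds of pages" description.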

Asking AI What the Future Holds