Week 2 Part 1 - Fine-tuning LLMs with Instruction

These notes were developed using lectures/material/transcripts from the DeepLearning.AI & AWS - Generative AI with Large Language Models course

Notes

Instruction Fine-Tuning

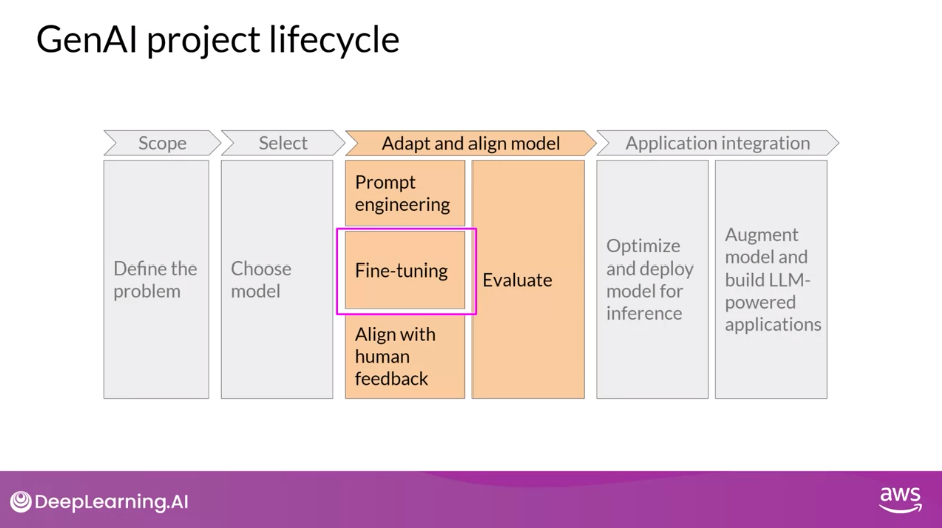

- Stage 2 of Generative AI Project Lifecycle - Adapt and Align Model

- Prompt Engineering

- Fine-tuning

- Align with Human Feedback

- Evaluate

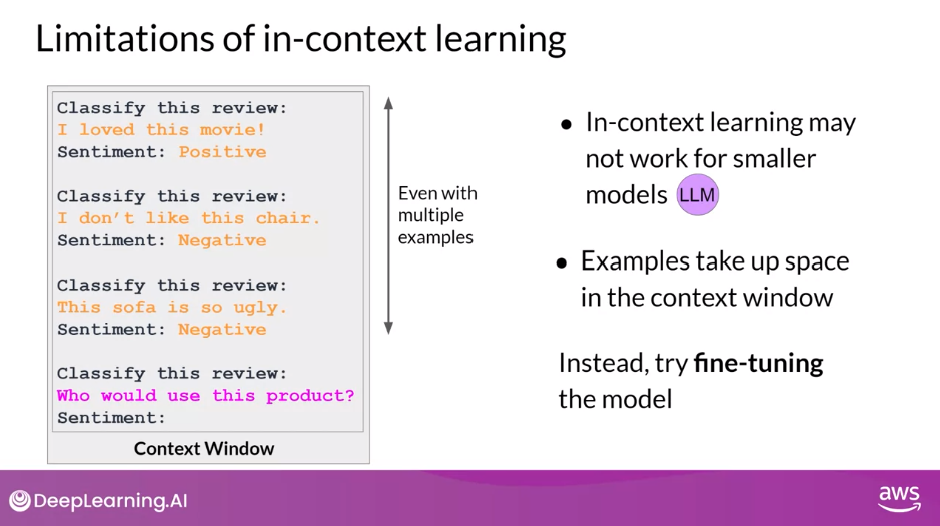

- Limitation of In-Context Learning (ICL)

- Does not work for smaller models

- Examples take up valuable space in the context window

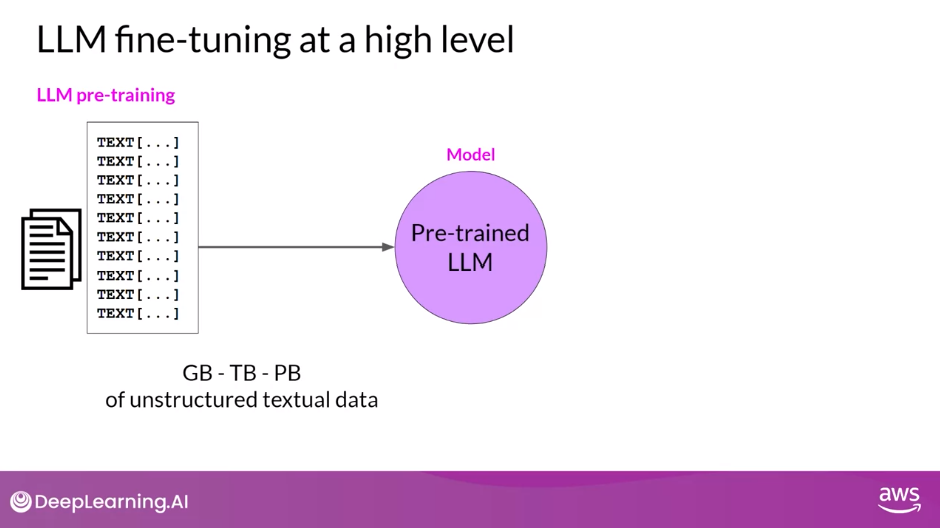

- Pre-training Recap

- Train LLM using vast amount (GB, TB, PB) on unstructured textual data via self-supervised learning

- Fine-tuning - supervised learning process where you use a dataset (GB, TB) of labeled examples (prompt completion pairs)

- Full Fine-tuning - updates all of the model’s weights

- Requires enough memory and compute budget to store and process all the gradients, optimizers and other components that are being updated during training

- Full Fine-tuning - updates all of the model’s weights

- Instruction Fine-tuning - trains the model using examples that demonstrate how it should respond to a specific instruction

- Data Preparation - prepare instruction dataset

- Prompt template libraries

- Classification

- Text Generation

- Text Summarization

- Prompt template libraries

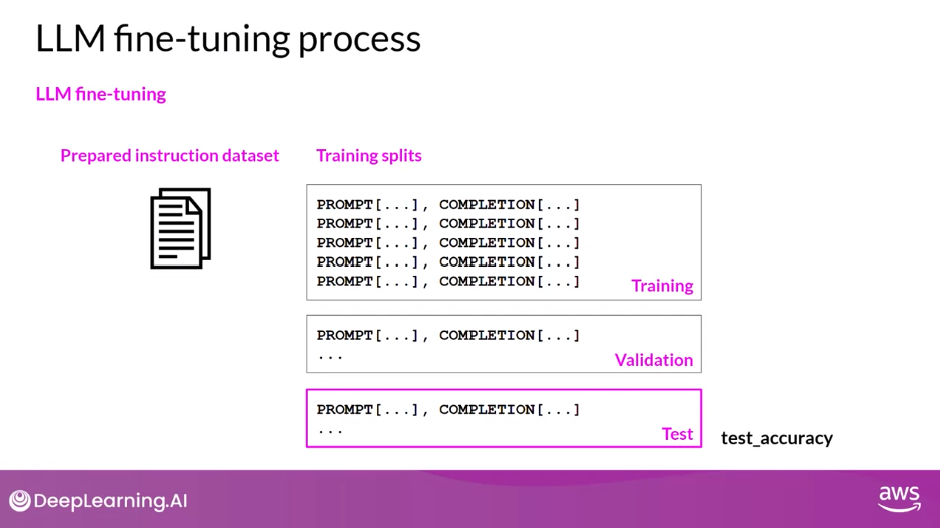

- LLM Fine-tuning Process

- Divide into training, validation and test splits

- Validation accuracy - Measure LLM performance using the validation dataset

- Test accuracy - Final performance evaluation using the holdout test dataset

- Select prompts from your training data set and pass them to the LLM, which then generates completions

- Compare the distribution of the completion and that of the training label and use the standard Cross-Entropy function to calculate loss between the two token distributions

- Use the calculated loss to update the model weights using Backpropagation

- This is repeated for many batches of prompt completion pairs and over several epochs, update the weights so that the model’s performance on the task improves

- Results in a new version of the base model called an instruct model

- Divide into training, validation and test splits

- Data Preparation - prepare instruction dataset

- Stage 2 of Generative AI Project Lifecycle - Adapt and Align Model

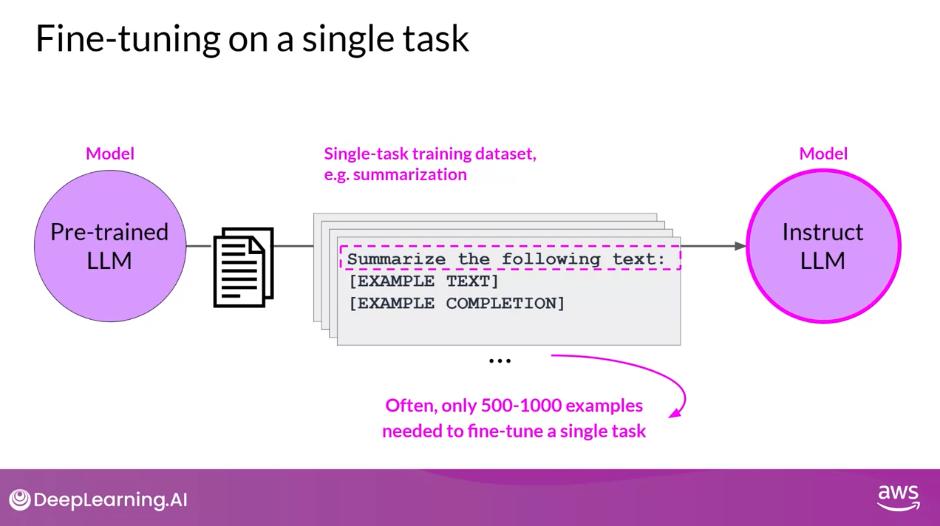

Fine-tuning on a Single Task

- Training on 5-1k labeled examples can result in good performance

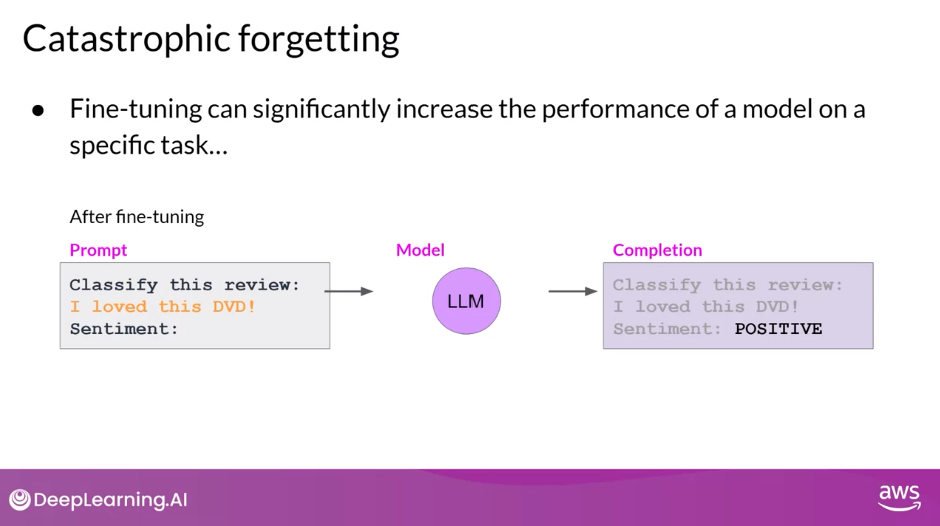

- Downside: the process may lead to Catastrophic Forgetting

- How to Avoid Catastrophic Forgetting?

- Fine-tune on multiple tasks at the same time

- Consider Parameter Efficient Fine-tuning (PEFT)

Multi-task Instruction Fine-tuning

- An extension of single task fine-tuning, where the training dataset is comprised of example inputs and outputs for multiple tasks: Summarization, Review Rating, Code Translation, Entity Recognition

- Avoids the issue of Catastrophic Forgetting

- Drawback: may need 50-100k examples in your training set

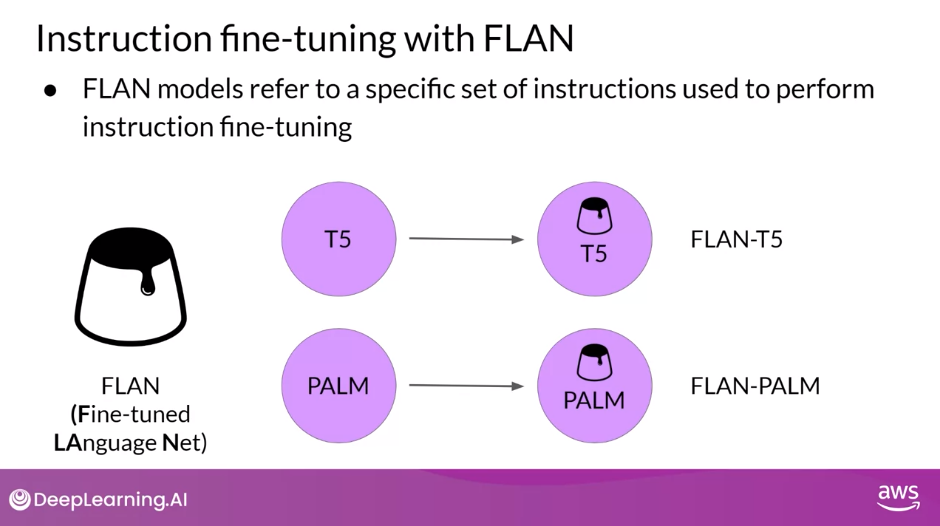

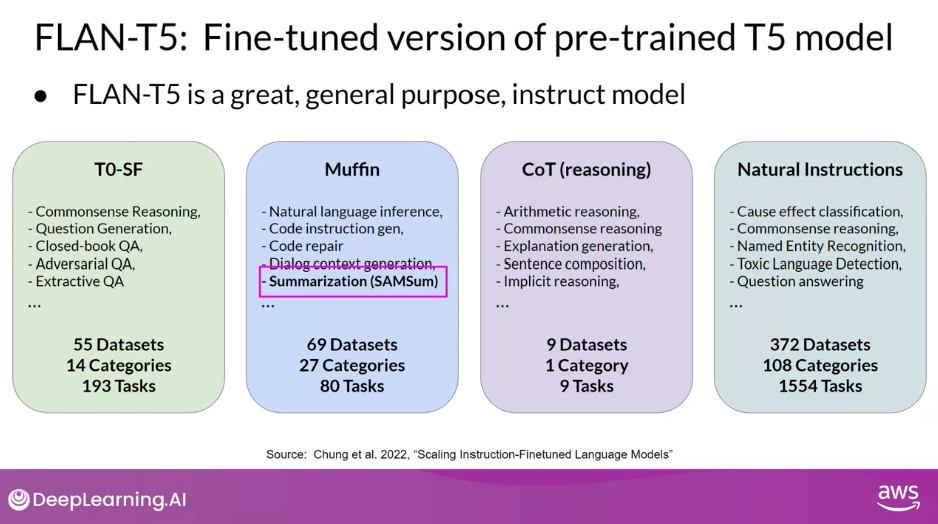

- FLAN (Fine-tuned LAnguage Net) is a specific set of instructions used to fine-tune different models (last step of the training process)

- FLAN-T5 - Fine-tuned version of pre-trained T5 model

- FLAN-PALM - Fine-tuned version of pre-trained PALM model

- Improving Summarization Capabilities

Reading: Scaling Instruct Models

- Introduces FLAN (Fine-tuned LAnguage Net), an instruction finetuning method, and presents the results of its application

- The study demonstrates that by fine-tuning the 540B PaLM model on 1836 tasks while incorporating Chain-of-Thought Reasoning data, FLAN achieves improvements in generalization, human usability, and zero-shot reasoning over the base model

Model Evaluation

- With LLMs, the output is non-deterministic therefore much more challenging to evaluate

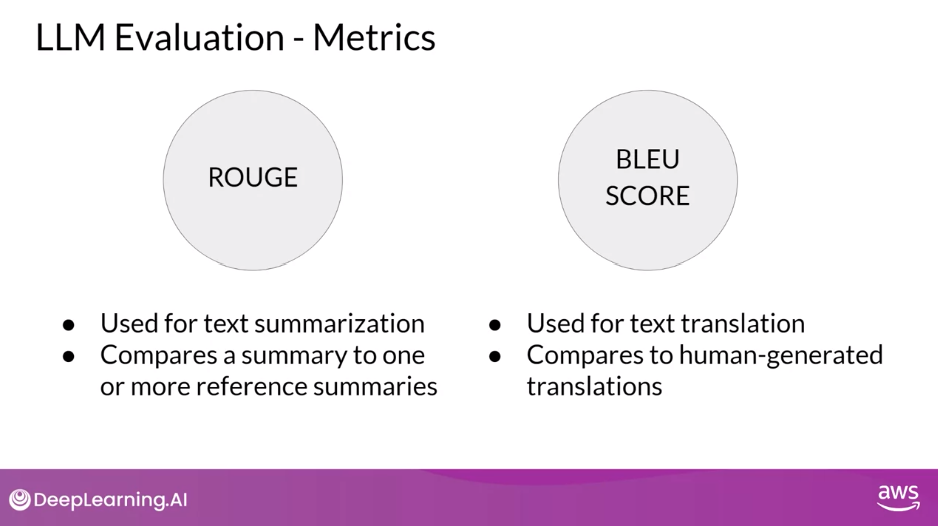

- ROUGE (The Recall-Oriented Understudy for Gisting Evaluation) - use for diagnostic evaluation of summarization tasks

- Compares a summary to one or more reference summaries

- BLEU (Bilingual Evaluation Understudy) - use for diagnostic evaluation of translation tasks

- Compares to human-generated translations

Benchmarks - evaluating its performance on data that it hasn’t seen before

- GLUE - General Language Understanding Evaluation

- SuperGLUE

Benchmarks for Massive Models

- MMLU - Massive Multi-task Language Understanding

- BIG-bench - Beyond the Imitation Game Benchmark

- HELM - Holistic Evaluation of Language Models

- Also include metrics for:

- Accuracy

- Calibration

- Robustness

- Fairness

- Bias

- Toxicity

- Efficiency

- Also include metrics for:

Introduction - Week 2

- Take a look at instruction fine-tuning, so when you have your base model, the thing that’s initially pretrained, it’s encoded a lot of really good information, usually about the world. So it knows about things, but it doesn’t necessarily know how to be able to respond to our prompts, our questions. So when we instruct it to do a certain task, it doesn’t necessarily know how to respond. And so instruction fine-tuning helps it to be able to change its behavior to be more helpful for us

- Because by learning off general text off the Internet and other sources, you learn to predict the next word. By predicting what’s the next word on the Internet is not the same as following instructions. I thought it’s amazing you can take a large language model, train it on hundreds of billions of words off the Internet. And then fine-tune it with a much smaller data set on following instructions and just learn to do that

- have to watch out for, of course, is catastrophic forgetting and this is something that we talk about in the course. So that’s where you train the model on some extra data in this insane instruct fine-tuning. And then it forgets all of that stuff that it had before, or a big chunk of that data that it had before. And so there are some techniques that we’ll talk about in the course to help combat that. Such as doing instruct fine-tuning across a really broad range of different instruction types. So it’s not just a case of just tuning it on just the thing you want it to do. You might have to be a little bit broader than that as well, but we talk about it in the course

- One of the problems with fine-tuning is you take a giant model and you fine-tune every single parameter in that model. You have this big thing to store around and deploy, and it’s actually very compute and memory expansive

- talk about parameter efficient fine-tuning or PEFT for short, as a set of methods that can allow you to mitigate some of those concerns, right? So we have a lot of customers that do want to be able to tune for very specific tasks, very specific domains. And parameter efficient fine-tuning is a great way to still achieve similar performance results on a lot of tasks that you can with full fine-tuning. But then actually take advantage of techniques that allow you to freeze those original model weights. Or add adaptive layers on top of that with a much smaller memory footprint, So that you can train for multiple tasks

- one of the techniques that I know you’ve used a lot is LoRA

- see a lot of excitement demand around LoRA because of the performance results of using those low rank matrices as opposed to full fine-tuning, right? So you’re able to get really good performance results with minimal compute and memory requirements

- many developers will often start off with prompting, and sometimes that gives you good enough performance and that’s great. And sometimes prompting hits a ceiling in performance and then this type of fine-tuning with LoRA or other PEFT technique is really critical for unlocking that extra level performance. And then the other thing I’m seeing among a lot of OM developers is a discussion debate about the cost of using a giant model, which is a lot of benefits versus for your application fine-tuning a smaller model

- full fine tuning can be cost prohibitive, right? To say the least so the ability to actually be able to use techniques like PEFT to put fine-tuning generative AI models kind of in the hands of everyday users. That do have those cost constraints and they’re cost conscious, which is pretty much everyone in the real world

Instruction Fine-Tuning

Stage 2 of Generative AI Project Lifecycle - Adapt and Align Model

- You’ll learn about methods that you can use to improve the performance of an existing model for your specific use case.

- You’ll also learn about important metrics that can be used to evaluate the performance of your finetuned LLM and quantify its improvement over the base model you started with

Limitations of In-Context Learning

- Limitations of in-context learning

- First, for smaller models, it doesn’t always work, even when five or six examples are included.

- Second, any examples you include in your prompt take up valuable space in the context window, reducing the amount of room you have to include other useful information.

- Luckily, another solution exists, you can take advantage of a process known as fine-tuning to further train a base model

Pre-training Recap

- You train the LLM using vast amounts of unstructured textual data via self- supervised learning

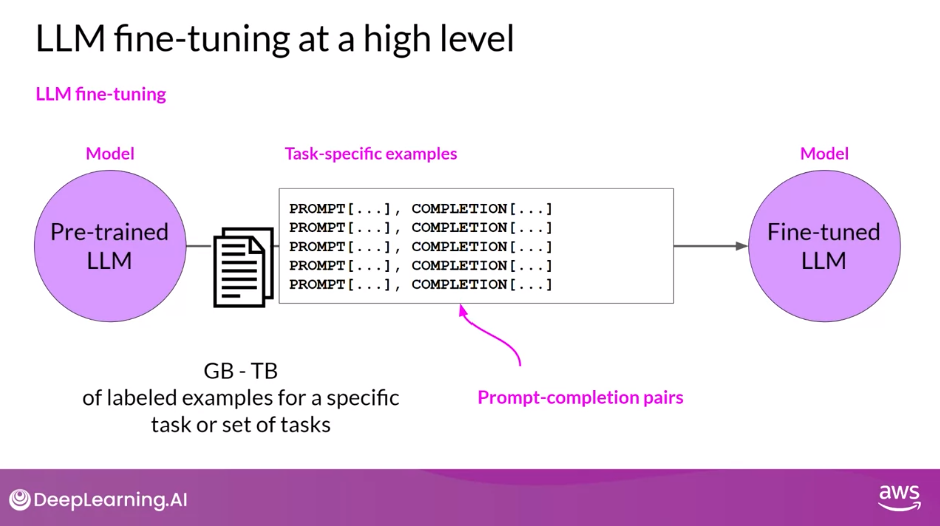

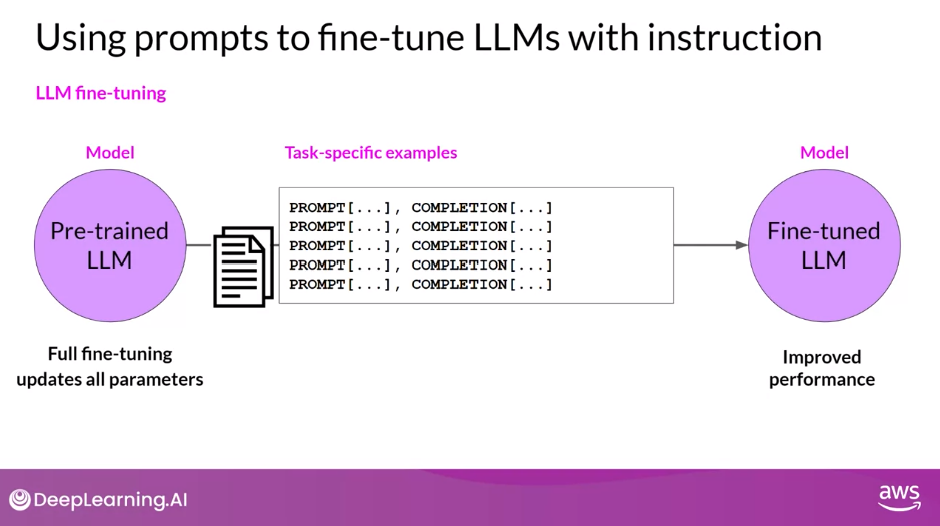

Fine-tuning at a High Level

- Fine-tuning is a supervised learning process where you use a data set of labeled examples to update the weights of the LLM.

- The labeled examples are prompt completion pairs, the fine-tuning process extends the training of the model to improve its ability to generate good completions for a specific task

- One strategy, known as instruction fine-tuning, is particularly good at improving a model’s performance on a variety of tasks

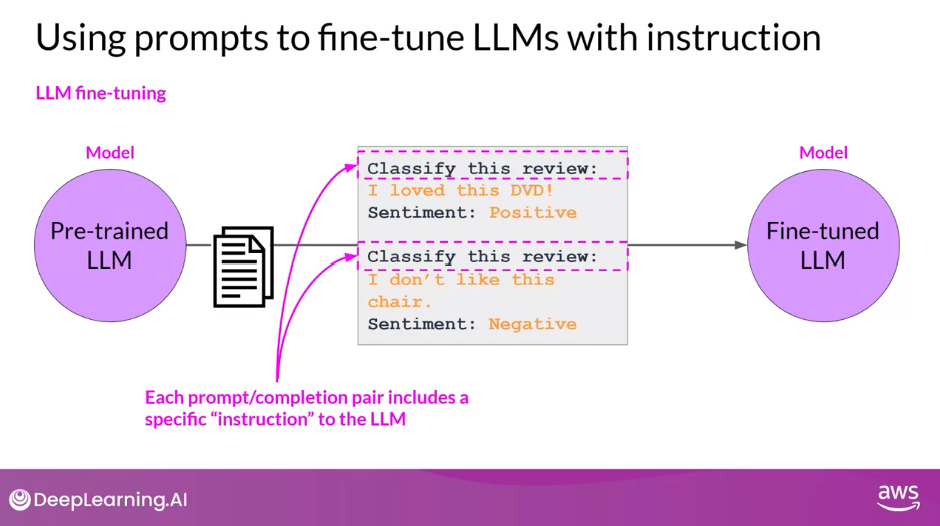

Instruction Fine-tuning

- Instruction fine-tuning trains the model using examples that demonstrate how it should respond to a specific instruction

- Here are a couple of example prompts to demonstrate this idea.

- The instruction in both examples is classify this review, and the desired completion is a text string that starts with sentiment followed by either positive or negative.

- The dataset you use for training includes many pairs of prompt completion examples for the task you’re interested in, each of which includes an instruction.

- For example, if you want to fine-tune your model to improve its summarization ability, you’d build up a data set of examples that begin with the instruction “summarize the following text” or a similar phrase.

- And if you are improving the model’s translation skills, your examples would include instructions like “translate this sentence”.

- These prompt completion examples allow the model to learn to generate responses that follow the given instructions

- Instruction fine-tuning, where all of the model’s weights are updated is known as Full Fine-tuning.

- The process results in a new version of the model with updated weights.

- It is important to note that just like pre-training, full fine-tuning requires enough memory and compute budget to store and process all the gradients, optimizers and other components that are being updated during training.

- So you can benefit from the memory optimization and parallel computing strategies that you learned about last week

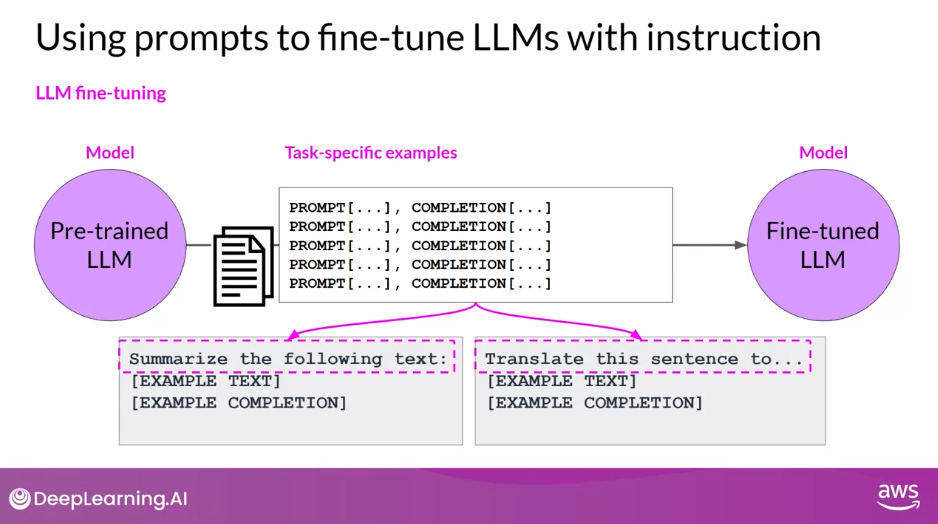

How do you actually go about Instruction Fine-tuning an LLM?

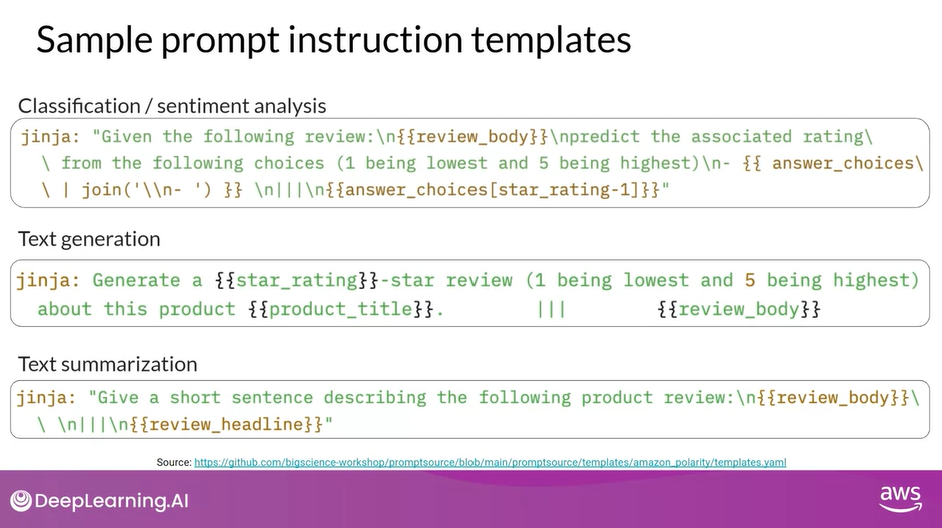

Dataset Preparation: Instruction Fine-tuning

There are many publicly available datasets that have been used to train earlier generations of language models, although most of them are not formatted as instructions.

Luckily, developers have assembled prompt template libraries that can be used to take existing datasets, for example, the large data set of Amazon product reviews and turn them into instruction prompt datasets for fine-tuning.

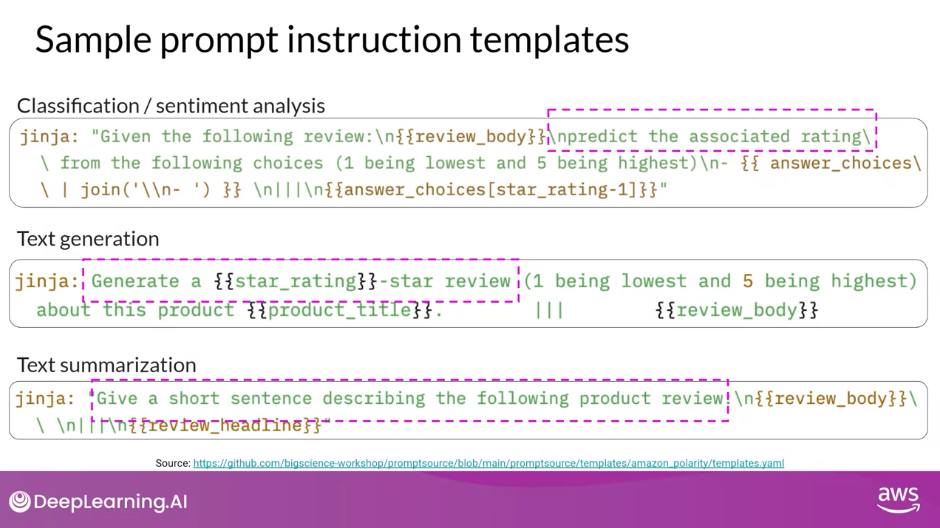

Here are three prompts that are designed to work with the Amazon reviews dataset and that can be used to fine tune models for

- classification,

- text generation and

- text summarization tasks

You can see that in each case you pass the original review, here called review_body, to the template, where it gets inserted into the text that starts with an instruction like predict the associated rating, generate a star review, or give a short sentence describing the following product review.

The result is a prompt that now contains both an instruction and the example from the data set

LLM Fine-tuning Process

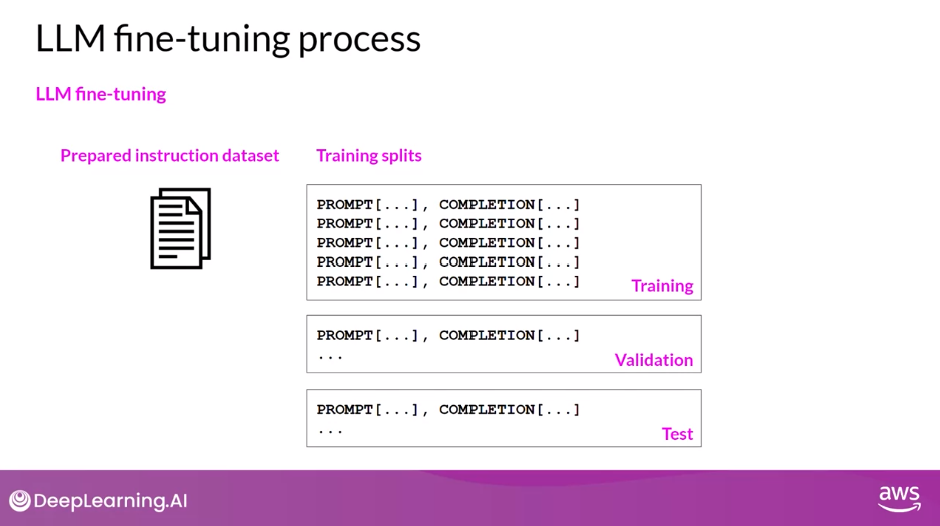

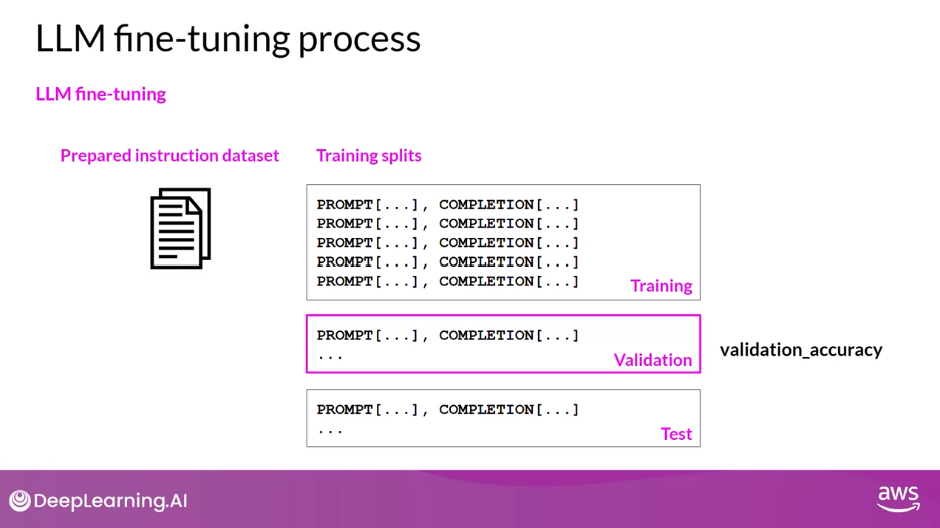

- Once you have your instruction data set ready, as with standard supervised learning, you divide the data set into

- training,

- validation and

- test splits

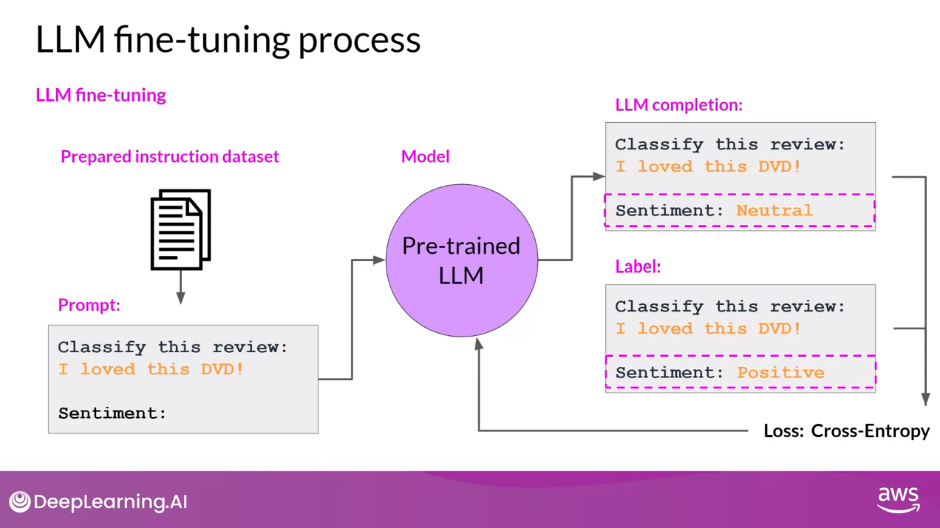

- During fine-tuning, you select prompts from your training data set and pass them to the LLM, which then generates completions.

- Next, you compare the LLM completion with the response specified in the training data.

- You can see here that the model didn’t do a great job, it classified the review as neutral, which is a bit of an understatement. The review is clearly very positive.

- Remember that the output of an LLM is a probability distribution across tokens. So you can compare the distribution of the completion and that of the training label and use the standard Cross-Entropy function to calculate loss between the two token distributions.

- And then use the calculated loss to update your model weights in standard Backpropagation.

- You’ll do this for many batches of prompt completion pairs and over several epochs, update the weights so that the model’s performance on the task improves

- As in standard supervised learning, you can define separate evaluation steps to measure your LLM performance using the holdout validation data set. This will give you the validation accuracy

- After you’ve completed your fine-tuning, you can perform a final performance evaluation using the holdout test data set. This will give you the test accuracy.

- The fine-tuning process results in a new version of the base model, often called an instruct model that is better at the tasks you are interested in

- Fine-tuning with instruction prompts is the most common way to fine-tune LLMs these days.

- From this point on, when you hear or see the term fine-tuning, you can assume that it always means Instruction Fine-tuning

Fine-Tuning on a Single Task

- LLMs have become famous for their ability to perform many different language tasks within a single model, your application may only need to perform a single task

- You can fine-tune a pre-trained model to improve performance on only the task that is of interest to you

- For example, summarization using a dataset of examples for that task. Interestingly, good results can be achieved with relatively few examples.

- Often just 500-1,000 examples can result in good performance in contrast to the billions of pieces of texts that the model saw during pre-training.

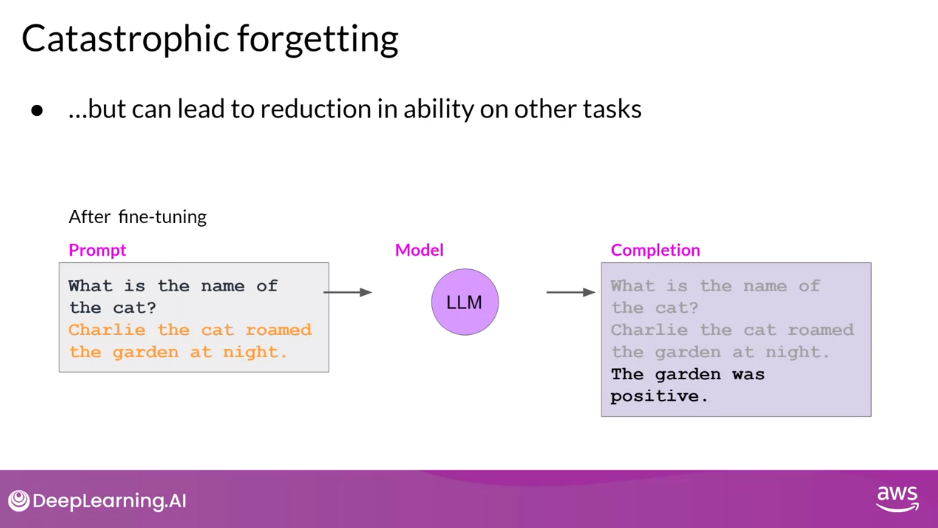

- However, there is a potential downside to fine-tuning on a single task. The process may lead to a phenomenon called Catastrophic Forgetting.

Catastrophic Forgetting

- Catastrophic forgetting happens because the full fine-tuning process modifies the weights of the original LLM.

- While this leads to great performance on the single fine-tuning task, it can degrade performance on other tasks.

- For example, while fine-tuning can improve the ability of a model to perform sentiment analysis on a review and result in a quality completion, the model may forget how to do other tasks.

- This model knew how to carry out named entity recognition before fine-tuning correctly identifying Charlie as the name of the cat in the sentence.

- But after fine-tuning, the model can no longer carry out this task, confusing both the entity it is supposed to identify and exhibiting behavior related to the new task

How to Avoid Catastrophic Forgetting

- First of all, it’s important to decide whether catastrophic forgetting actually impacts your use case. If all you need is reliable performance on the single task you fine-tuned on, it may not be an issue that the model can’t generalize to other tasks. If you do want or need the model to maintain its multitask generalized capabilities, you can perform fine-tuning on multiple tasks at one time. Good multitask fine-tuning may require 50-100,000 examples across many tasks, and so will require more data and compute to train. Will discuss this option in more detail shortly.

- Our second option is to perform parameter efficient fine-tuning, or PEFT for short instead of full fine-tuning. PEFT is a set of techniques that preserves the weights of the original LLM and trains only a small number of task-specific adapter layers and parameters. PEFT shows greater robustness to catastrophic forgetting since most of the pre-trained weights are left unchanged. PEFT is an exciting and active area of research that we will cover later this week

Question:

Which of the following are true in respect to Catastrophic Forgetting? Select all that apply.

Catastrophic forgetting occurs when a machine learning model forgets previously learned information as it learns new information.

Correct

The assertion is true, and this process is especially problematic in sequential learning scenarios where the model is trained on multiple tasks over time.

One way to mitigate catastrophic forgetting is by using regularization techniques to limit the amount of change that can be made to the weights of the model during training.

Correct

One way to mitigate catastrophic forgetting is by using regularization techniques to limit the amount of change that can be made to the weights of the model during training. This can help to preserve the information learned during earlier training phases and prevent overfitting to the new data.

Catastrophic forgetting is a common problem in machine learning, especially in deep learning models.

Correct

This assertion is true because these models typically have many parameters, which can lead to overfitting and make it more difficult to retain previously learned information.

Multi-Task Instruction Fine-Tuning

- Multitask fine-tuning is an extension of single task fine-tuning, where the training dataset is comprised of example inputs and outputs for multiple tasks.

- Here, the dataset contains examples that instruct the model to carry out a variety of tasks, including summarization, review rating, code translation, and entity recognition.

- You train the model on this mixed dataset so that it can improve the performance of the model on all the tasks simultaneously, thus avoiding the issue of catastrophic forgetting.

- Over many epochs of training, the calculated losses across examples are used to update the weights of the model, resulting in an instruction tuned model that is learned how to be good at many different tasks simultaneously.

- One drawback to multitask fine-tuning is that it requires a lot of data. You may need as many as 50-100,000 examples in your training set.

- However, it can be really worthwhile and worth the effort to assemble this data.

- The resulting models are often very capable and suitable for use in situations where good performance at many tasks is desirable

Let’s take a look at one family of models that have been trained using multitask instruction fine-tuning

Instruction Fine-tuning with FLAN

- Instruct model variance differ based on the datasets and tasks used during fine-tuning.

- One example is the FLAN family of models.

- FLAN (Fine-tuned LAnguage Net) is a specific set of instructions used to fine-tune different models.

- Because they’re FLAN fine-tuning is the last step of the training process the authors of the original paper called it “the metaphorical dessert to the main course of pre-training”.

- FLAN-T5, the FLAN instruct version of the T5 foundation model while FLAN-PALM is the FLAN instruct version of the palm foundation model

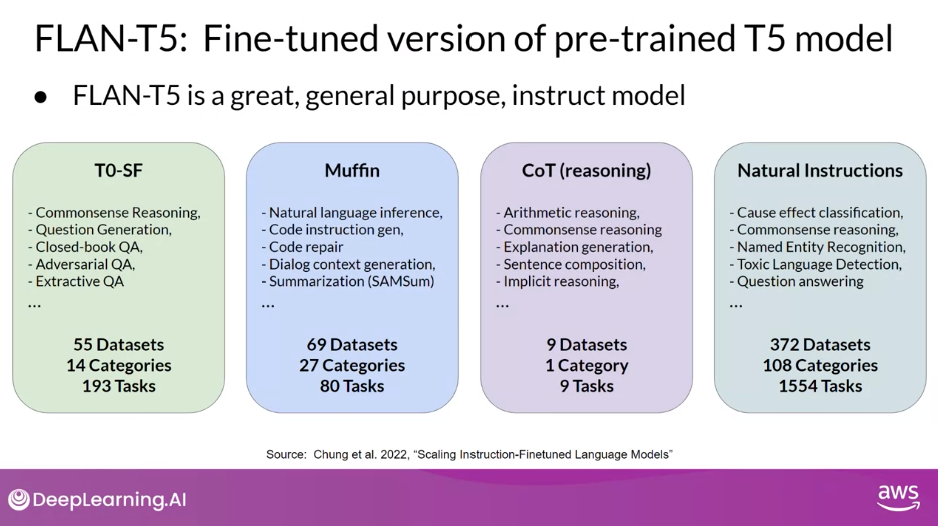

FLAN-T5

- FLAN-T5 is a great general purpose instruct model.

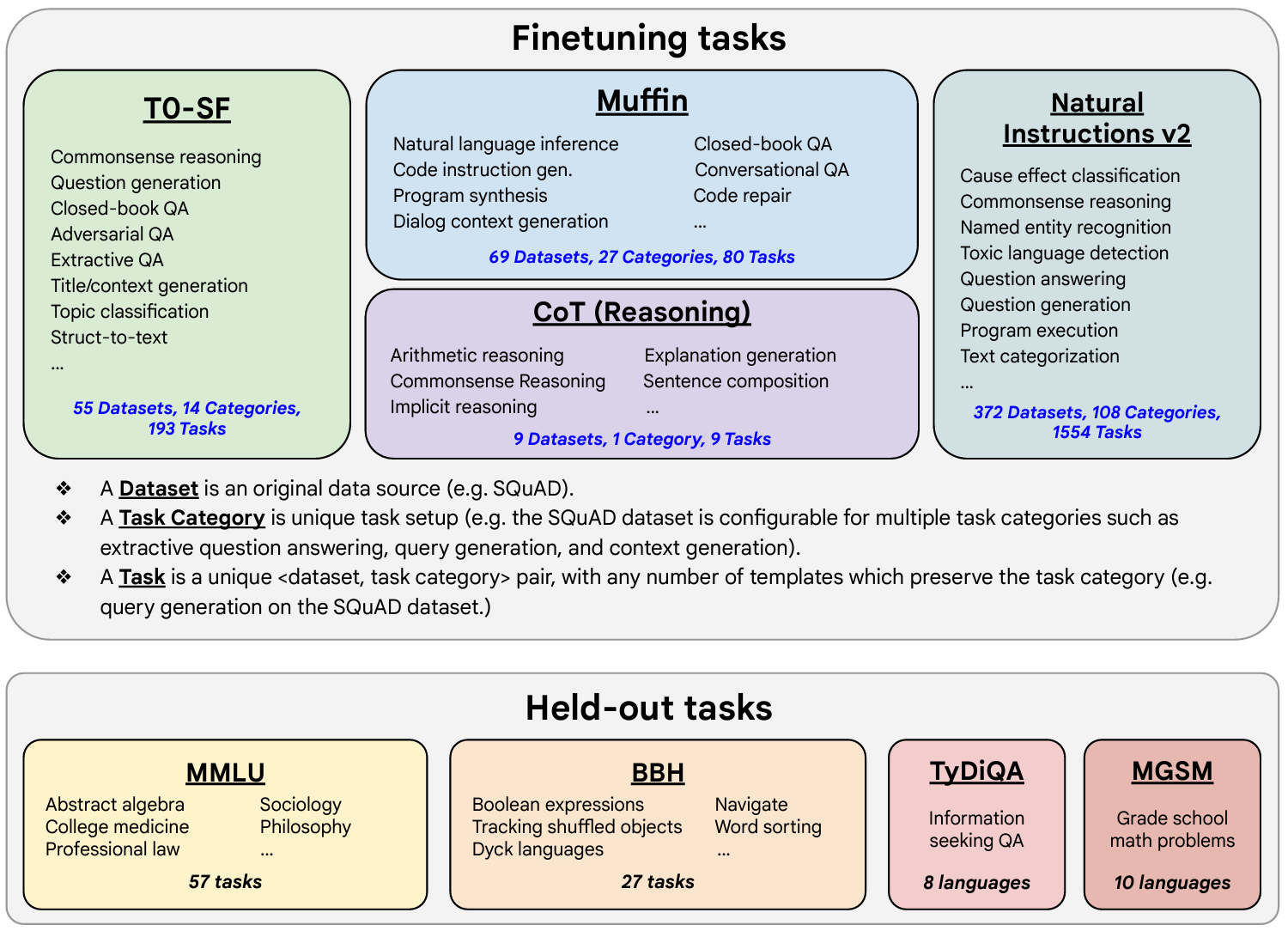

- In total, it’s been fine tuned on 473 datasets across 146 task categories.

- Those datasets are chosen from other models and papers as shown here

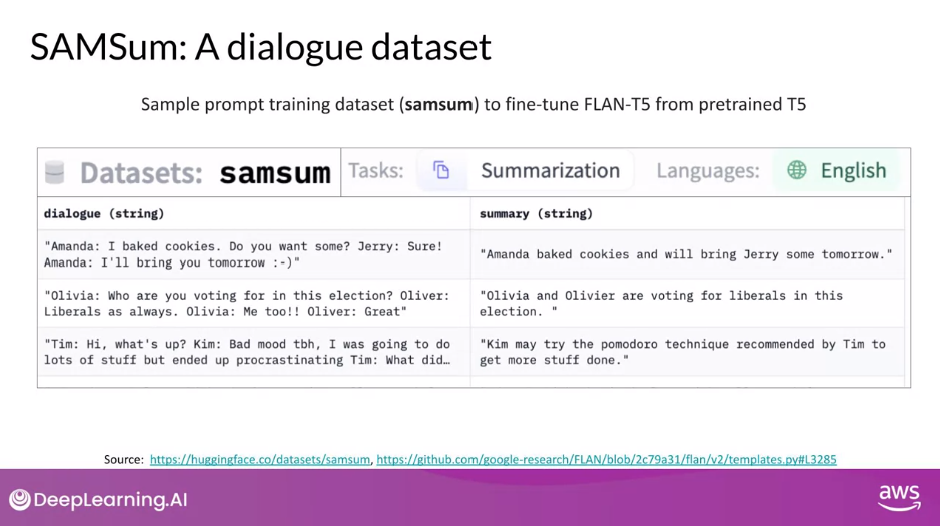

- One example of a prompt dataset used for summarization tasks in FLAN-T5 is SAMSum.

- It’s part of the muffin collection of tasks and datasets and is used to train language models to summarize dialogue

SAMSum: Dialogue Dataset

- SAMSum is a dataset with 16,000 messenger like conversations with summaries. Three examples are shown here with the dialogue on the left and the summaries on the right.

- The dialogues and summaries were crafted by linguists for the express purpose of generating a high-quality training dataset for language models.

- The linguists were asked to create conversations similar to those that they would write on a daily basis, reflecting their proportion of topics of their real life messenger conversations.

- Although language experts then created short summaries of those conversations that included important pieces of information and names of the people in the dialogue

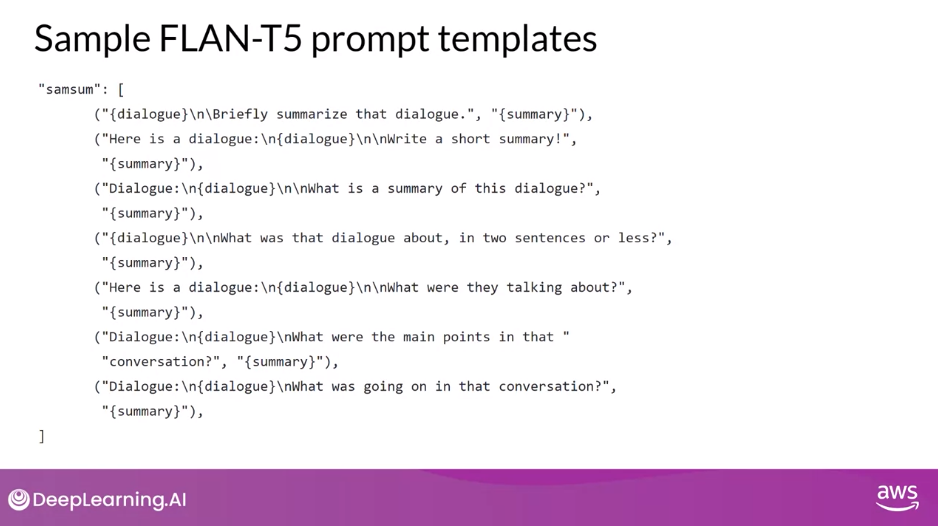

Sample FLAN-T5 Prompt Templates

- Here is a prompt template designed to work with this SAMSum dialogue summary dataset.

- The template is actually comprised of several different instructions that all basically ask the model to do this same thing.

- Summarize a dialogue.

- For example, briefly summarize that dialogue.

- What is a summary of this dialogue?

- What was going on in that conversation?

- Including different ways of saying the same instruction helps the model generalize and perform better.

- Just like the prompt templates you saw earlier, you see that in each case, the dialogue from the SAMSum dataset is inserted into the template wherever the dialogue field appears.

- The summary is used as the label.

- After applying this template to each row in the SAMSum dataset, you can use it to fine tune a dialogue summarization task

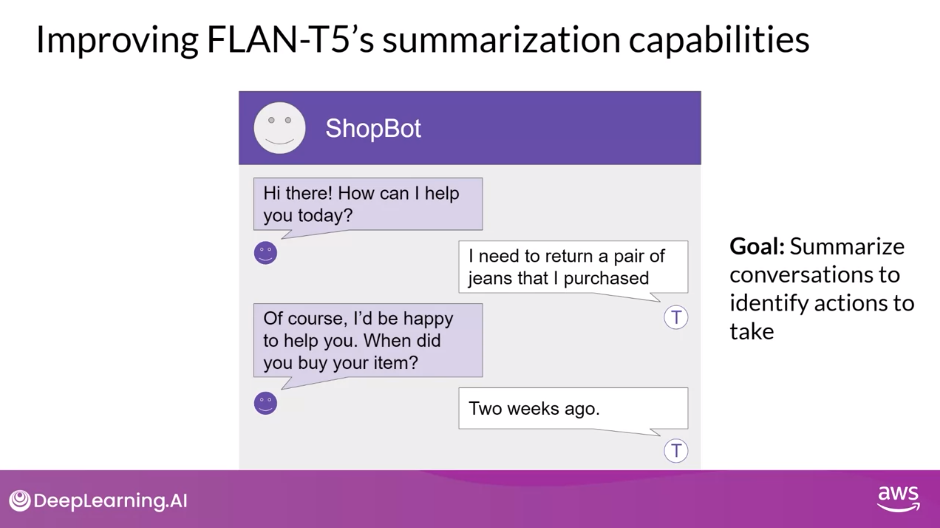

While FLAN-T5 is a great general use model that shows good capability in many tasks. You may still find that it has room for improvement on tasks for your specific use case

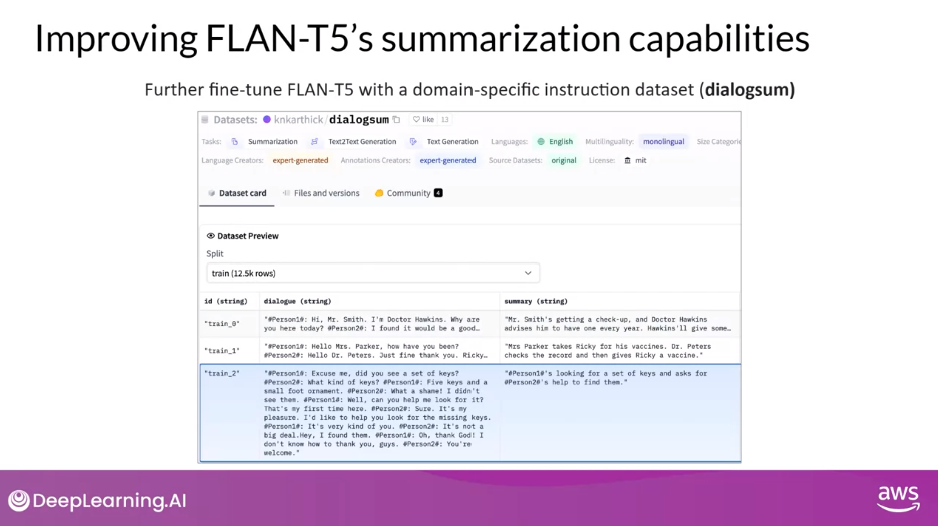

Improving FLAN-T5’s Summarization Capabilities

- For example, imagine you’re a data scientist building an app to support your customer service team, process requests received through a chat bot, like the one shown here.

- Your customer service team needs a summary of every dialogue to identify the key actions that the customer is requesting and to determine what actions should be taken in response

- The SAMSum dataset gives FLAN-T5 some abilities to summarize conversations.

- However, the examples in the dataset are mostly conversations between friends about day-to-day activities and don’t overlap much with the language structure observed in customer service chats.

- You can perform additional fine-tuning of the FLAN-T5 model using a dialogue dataset that is much closer to the conversations that happened with your bot.

dialogsum Dataset

- This is the exact scenario that you’ll explore in the lab this week

- You’ll make use of an additional domain specific summarization dataset called dialogsum to improve FLAN-T5’s is ability to summarize support chat conversations.

- This dataset consists of over 13,000 support chat dialogues and summaries.

- The dialogsum dataset is not part of the FLAN-T5 training data, so the model has not seen these conversations before

- Let’s take a look at example from dialogsum and discuss how a further round of fine-tuning can improve the model.

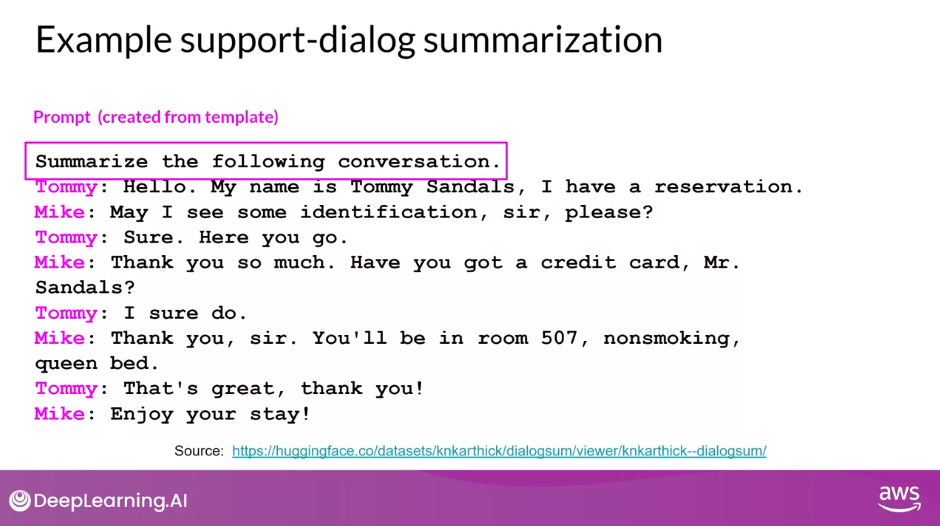

- This is a support chat that is typical of the examples in the dialogsum dataset.

- The conversation is between a customer and a staff member at a hotel check-in desk.

- The chat has had a template applied so that the instruction to summarize the conversation is included at the start of the text

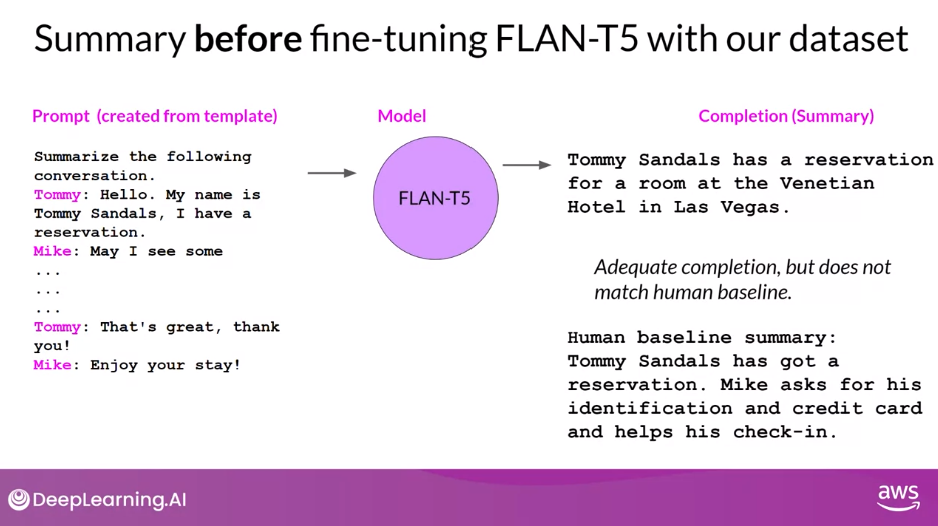

Now, let’s take a look at how FLAN-T5 responds to this prompt before doing any additional fine-tuning

Summary Before Fine-tuning

- Note that the prompt is now condensed on the left to give you more room to examine the completion of the model.

- Here is the model’s response to the instruction.

- You can see that the model does as it’s able to identify that the conversation was about a reservation for Tommy.

- However, it does not do as well as the human-generated baseline summary, which includes important information such as Mike asking for information to facilitate check-in and the models completion has also invented information that was not included in the original conversation, specifically the name of the hotel and the city it was located in

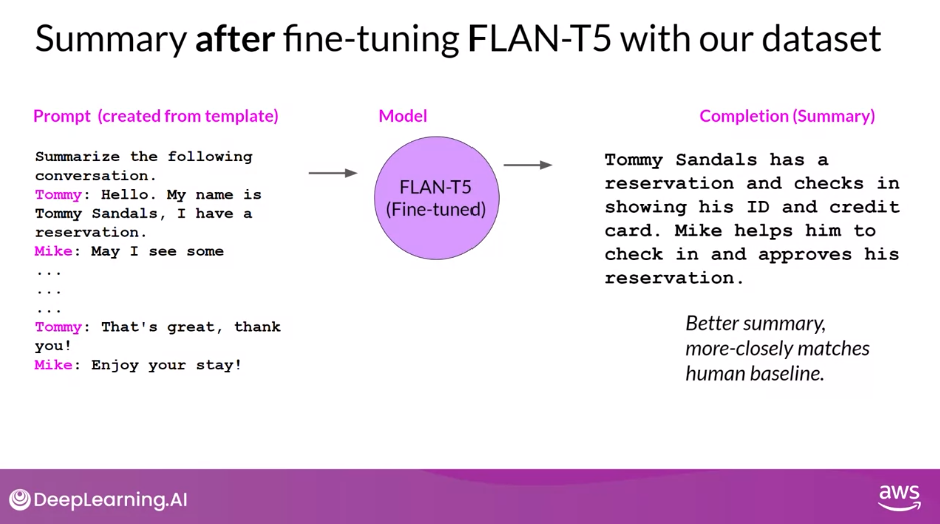

Summary After Fine-tuning

- Hopefully, you will agree that this is closer to the human-produced summary.

- There is no fabricated information and the summary includes all of the important details, including the names of both people participating in the conversation

This example, use the public dialogsum dataset to demonstrate fine-tuning on custom data

Fine-tuning with Your Own Data

- In practice, you’ll get the most out of fine-tuning by using your company’s own internal data.

- For example, the support chat conversations from your customer support application.

- This will help the model learn the specifics of how your company likes to summarize conversations and what is most useful to your customer service colleagues

Question: What is the purpose of fine-tuning with prompt datasets?

To improve the performance and adaptability of a pre-trained language model for specific tasks.

Correct

This option accurately describes the purpose of fine-tuning with prompt datasets. It aims to improve the performance and adaptability of a pre-trained language model by training it on specific tasks using instruction prompts.

Reading: Scaling Instruct Models

FLAN - Fine-tuned LAnguage Net

This paper introduces FLAN (Fine-tuned LAnguage Net), an instruction finetuning method, and presents the results of its application. The study demonstrates that by fine-tuning the 540B PaLM model on 1836 tasks while incorporating Chain-of-Thought Reasoning data, FLAN achieves improvements in generalization, human usability, and zero-shot reasoning over the base model. The paper also provides detailed information on how each of these aspects was evaluated.

Here is the image from the lecture slides that illustrates the fine-tuning tasks and datasets employed in training FLAN. The task selection expands on previous works by incorporating dialogue and program synthesis tasks from Muffin and integrating them with new Chain of Thought Reasoning tasks. It also includes subsets of other task collections, such as T0 and Natural Instructions v2. Some tasks were held-out during training, and they were later used to evaluate the model’s performance on unseen tasks.

Model Evaluation

- How can you formalize the improvement in performance of your fine-tuned model over the pre-trained model you started with?

- Let’s explore several metrics that are used by developers of large language models that you can use to assess the performance of your own models and compare to other models out in the world

LLM Evaluation Challenges

- In traditional machine learning, you can assess how well a model is doing by looking at its performance on training and validation data sets where the output is already known.

- You’re able to calculate simple metrics such as accuracy, which states the fraction of all predictions that are correct because the models are deterministic

- But with large language models where the output is non-deterministic and language-based evaluation is much more challenging

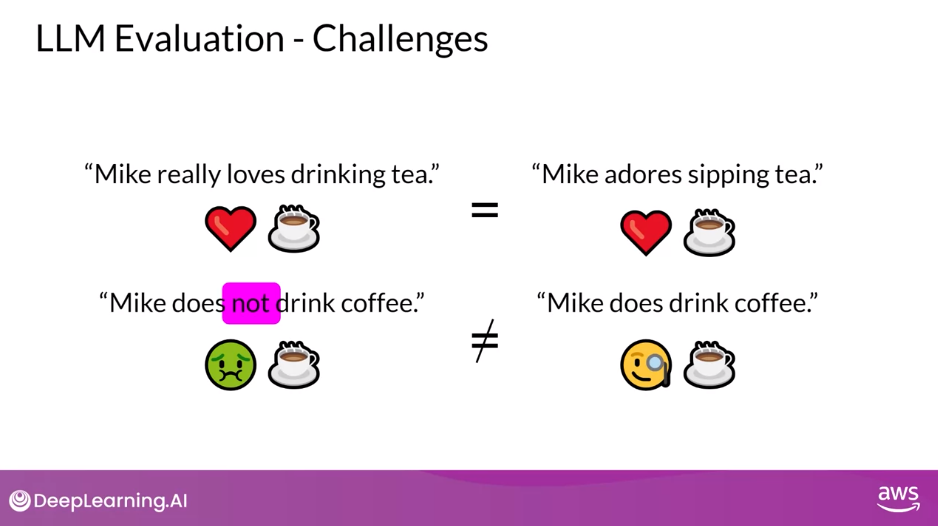

First Pair of Sentences

- Take, for example, the sentence, Mike really loves drinking tea.

- This is quite similar to Mike adores sipping tea.

- But how do you measure the similarity?

Second Pair of Sentences

- Let’s look at these other two sentences.

- Mike does not drink coffee, and Mike does drink coffee.

- There is only one word difference between these two sentences.

- However, the meaning is completely different.

- Now, for humans like us with squishy organic brains, we can see the similarities and differences.

- But when you train a model on millions of sentences, you need an automated, structured way to make measurements

LLM Evaluation Metrics

- ROUGE and BLEU, are two widely used evaluation metrics for different tasks.

- ROUGE (The Recall-Oriented Understudy for Gisting Evaluation) is primarily employed to assess the quality of automatically generated summaries by comparing them to human-generated reference summaries.

- BLEU (Bilingual Evaluation Understudy) is an algorithm designed to evaluate the quality of machine-translated text, again, by comparing it to human-generated translations.

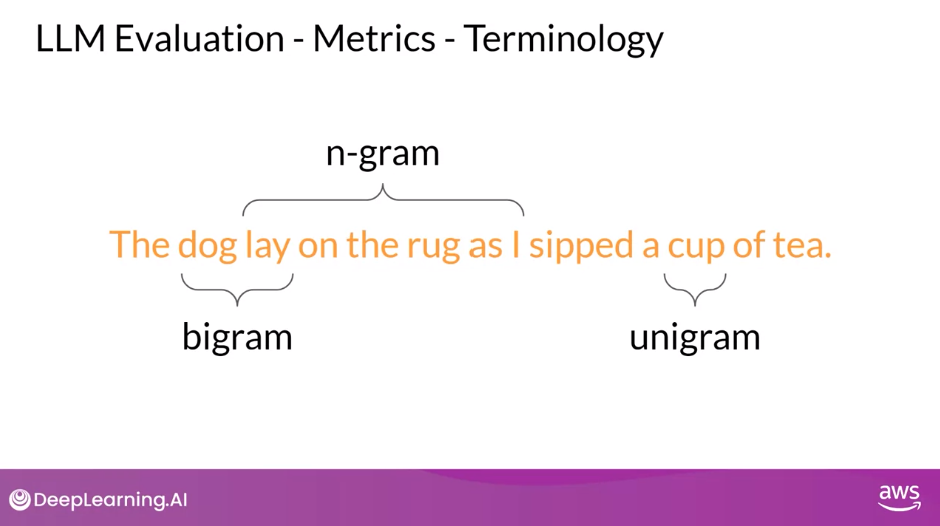

Terminology Review

- In the anatomy of language, a unigram is equivalent to a single word.

- A bigram is two words and n-gram is a group of n-words

ROUGE Score

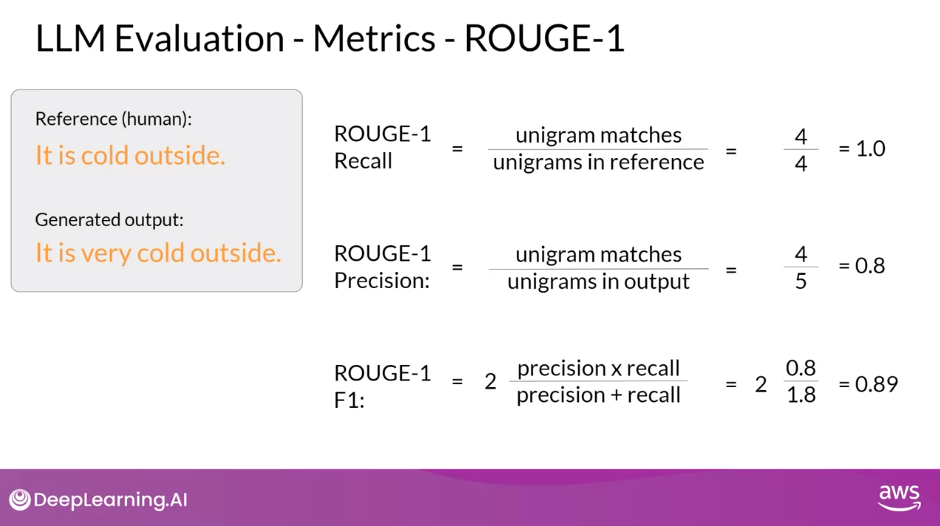

ROUGE-1

- To do so, let’s look at a human-generated reference sentence.

- Reference (human): It is cold outside

- Generated output: It is very cold outside

- You can perform simple metric calculations similar to other machine learning tasks using recall, precision, and F1.

- The recall metric measures the number of words or unigrams that are matched between the reference and the generated output divided by the number of words or unigrams in the reference.

- Gets a perfect score of one as all the generated words match words in the reference.

- Precision measures the unigram matches divided by the output size.

- The F1 score is the harmonic mean of both of these values.

- The recall metric measures the number of words or unigrams that are matched between the reference and the generated output divided by the number of words or unigrams in the reference.

- These are very basic metrics that only focused on individual words, hence the one in the name, and don’t consider the ordering of the words.

- It can be deceptive. It’s easily possible to generate sentences that score well but would be subjectively poor

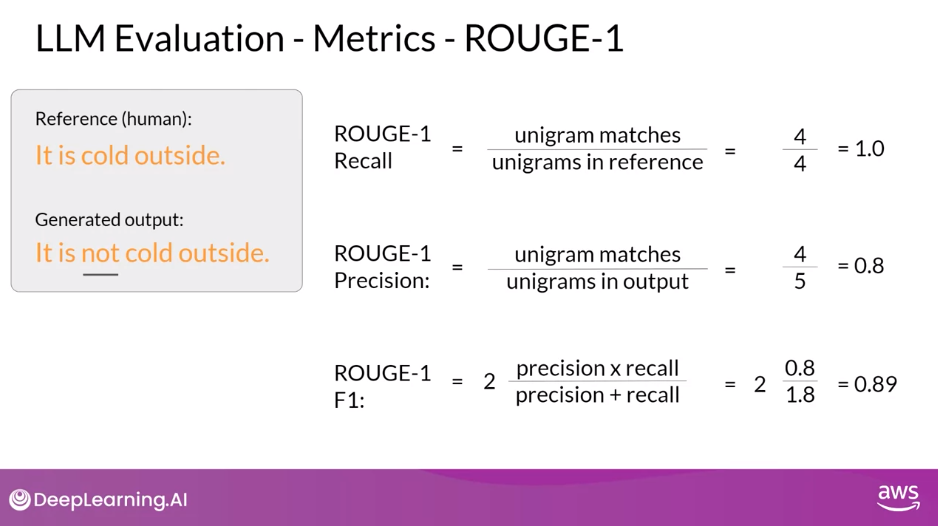

- Stop for a moment and imagine that the sentence generated by the model was different by just one word.

- Generated output: It is not cold outside.

- The scores would be the same

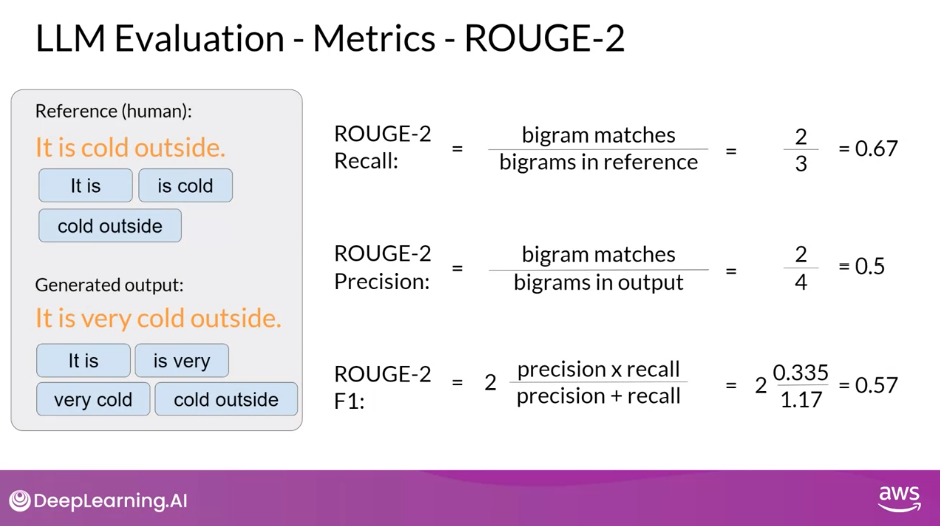

ROUGE-2

- You can get a slightly better score by taking into account bigrams or collections of two words at a time from the reference and generated sentence.

- By working with pairs of words you’re acknowledging in a very simple way, the ordering of the words in the sentence.

- By using bigrams, you’re able to calculate a ROUGE-2.

- Now, you can calculate the recall, precision, and F1 score using bigram matches instead of individual words.

- You’ll notice that the scores are lower than the ROUGE-1 scores.

- With longer sentences, they’re a greater chance that bigrams don’t match, and the scores may be even lower

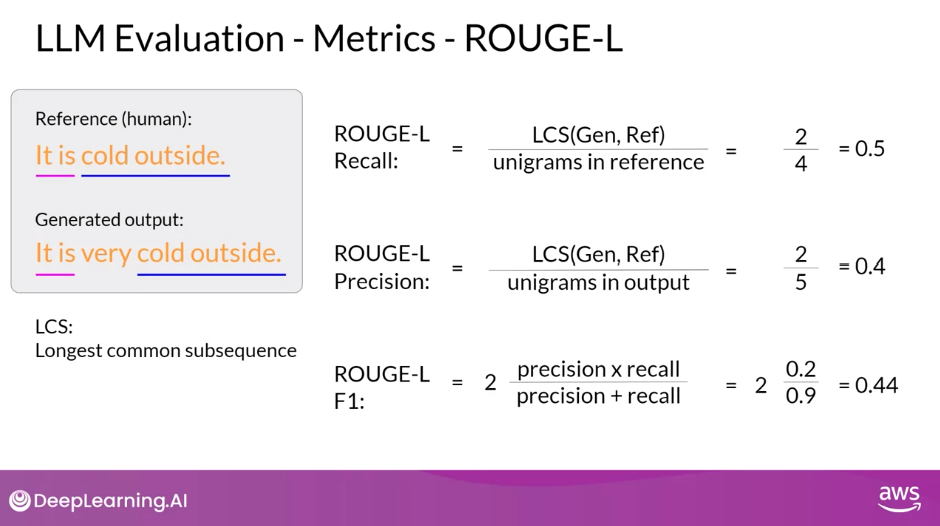

ROUGE-L

- Rather than continue on with ROUGE numbers growing bigger to n-grams of three or fours, let’s take a different approach.

- Instead, you’ll look for the Longest Common Subsequence (LCS) present in both the generated output and the reference output.

- In this case, the longest matching sub-sequences are, it is and cold outside, each with a length of two.

- You can now use the LCS value to calculate the recall precision and F1 score, where the numerator in both the recall and precision calculations is the length of the longest common subsequence, in this case, two.

- Collectively, these three quantities are known as the ROUGE-L score.

- As with all of the ROUGE scores, you need to take the values in context.

- You can only use the scores to compare the capabilities of models if the scores were determined for the same task.

- For example, summarization. ROUGE scores for different tasks are not comparable to one another

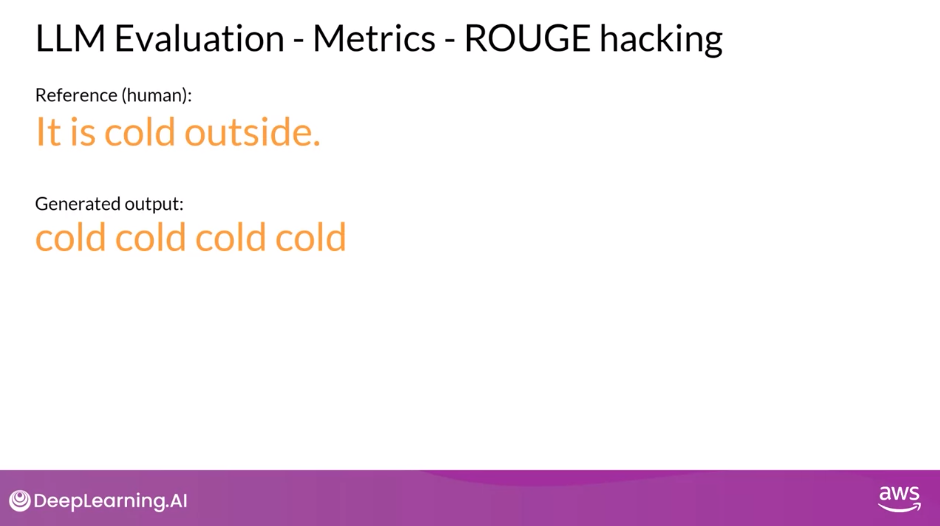

ROUGE Hacking

- As you’ve seen, a particular problem with simple ROUGE scores is that it’s possible for a bad completion to result in a good score.

- Generated output: cold, cold, cold, cold.

- As this generated output contains one of the words from the reference sentence, it will score quite highly, even though the same word is repeated multiple times.

- The ROUGE-1 precision score will be perfect

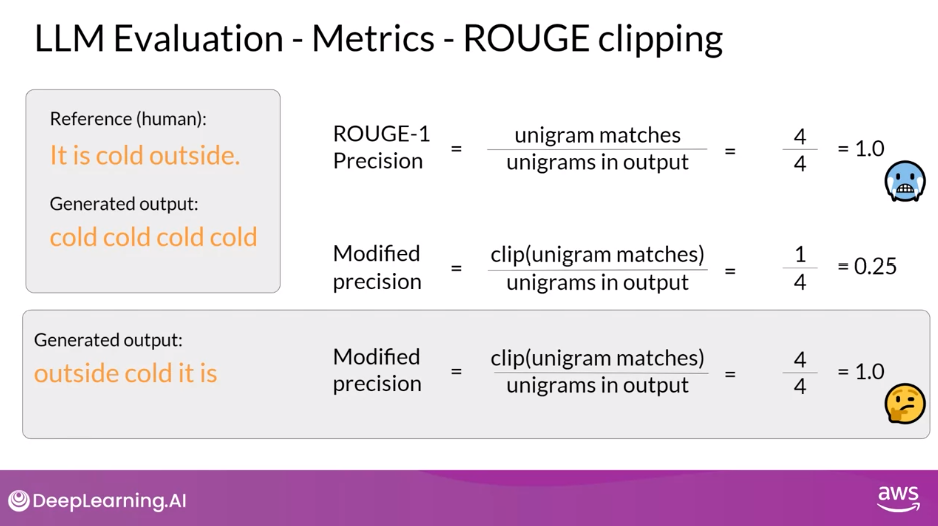

ROUGE Clipping

- One way you can counter this issue is by using a clipping function to limit the number of unigram matches to the maximum count for that unigram within the reference.

- In this case, there is one appearance of cold and the reference and so a modified precision with a clip on the unigram matches results in a dramatically reduced score.

- However, you’ll still be challenged if their generated words are all present, but just in a different order.

- For example, with this generated sentence, outside cold it is.

- This sentence was called perfectly even on the modified precision with the clipping function as all of the words and the generated output are present in the reference.

- Whilst using a different ROUGE score can help experimenting with a n-gram size that will calculate the most useful score will be dependent on the sentence, the sentence size, and your use case

Note: many language model libraries, for example, Hugging Face, include implementations of ROUGE score that you can use to easily evaluate the output of your model.

BLEU Score

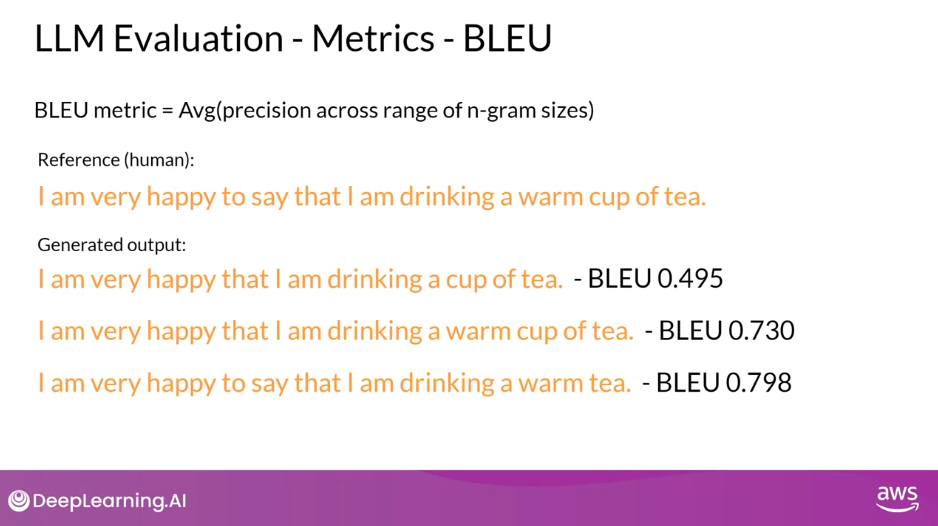

- BLEU (bilingual evaluation understudy) score is useful for evaluating the quality of machine-translated text

- The score itself is calculated using the average precision over multiple n-gram sizes, just like the ROUGE-1 score that we looked at before, but calculated for a range of n-gram sizes and then averaged.

- The BLEU score quantifies the quality of a translation by checking how many n-grams in the machine-generated translation match those in the reference translation.

- To calculate the score, you average precision across a range of different n-gram sizes.

- If you were to calculate this by hand, you would carry out multiple calculations and then average all of the results to find the BLEU score.

- For this example, let’s take a look at a longer sentence so that you can get a better sense of the scores value.

- The reference human-provided sentence is, I am very happy to say that I am drinking a warm cup of tea.

- Now, as you’ve seen these individual calculations in depth when you looked at ROUGE, I will show you the results of BLEU using a standard library.

- Calculating the BLEU score is easy with pre-written libraries from providers like Hugging Face and I’ve done just that for each of our candidate sentences.

- The first candidate is, I am very happy that I am drinking a cup of tea.

- The BLEU score is 0.495.

- As we get closer and closer to the original sentence, we get a score that is closer and closer to one

Summary: Evaluations Metrics

- Both ROUGE and BLEU are quite simple metrics and are relatively low-cost to calculate.

- You can use them for simple reference as you iterate over your models, but you shouldn’t use them alone to report the final evaluation of a large language model.

- Use ROUGE for diagnostic evaluation of summarization tasks and BLEU for translation tasks

For overall evaluation of your model’s performance, however, you will need to look at one of the evaluation benchmarks that have been developed by researchers

Benchmarks

- LLMs are complex, and simple evaluation metrics like the ROUGE and BLUE scores, can only tell you so much about the capabilities of your model.

- In order to measure and compare LLMs more holistically, you can make use of pre-existing datasets, and associated benchmarks that have been established by LLM researchers specifically for this purpose.

- Selecting the right evaluation dataset is vital, so that you can accurately assess an LLM’s performance, and understand its true capabilities.

- You’ll find it useful to select datasets that isolate specific model skills, like reasoning or common sense knowledge, and those that focus on potential risks, such as disinformation or copyright infringement.

- An important issue that you should consider is whether the model has seen your evaluation data during training.

- You’ll get a more accurate and useful sense of the model’s capabilities by evaluating its performance on data that it hasn’t seen before

- Benchmarks, such as GLUE, SuperGLUE, or HELM, cover a wide range of tasks and scenarios

- They do this by designing or collecting datasets that test specific aspects of an LLM

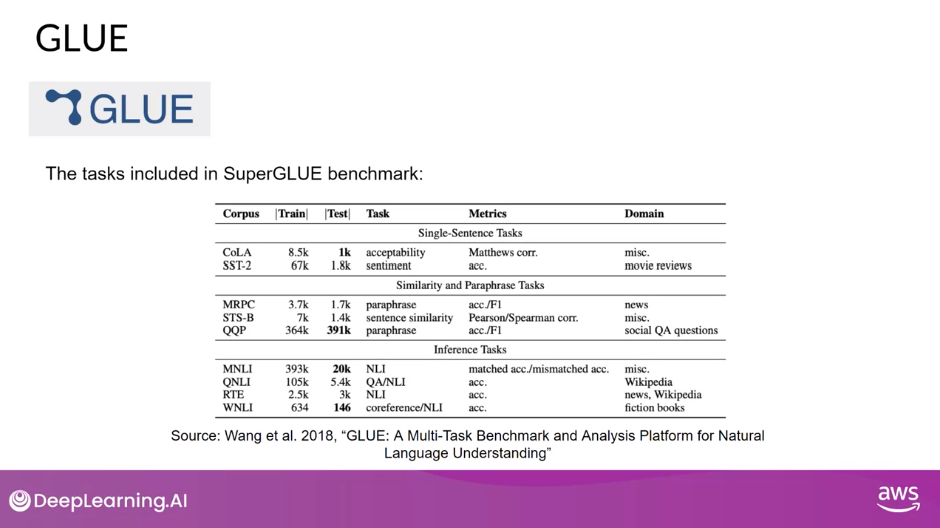

GLUE

- GLUE, or General Language Understanding Evaluation, was introduced in 2018.

- GLUE is a collection of natural language tasks, such as sentiment analysis and question-answering.

- GLUE was created to encourage the development of models that can generalize across multiple tasks, and you can use the benchmark to measure and compare the model performance

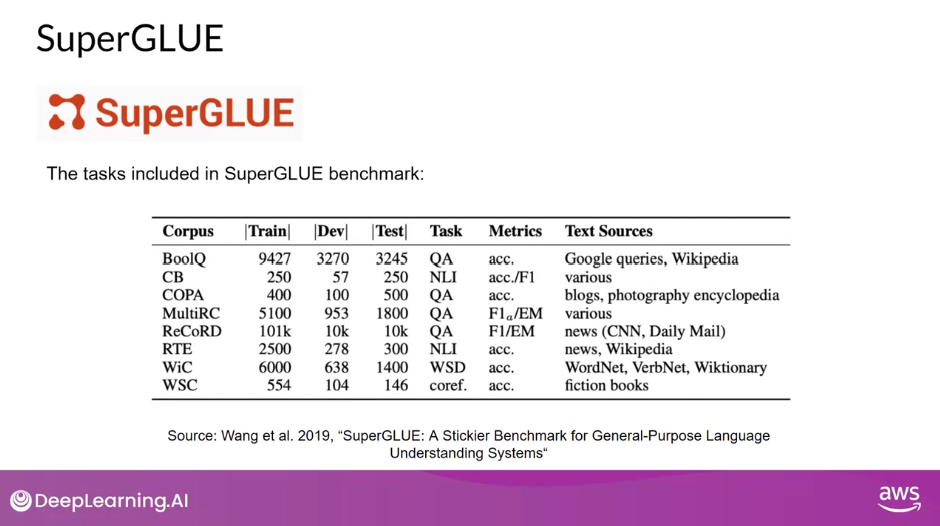

SuperGLUE

- As a successor to GLUE, SuperGLUE was introduced in 2019, to address limitations in its predecessor.

- It consists of a series of tasks, some of which are not included in GLUE, and some of which are more challenging versions of the same tasks.

- SuperGLUE includes tasks such as multi-sentence reasoning, and reading comprehension

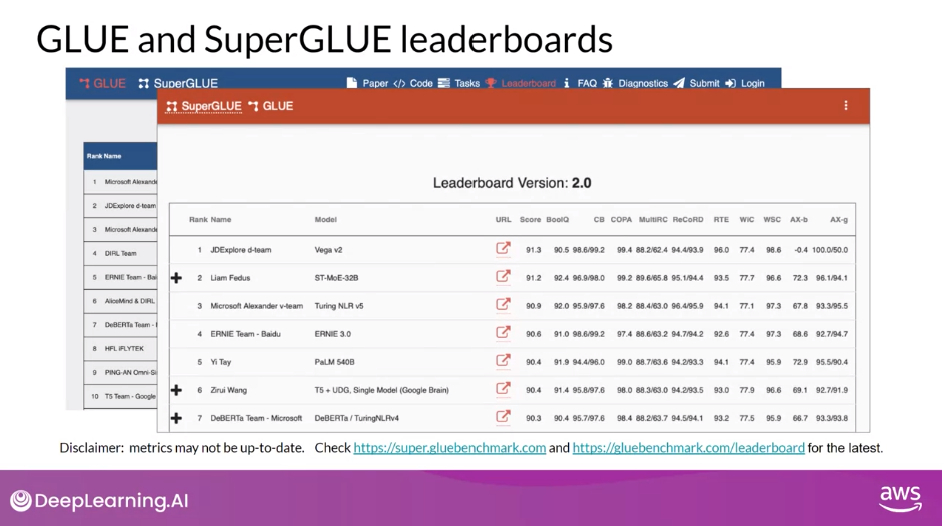

GLUE and SuperGLUE Leaderboards

- Both the GLUE and SuperGLUE benchmarks have leaderboards that can be used to compare and contrast evaluated models.

- The results page is another great resource for tracking the progress of LLMs

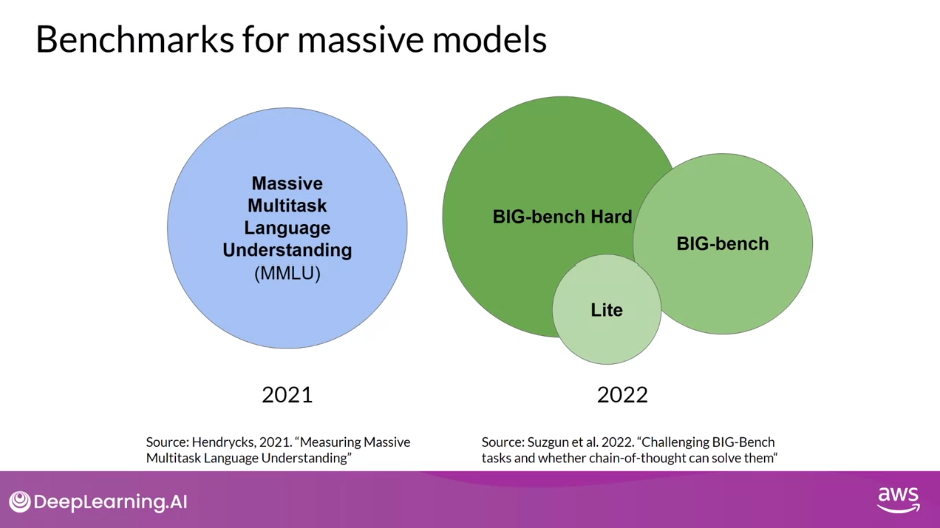

Benchmarks for Massive Models

- As models get larger, their performance against benchmarks such as SuperGLUE start to match human ability on specific tasks.

- That’s to say that models are able to perform as well as humans on the benchmarks tests, but subjectively we can see that they’re not performing at human level at tasks in general.

- There is essentially an arms race between the emergent properties of LLMs, and the benchmarks that aim to measure them

MMLU and BIG-bench

- Here are a couple of recent benchmarks that are pushing LLMs further.

- Massive Multitask Language Understanding, or MMLU, is designed specifically for modern LLMs.

- To perform well models must possess extensive world knowledge and problem-solving ability.

- Models are tested on elementary mathematics, US history, computer science, law, and more.

- In other words, tasks that extend way beyond basic language understanding.

- BIG-bench currently consists of 204 tasks, ranging through linguistics, childhood development, math, common sense reasoning, biology, physics, social bias, software development and more.

- BIG-bench comes in three different sizes, and part of the reason for this is to keep costs achievable, as running these large benchmarks can incur large inference costs

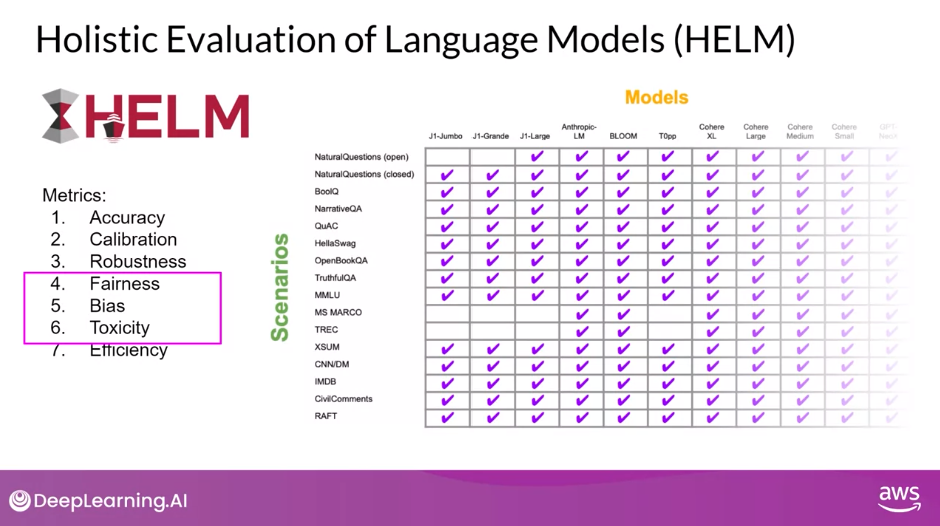

HELM

- A final benchmark you should know about is the Holistic Evaluation of Language Models, or HELM.

- The HELM framework aims to improve the transparency of models, and to offer guidance on which models perform well for specific tasks.

- HELM takes a multi-metric approach, measuring seven metrics across 16 core scenarios, ensuring that trade-offs between models and metrics are clearly exposed.

- One important feature of HELM is that it assesses on metrics beyond basic accuracy measures, like precision of the F1 score.

- The benchmark also includes metrics for fairness, bias, and toxicity, which are becoming increasingly important to assess as LLMs become more capable of human-like language generation, and in turn of exhibiting potentially harmful behavior.

- HELM is a living benchmark that aims to continuously evolve with the addition of new scenarios, metrics, and models

- You can take a look at the results page to browse the LLMs that have been evaluated, and review scores that are pertinent to your project’s needs.