Week 3 Part 2 - LLM-powered Applications

- Notes

- Model Optimizations for Deployment

- Generative AI Project Lifecycle Cheat Sheet

- Using the LLM in Applications

- Interacting with External Applications

- Helping LLMs Reason and Plan with Chain of Thought (CoT)

- Program-aided Language (PAL) Models

- LLMs Can Struggle with Mathematics

- PAL Models

- PAL Example

- LLM-powered Applications 2

- PAL Architecture

- ReAct: Combining Reasoning and Action

- ReAct: Synergizing Reasoning and Action in LLMs

- ReAct Instructions Define the Action Space

- Building Up the ReAct Prompt

- LangChain

- The Significance of Scale: Application Building

- Reading: ReAct: Reasoning and Action

- LLM Application Architectures

- Optional: AWS Sagemaker Jumpstart

These notes were developed using lectures/material/transcripts from the DeepLearning.AI & AWS - Generative AI with Large Language Models course

Notes

- Model Optimizations for Deployment

- Generative AI Project Lifecycle Stage 4 - Application Integration

- Questions to ask:

- How the LLM will function in deployment

- What additional resources the LLM may need

- How the model will be consumed

- Model Optimizations to Improve Application Performance

- LLM inference challenges

- Compute requirements

- Storage requirements

- Latency

- Challenge is to reduce model size while maintaining model performance

- Techniques to reduce model size and improve inference performance while limiting the impact on accuracy

- Distillation - uses a larger model to train a smaller model, the smaller model will be used for inference

- In practice, not as effective for generative decoder models, more effective for encoder-only models such as BERT that have a lot of representation redundancy

- Post-Training Quantization (PTQ) - transforms a model’s weights to a lower precision representation such as 16-bit floating point or 8-bit integer

- Pruning - eliminate weights (that are very close or equal to zero) that are not contributing much to overall model performance

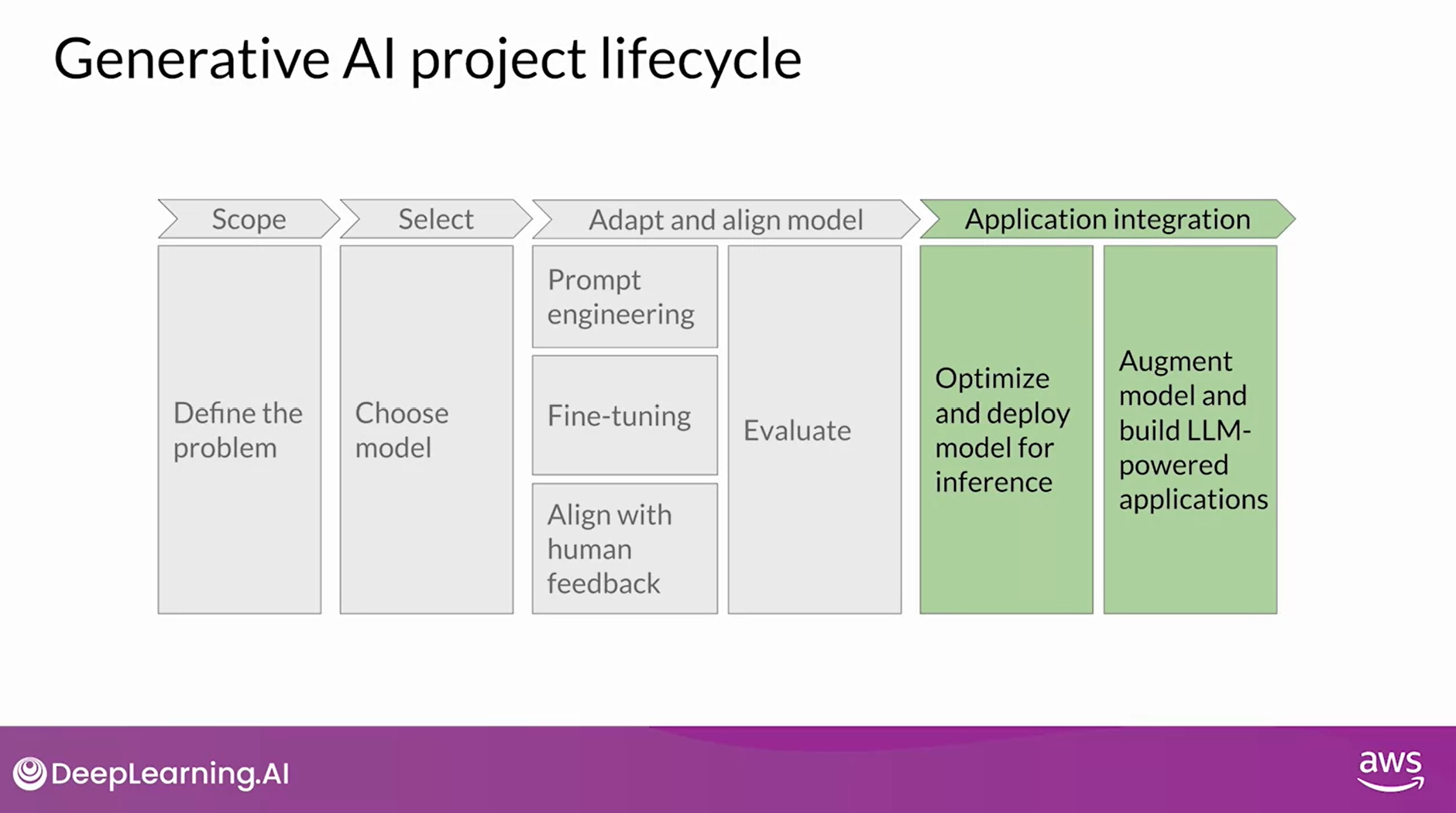

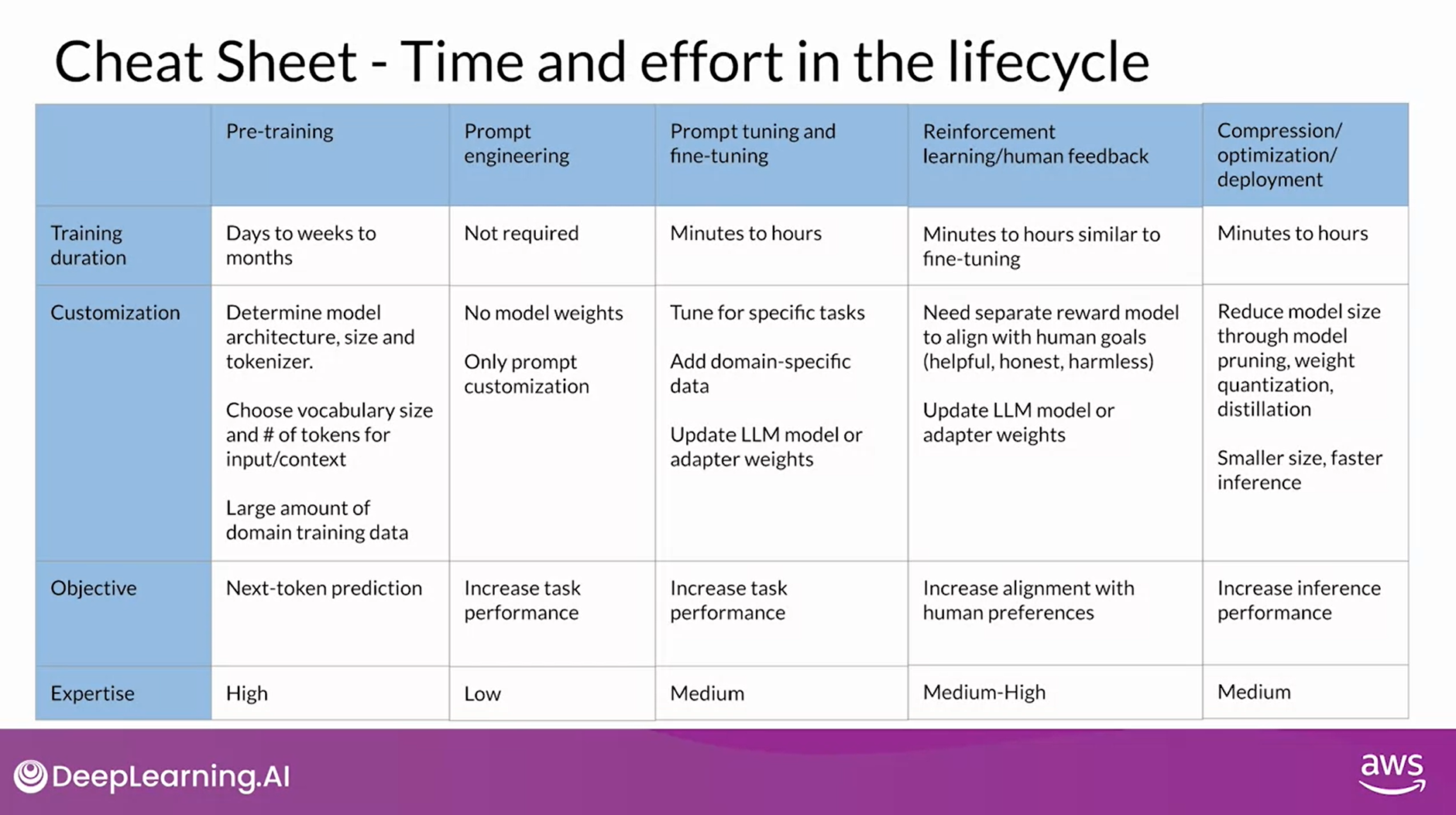

- Generative AI Project Lifecycle Cheat Sheet

- Pre-training

- Prompt Engineering

- Prompt Tuning and Fine-tuning

- Reinforcement Learning

- Compression/Optimization/Deployment

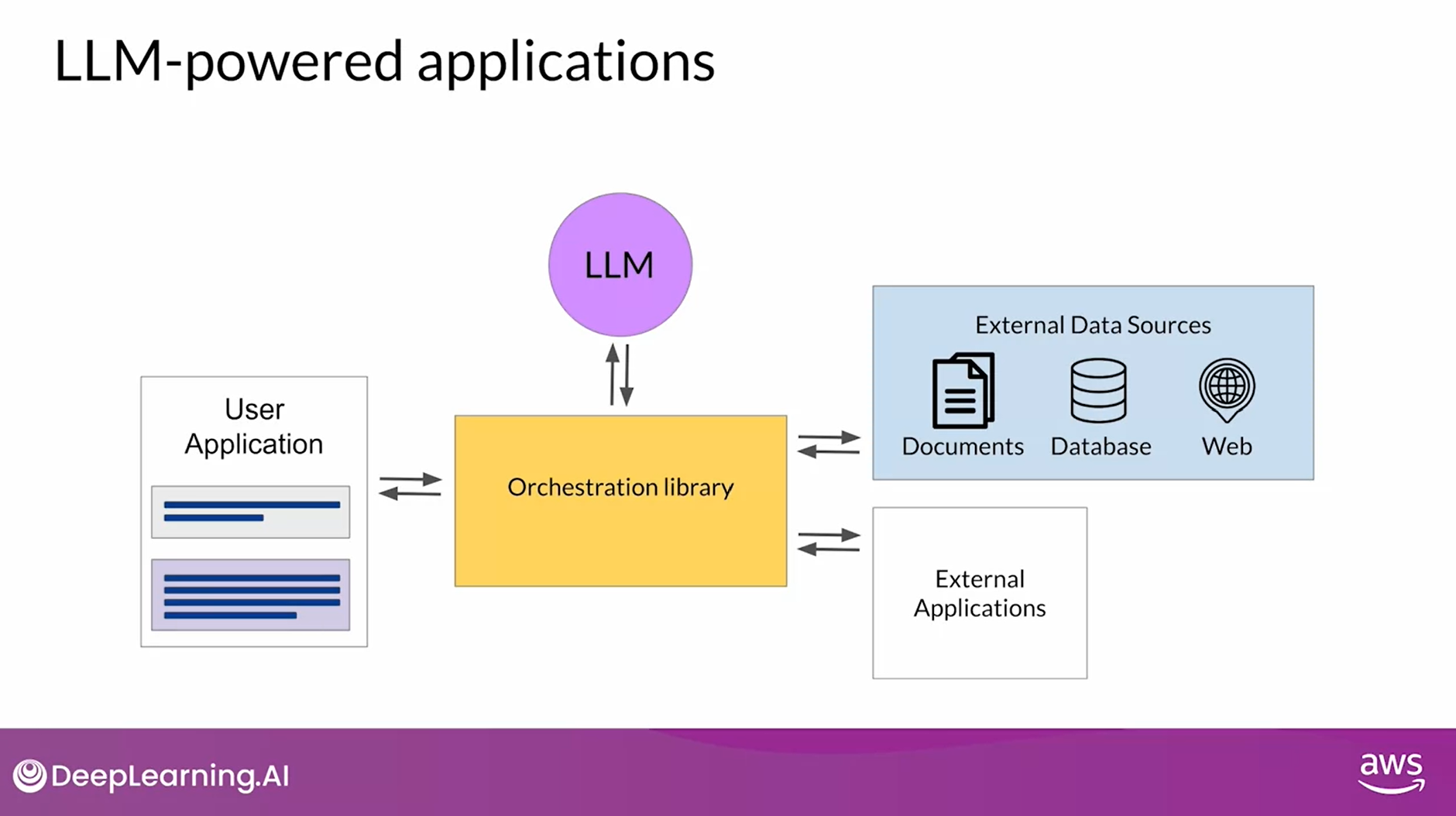

- Using the LLM in Applications

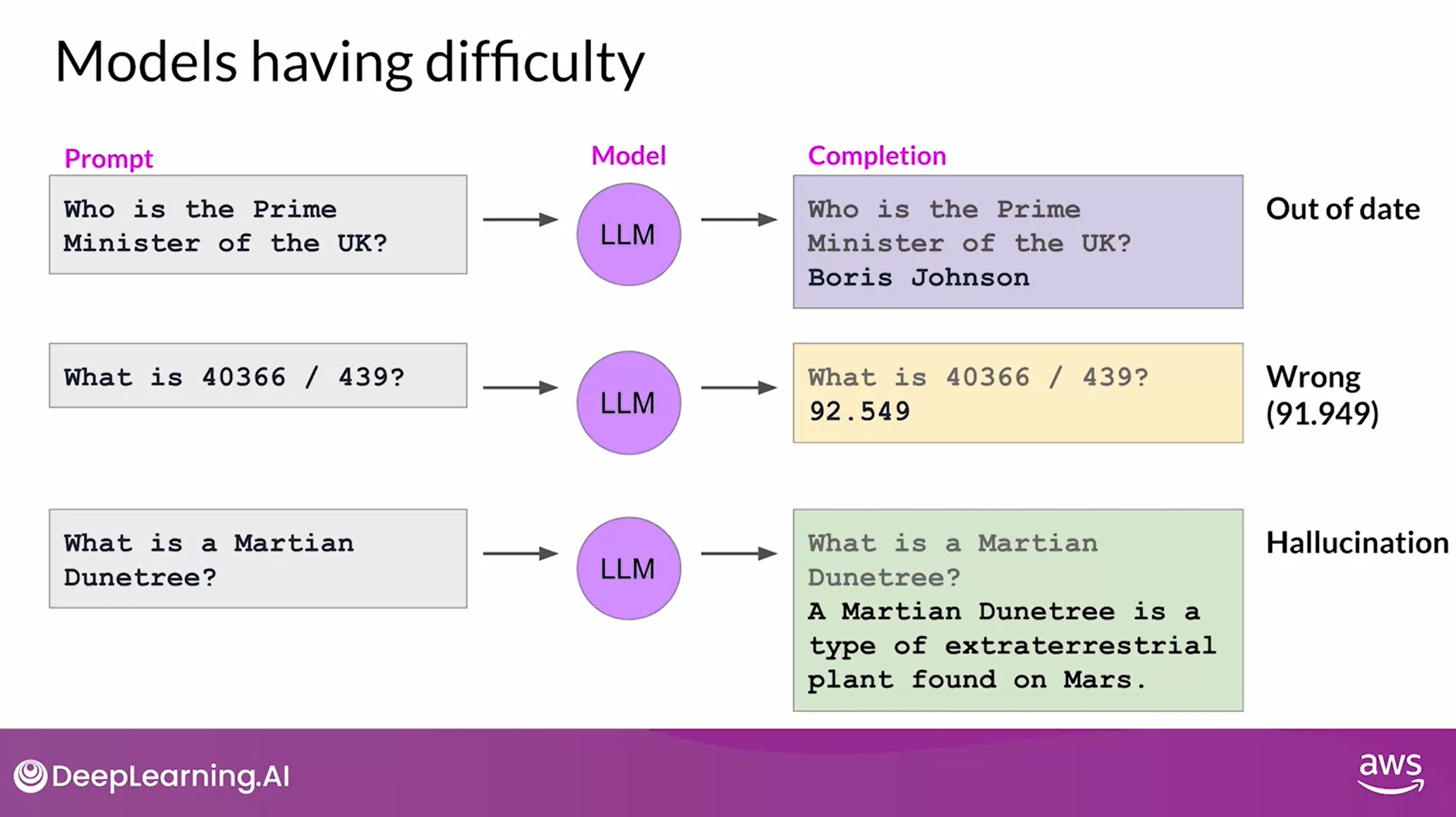

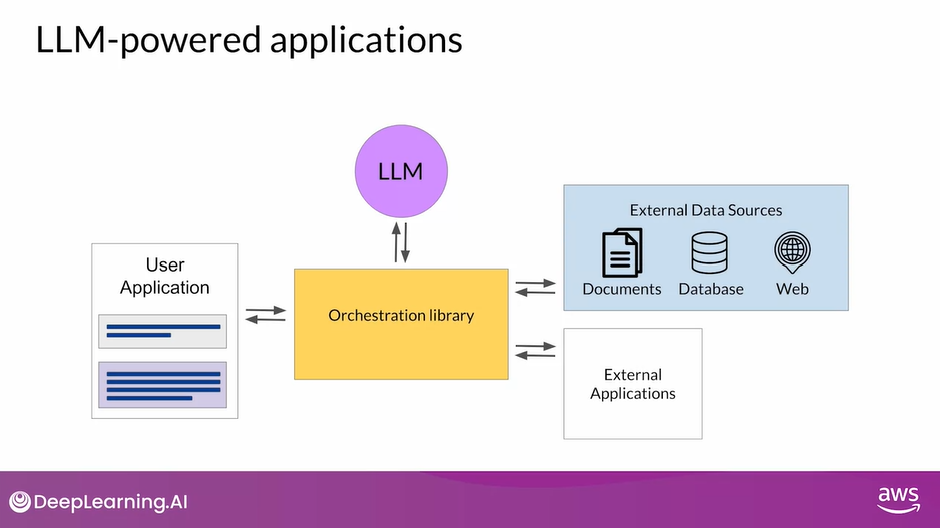

- LLM-powered Applications

- Problems

- Information may be out of date

- Struggle with complex math problems

- Hallucination

- Ways to overcome - connect them to external data sources and applications

- Retrieval Augmented Generation - published by Facebook in 2020

- Retriever - retrieves relevant information from an external corpus or knowledge base

- Encoder - encodes the query in the same format as the external documents

- External Data Source

- Data Preparation

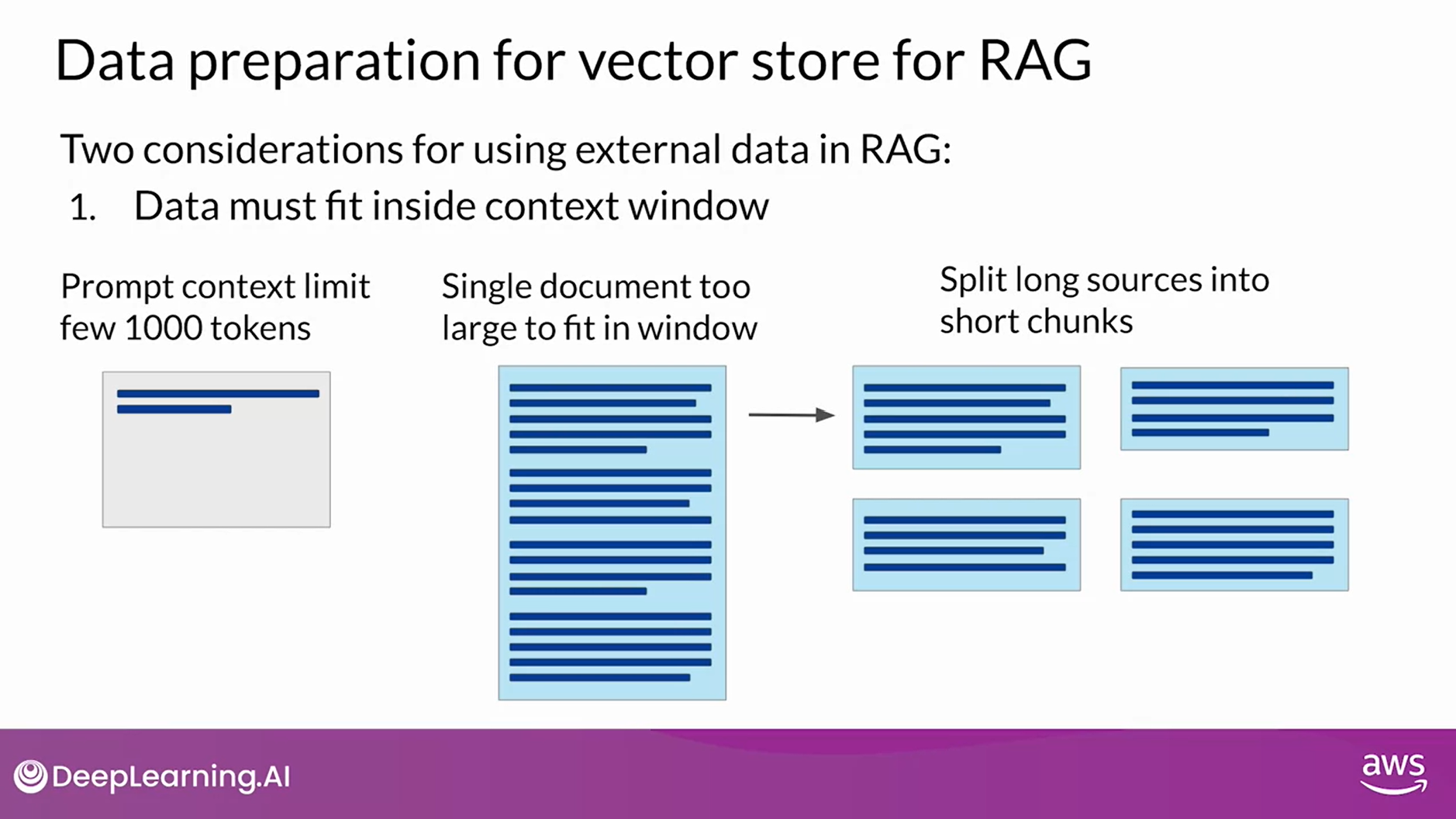

- Data must fit inside context window - text documents are broken into smaller chunks, each of which will fit in the context window of LLM

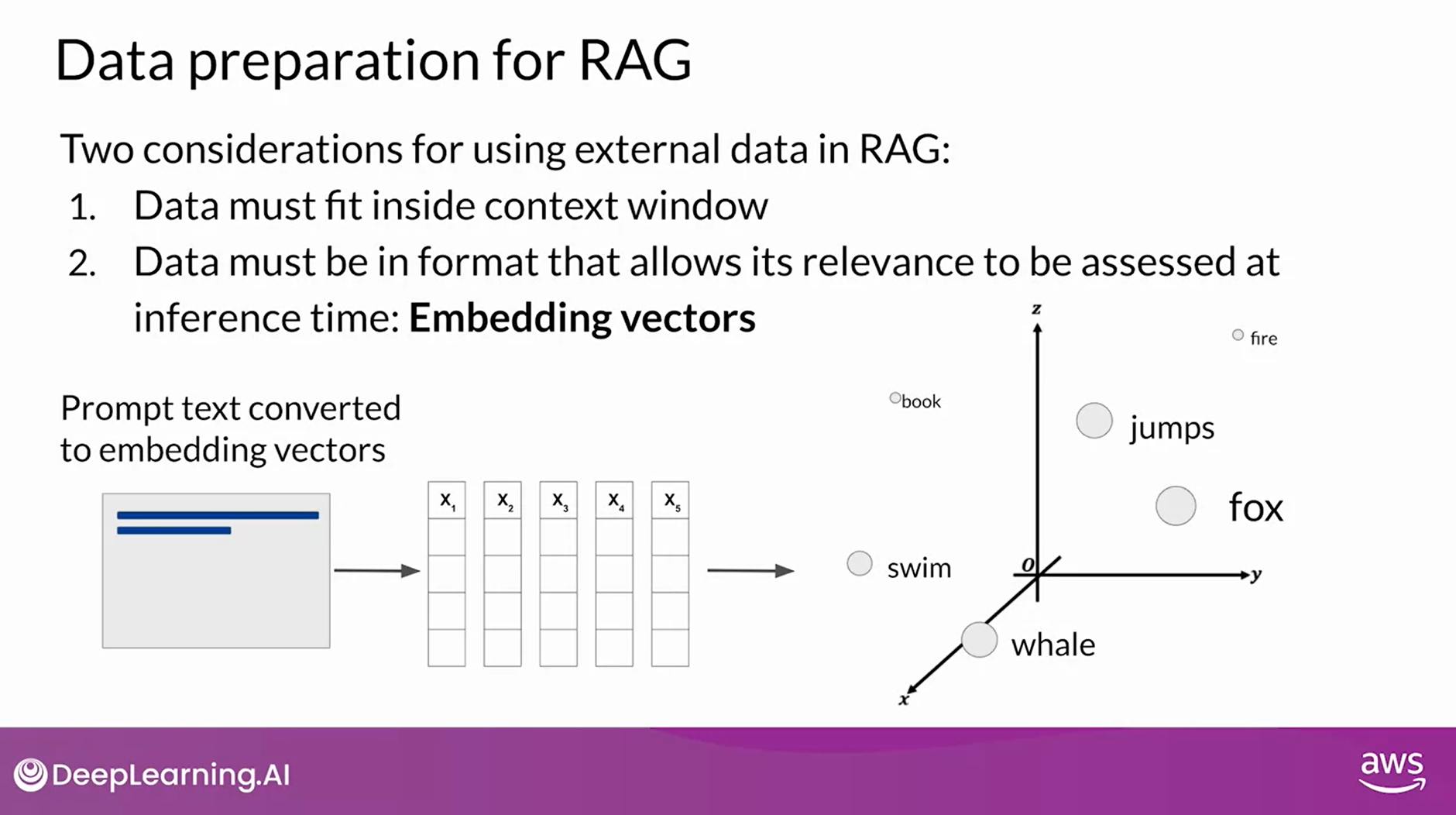

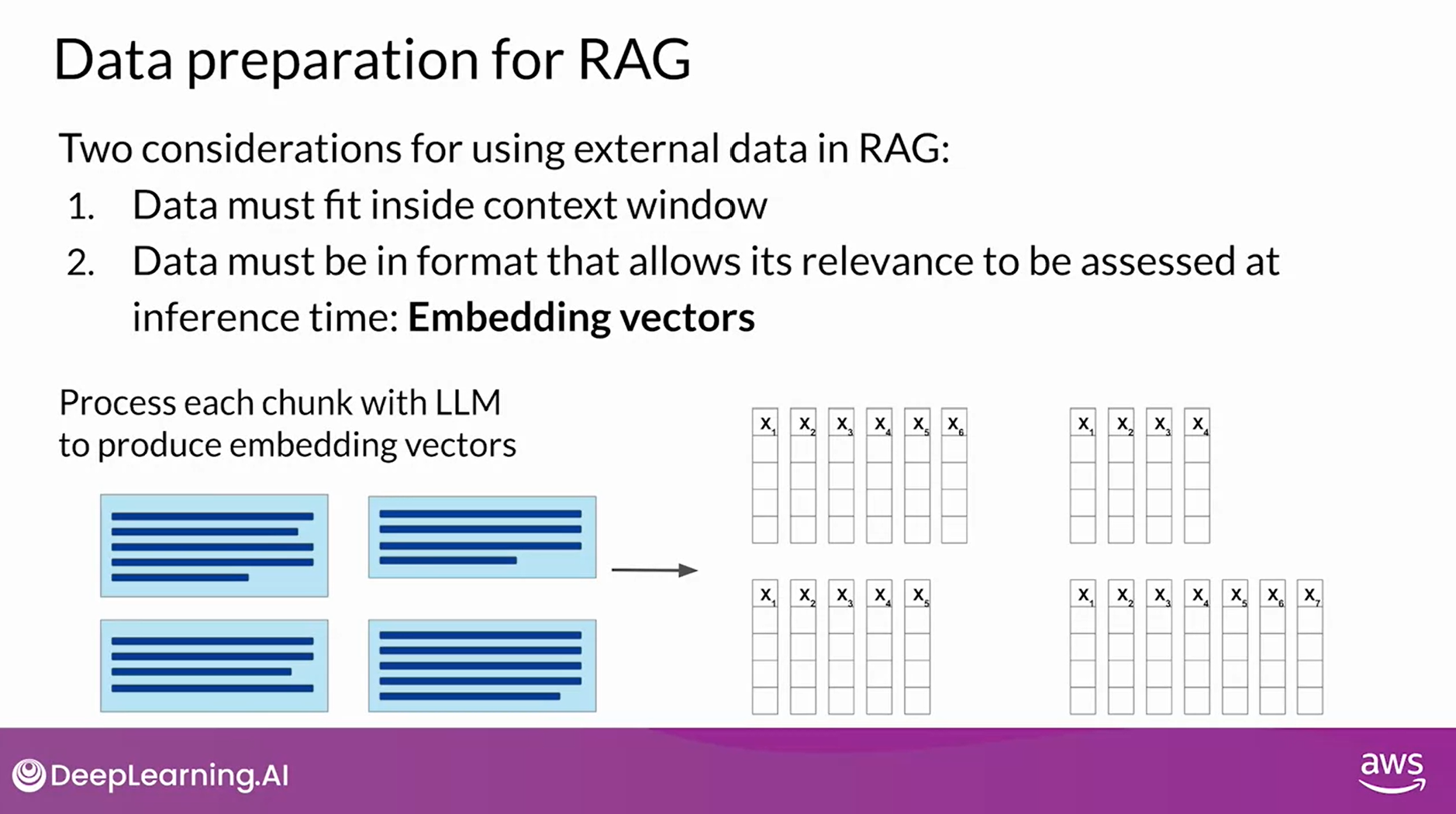

- Data must be in format that allows its relevance to be assessed at inference time: embedding vectors - allow the LLM to identify semantically related words through measures such as Cosine Similarity

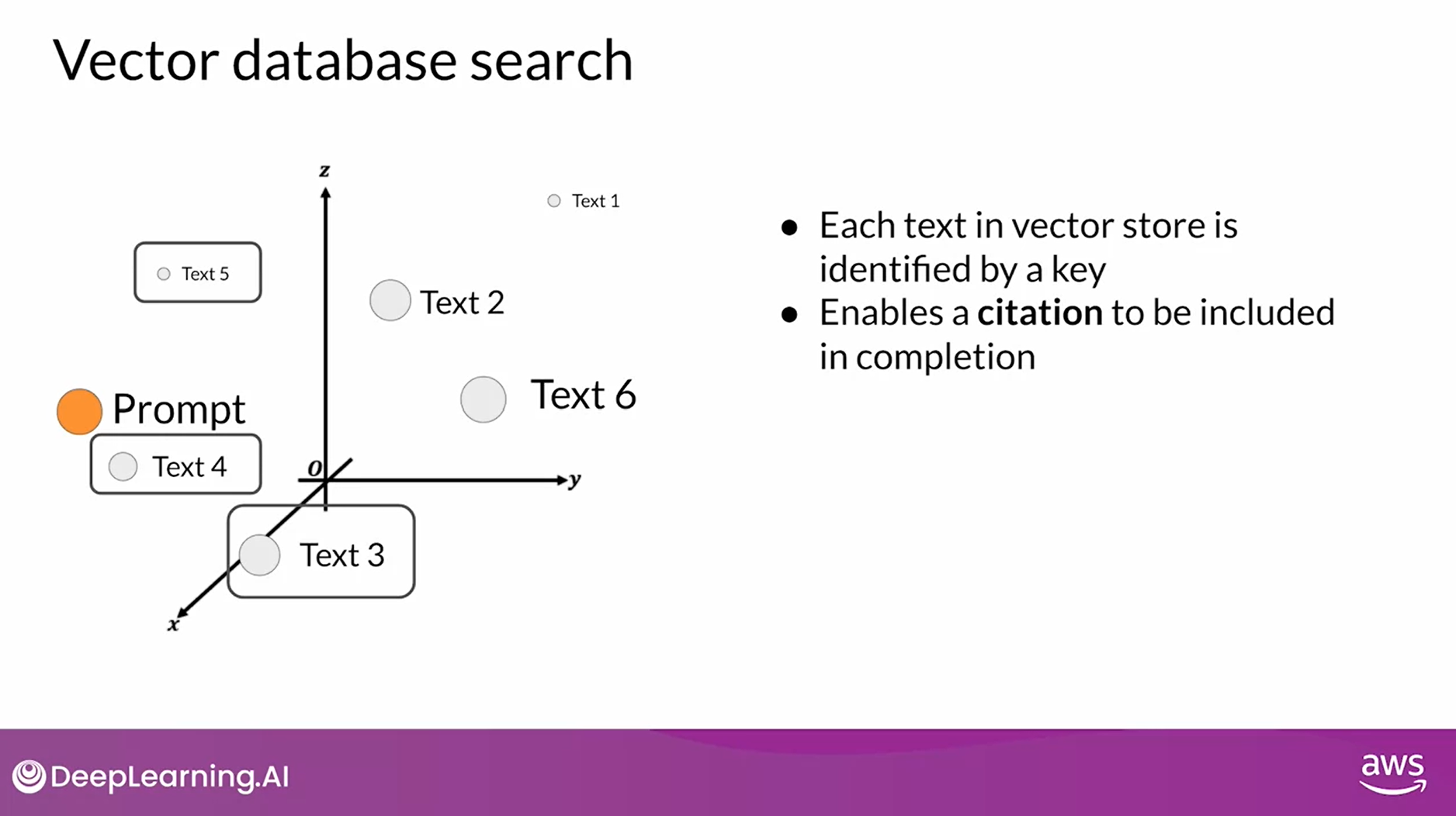

- Vector Database

- Store both the text representation as well as the embeddings

- Enable a fast and efficient kind of relevant search based on similarity

- Each vector is identified by a key

- Enables a citation to be included in completion

- Interacting with External Applications

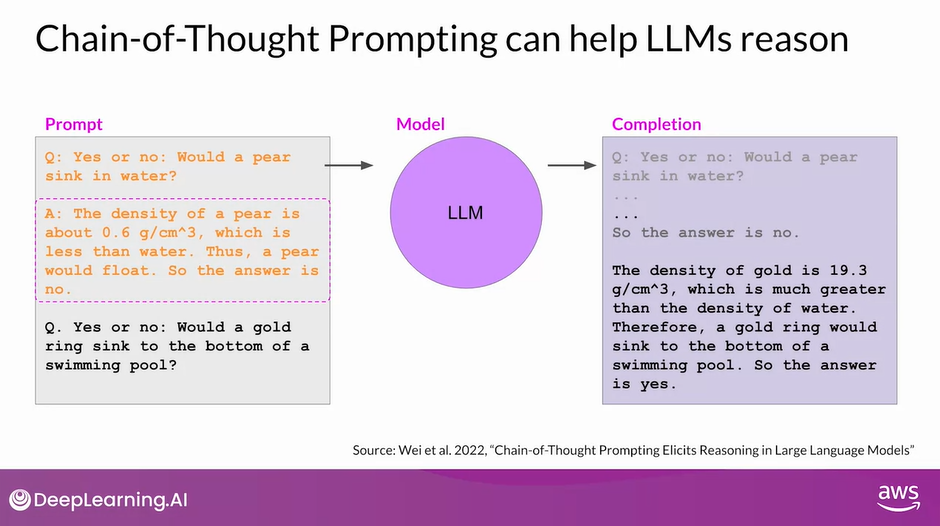

- Helping LLMs Reason and Plan with Chain of Thought (CoT)

- It works by including a series of intermediate reasoning steps into any examples that you use for one or few-shot inference

- By structuring the examples in this way, you’re essentially teaching the model how to reason through the task to reach a solution

- It is a powerful technique that improves the ability of the model to reason through problems

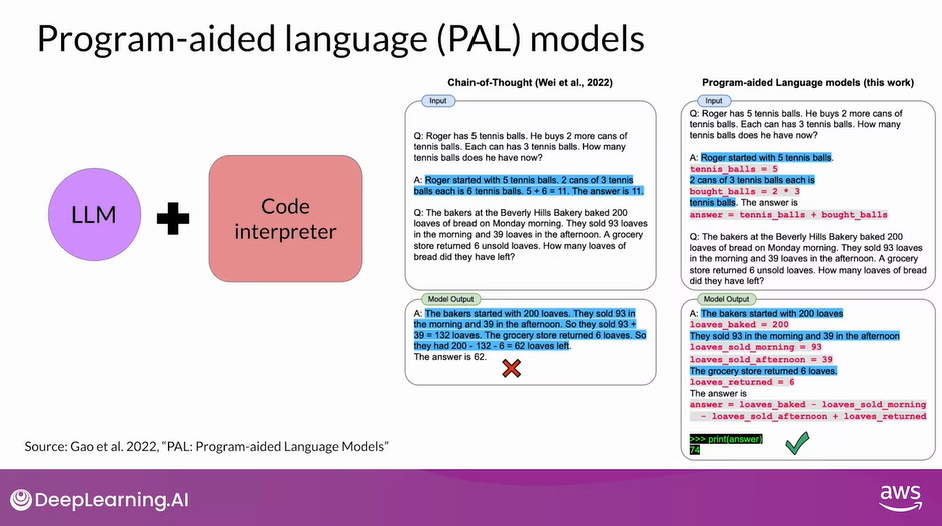

- Program-aided Language Models (PAL)

- Presented by researchers at Carnegie Mellon University in 2022

- Pairs an LLM with an external code interpreter to carry out calculations

- The method makes use of Chain-of-Thought (CoT) prompting to generate executable Python scripts

- ReAct: Combining Reasoning and Action

- ReAct - a prompting strategy that combines Chain-of-Thought (CoT) reasoning with action planning

- Proposed by researchers at Princeton and Google in 2022

- LangChain framework - provides you with modular pieces that contain the components necessary to work with LLMs

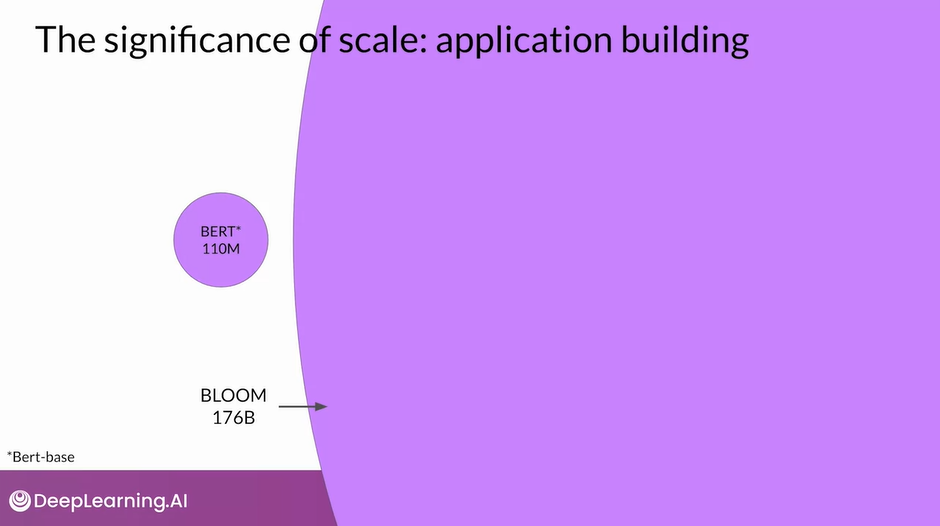

- Significance of Scale: Application Building

- Larger models are more capable

- Start with a larger model, collect a lot of user data in deployment, and use it to train and fine-tune a smaller model that you can switch to at a later time

- Reading: ReAct - Reasoning and Action

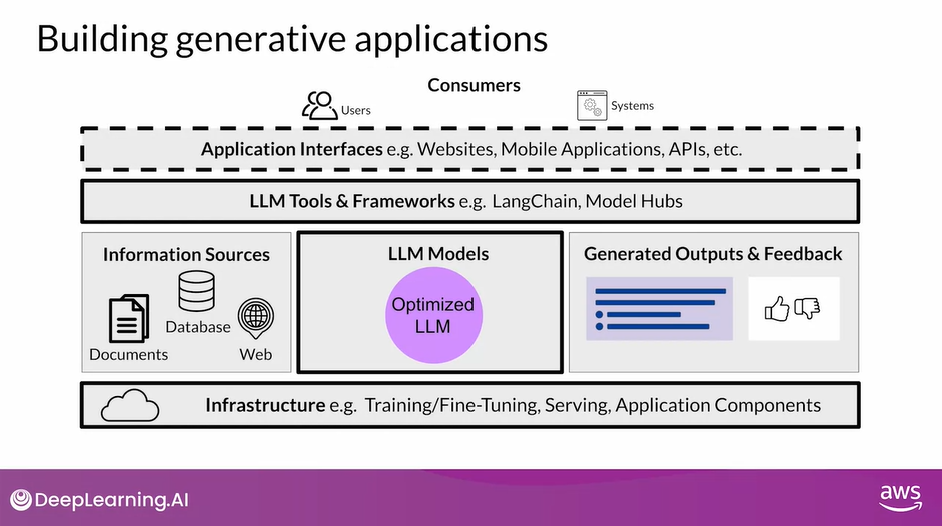

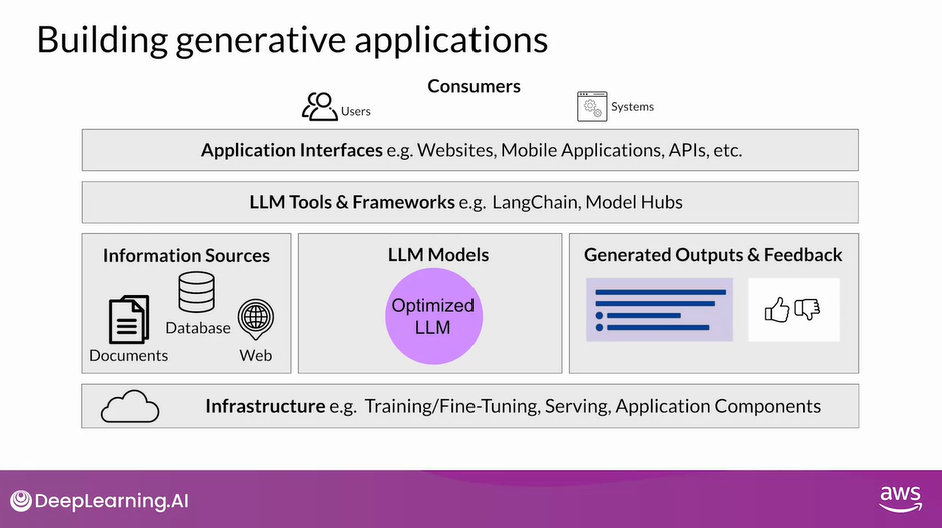

- LLM Application Architectures

- Infrastructure - provides the compute, storage, and network to serve up your LLMs, as well as to host your application components

- LLM Models

- Information Sources - required by RAG

- Gather Outputs & Feedback - capture and store the outputs, gather feedback that may be useful for additional fine-tuning, alignment, or evaluation

- LLM Tools & Frameworks - LangChain, Model Hubs, etc.

- Application Interfaces - Web interface or REST API

Model Optimizations for Deployment

Generative AI Project Lifecycle Stage 4 - Application Integration.

There are a number of important questions to ask at this stage.

- The first set is related to how your LLM will function in deployment.

- So how fast do you need your model to generate completions?

- What compute budget do you have available?

- And are you willing to trade off model performance for improved inference speed or lower storage?

- The second set of questions is tied to additional resources that your model may need.

- Do you intend for your model to interact with external data or other applications?

- And if so, how will you connect to those resources?

- Lastly, there’s the question of how your model will be consumed.

- What will the intended application or API interface that your model will be consumed through look like?

Let’s start by exploring a few methods that can be used to optimize your model before deploying it for inference

Model Optimizations to Improve Application Performance

- Large language models present inference challenges in terms of

- computing and storage requirements, as well as ensuring

- low latency for consuming applications

- These challenges persist whether you’re deploying on premises or to the cloud, and become even more of an issue when deploying to edge devices.

- One of the primary ways to improve application performance is to reduce the size of the LLM.

- This can allow for quicker loading of the model, which reduces inference latency.

- However, the challenge is to reduce the size of the model while still maintaining model performance.

- Some techniques work better than others for generative models, and there are tradeoffs between accuracy and performance.

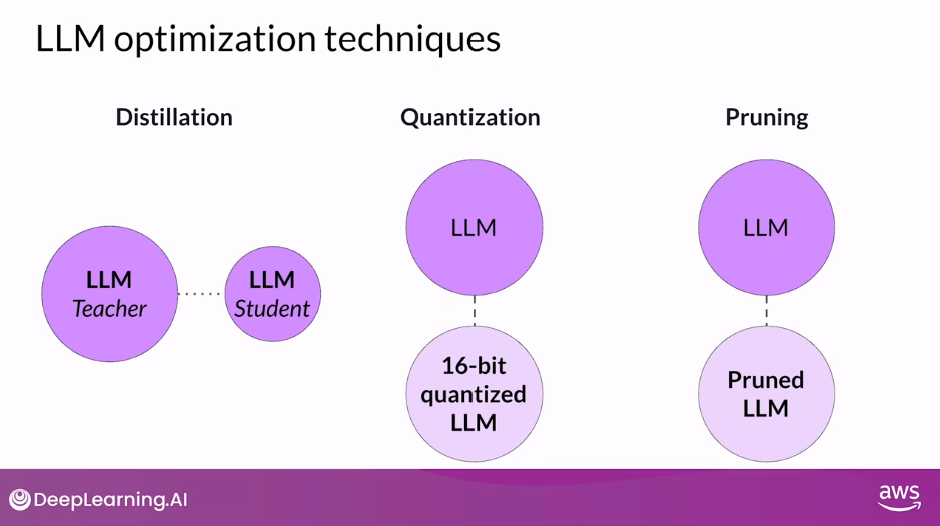

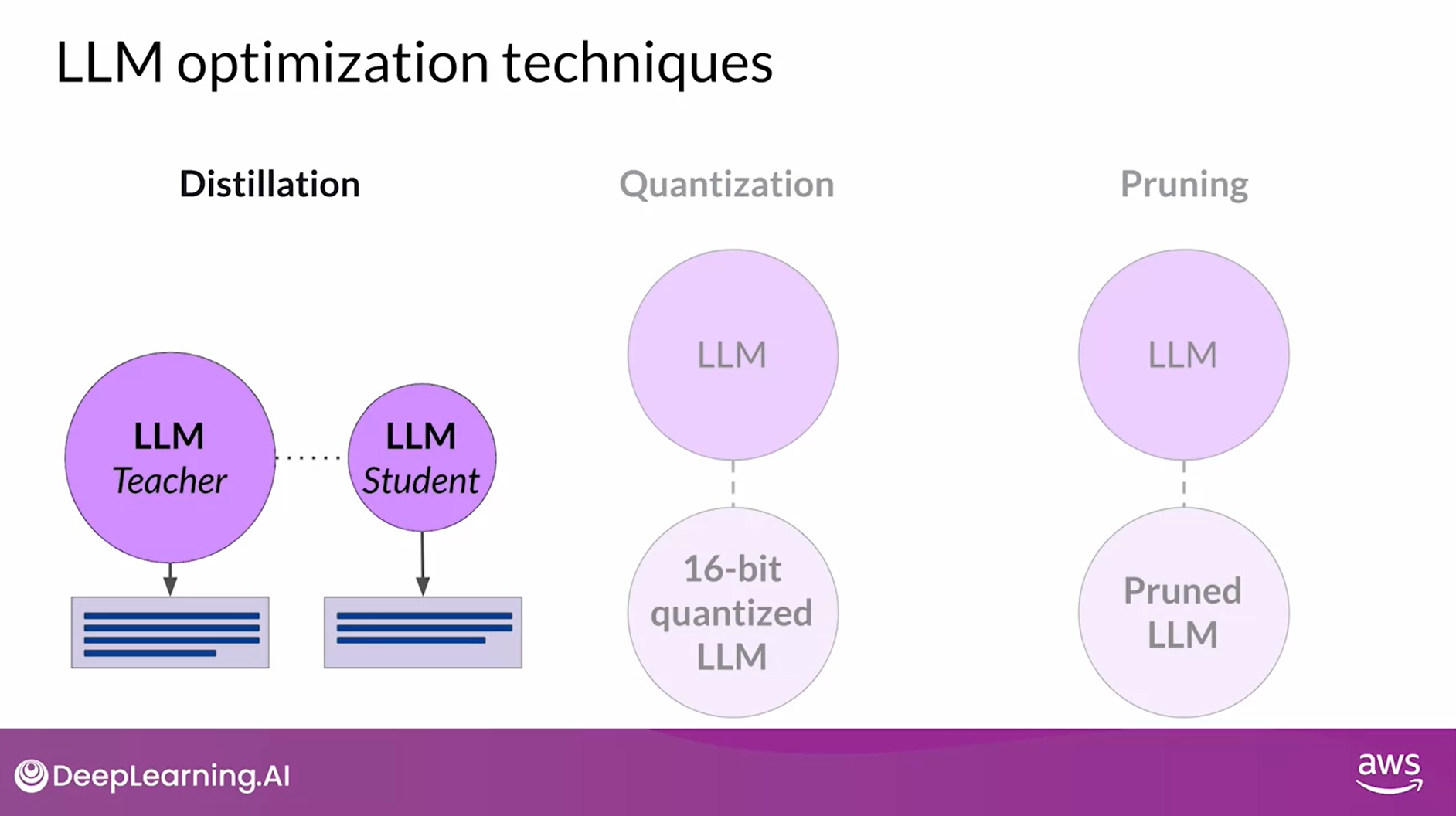

Three LLM Optimization Techniques

Three Techniques to reduce model size while maintaining model performance

- Distillation uses a larger model, the teacher model, to train a smaller model, the student model. You then use the smaller model for inference to lower your storage and compute budget.

- Similar to Quantization Aware Training (QAT), Post-Training Quantization (PTQ) transforms a model’s weights to a lower-precision representation, such as 16-bit floating point or 8-bit integer. This reduces the memory footprint of your model.

- Model Pruning removes redundant model parameters that contribute little to the model’s performance

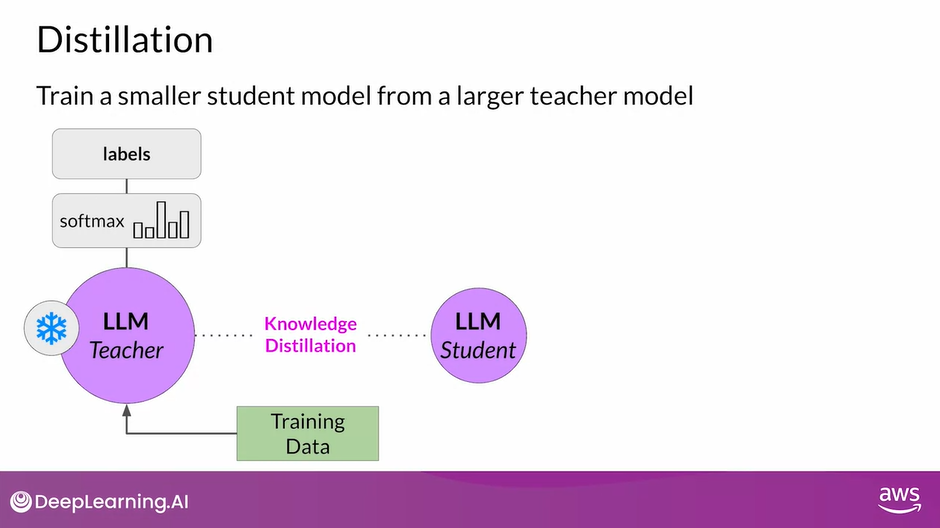

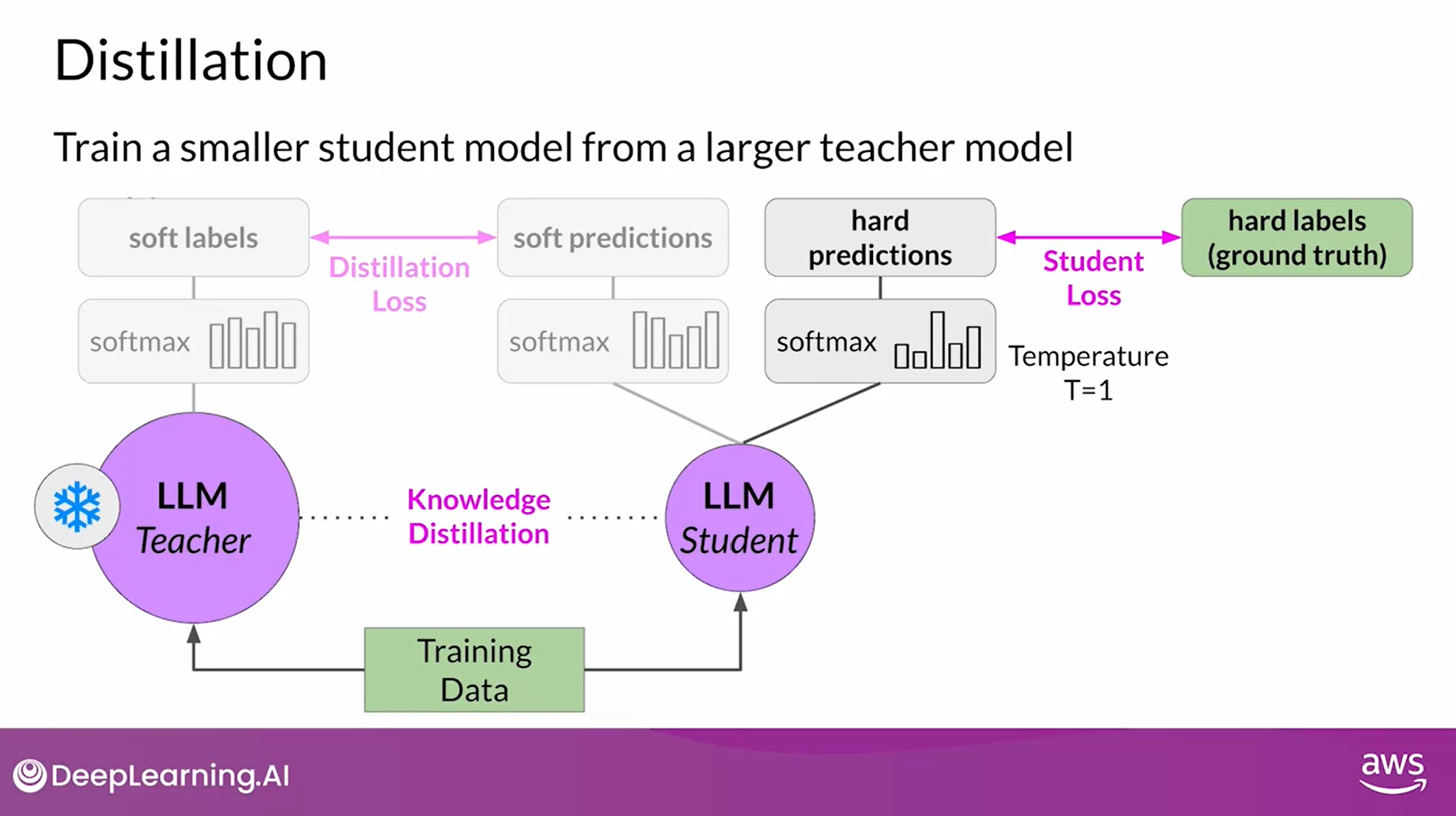

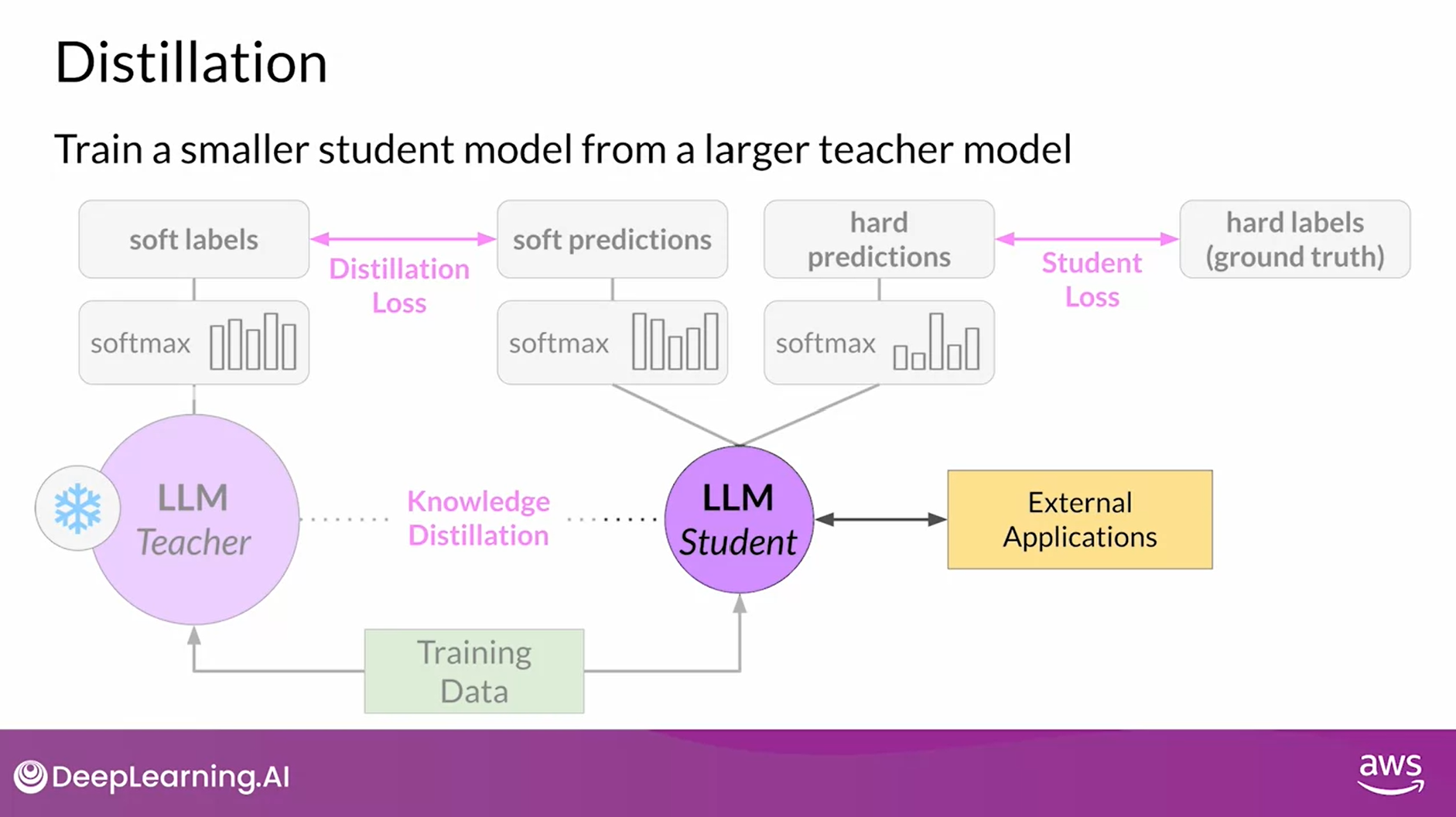

Distillation

- A technique that focuses on having a larger teacher model train a smaller student model

- The student model learns to statistically mimic the behavior of the teacher model, either just in the final prediction layer or in the model’s hidden layers as well

- You start with your fine-tuned LLM as your teacher model and create a smaller LLM for your student model

- You freeze the teacher model’s weights and use it to generate completions for your training data

- At the same time, you generate completions for the training data using your student model

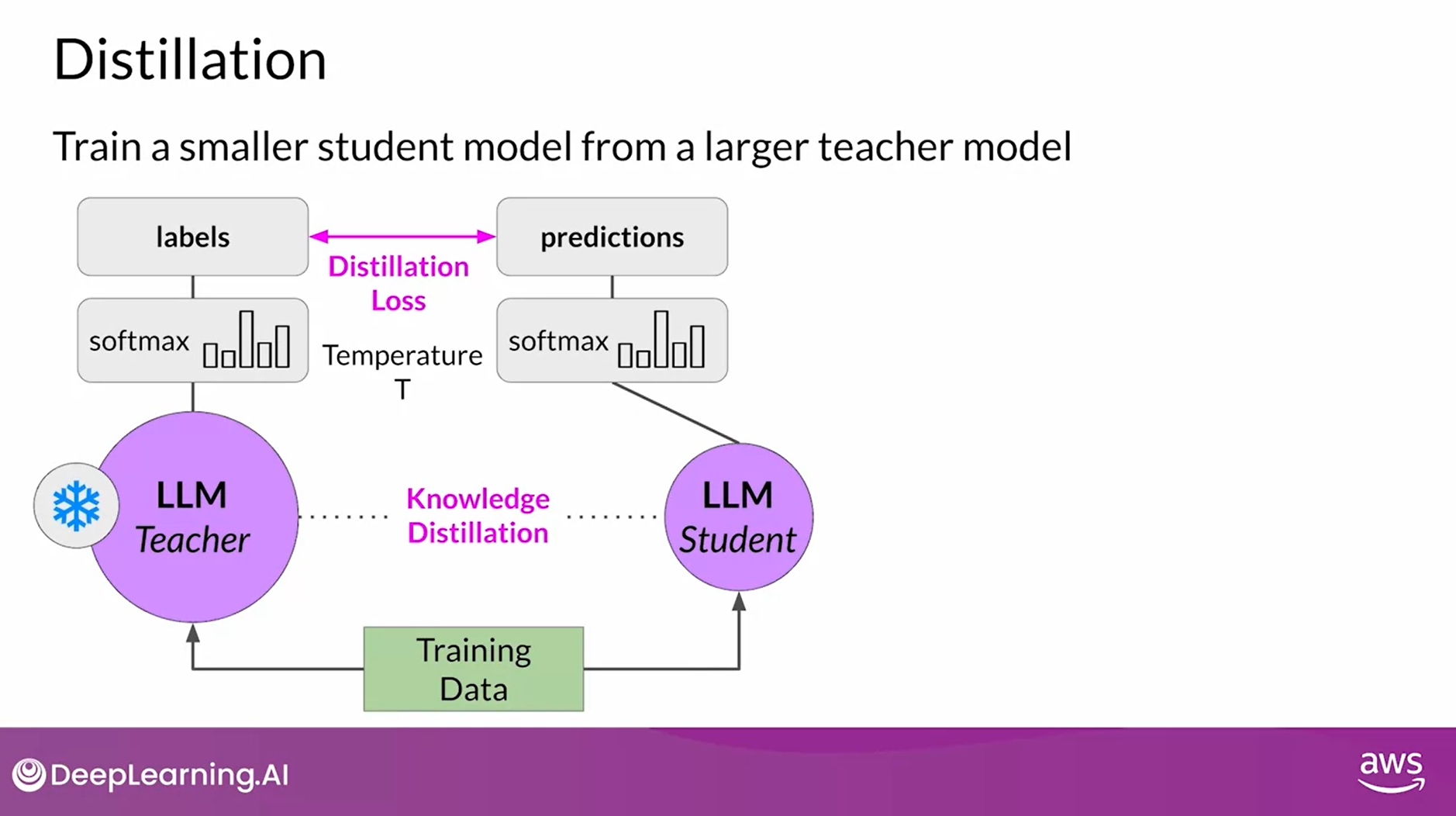

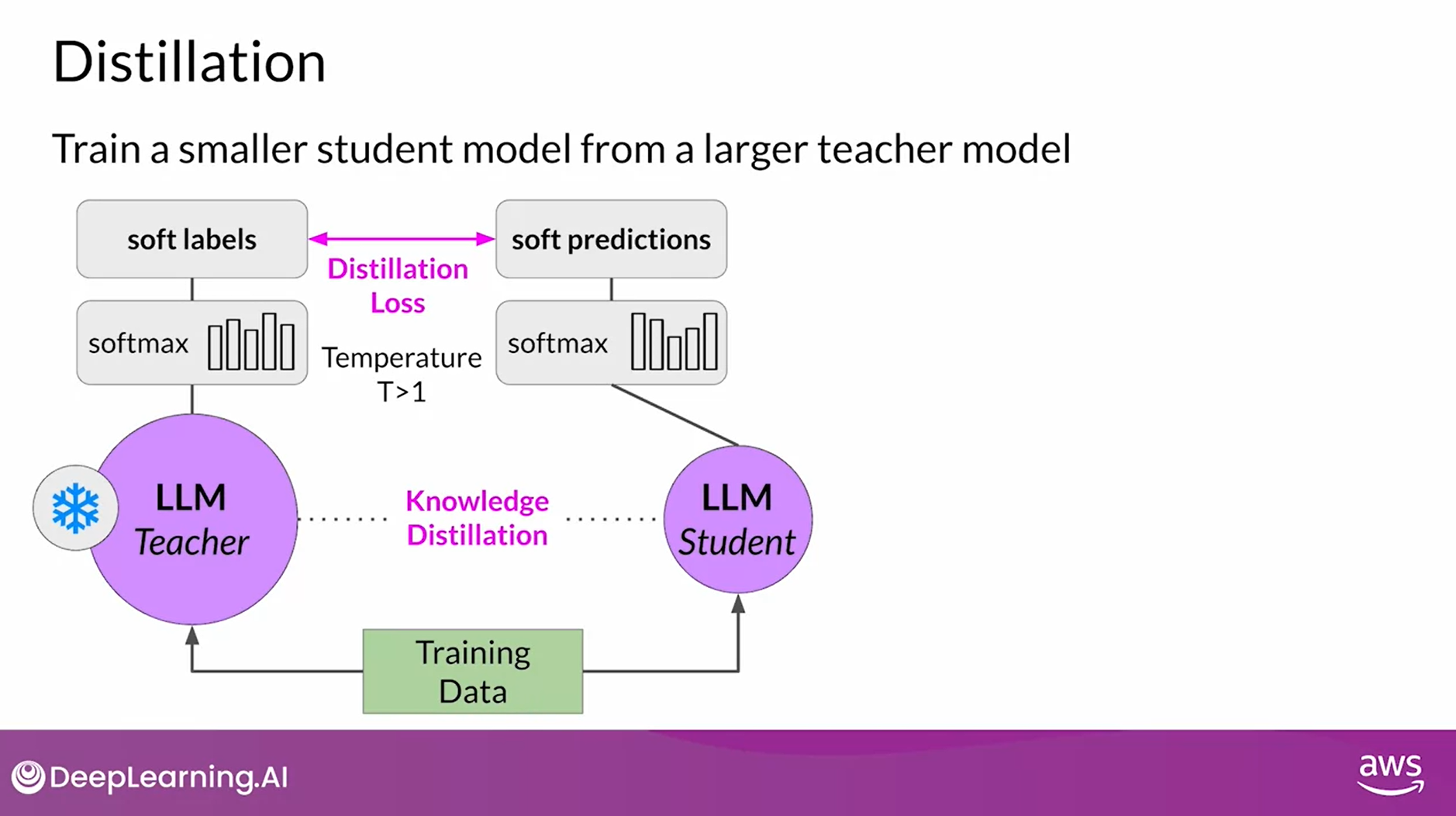

- The knowledge distillation between teacher and student model is achieved by minimizing a loss function called the distillation loss

- To calculate this loss, distillation uses the probability distribution over tokens that is produced by the teacher model’s softmax layer

- Now, the teacher model is already fine-tuned on the training data

- So the probability distribution likely closely matches the ground truth data and won’t have much variation in tokens

- That’s why Distillation applies a little trick adding a temperature parameter to the softmax function

- As you learned in lesson one, a higher temperature increases the creativity of the language the model generates

- With a temperature parameter greater than one, the probability distribution becomes broader and less strongly peaked

- This softer distribution provides you with a set of tokens that are similar to the ground truth tokens

- In the context of Distillation, the teacher model’s output is often referred to as soft labels and the student model’s predictions as soft predictions

- In parallel, you train the student model to generate the correct predictions based on your ground truth training data

- Here, you don’t vary the temperature setting and instead use the standard softmax function

- Distillation refers to the student model’s outputs as the hard predictions and to the ground truth labels as the hard labels

- The loss between these two is the student loss

- The combined distillation and student losses are used to update the weights of the student model via back propagation

- The key benefit of distillation methods is that the smaller student model can be used for inference in deployment instead of the teacher model

- In practice, distillation is not as effective for generative decoder models

- It’s typically more effective for encoder-only models, such as BERT that have a lot of representation redundancy

- Note that with Distillation, you’re training a second, smaller model to use during inference.

- You aren’t reducing the model size of the initial LLM in any way
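
The loss calculation described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the notes do not say how the two losses are combined, so the weighting factor `alpha`, the use of cross-entropy for both terms, and the toy logits are all assumptions, and a real implementation would work on tensors in a deep learning framework.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; T > 1 gives a broader, less peaked distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target_probs, predicted_probs):
    """Cross-entropy between a target distribution and a predicted distribution."""
    return -sum(t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0)

def combined_loss(teacher_logits, student_logits, true_index, temperature=2.0, alpha=0.5):
    # Distillation loss: teacher soft labels vs. student soft predictions (both with T > 1).
    soft_labels = softmax(teacher_logits, temperature)
    soft_predictions = softmax(student_logits, temperature)
    distillation_loss = cross_entropy(soft_labels, soft_predictions)

    # Student loss: ground-truth hard labels vs. student hard predictions (standard softmax).
    hard_labels = [1.0 if i == true_index else 0.0 for i in range(len(student_logits))]
    hard_predictions = softmax(student_logits)
    student_loss = cross_entropy(hard_labels, hard_predictions)

    # alpha is an assumed weighting between the two terms.
    return alpha * distillation_loss + (1 - alpha) * student_loss

teacher = [4.0, 1.0, 0.5]          # toy logits over a 3-token vocabulary
matching_student = [4.0, 1.0, 0.5]
mismatched_student = [0.5, 1.0, 4.0]
loss_close = combined_loss(teacher, matching_student, true_index=0)
loss_far = combined_loss(teacher, mismatched_student, true_index=0)
```

A student whose logits track the teacher gets the lower combined loss, which is the signal that back propagation would use to update the student’s weights.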

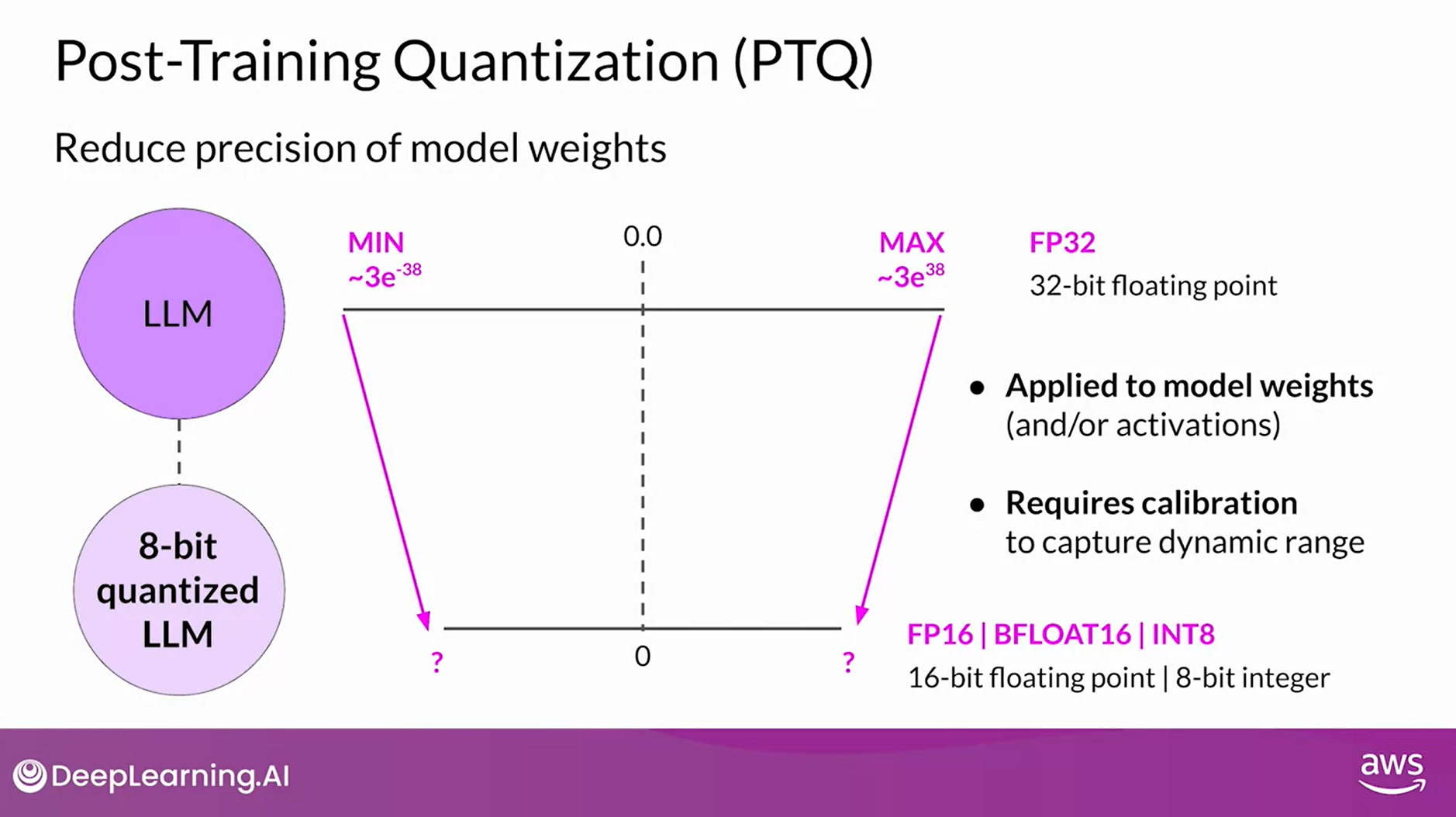

Post-Training Quantization (PTQ)

- You were introduced to the second method, quantization, back in week one in the context of training, specifically Quantization Aware Training (QAT).

- However, after a model is trained, you can perform Post Training Quantization (PTQ) to optimize it for deployment.

- PTQ transforms a model’s weights to a lower precision representation, such as 16-bit floating point or 8-bit integer.

- To reduce the model size and memory footprint, as well as the compute resources needed for model serving, quantization can be applied to just the model weights or to both weights and activation layers.

- In general, quantization approaches that include the activations can have a higher impact on model performance.

- Quantization also requires an extra calibration step to statistically capture the dynamic range of the original parameter values

- As with other methods, there are tradeoffs because sometimes Quantization results in a small percentage reduction in model evaluation metrics

- However, that reduction can often be worth the cost savings and performance gains
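
To make the weight transformation concrete, here is a minimal sketch of symmetric 8-bit quantization of a list of weights. It is an illustration rather than how any particular library does it: the single per-tensor scale, the function names, and the toy weights are assumptions, and the "calibration" here is simply taking the largest absolute value.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    # Calibration step (assumed form): capture the dynamic range of the original values.
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the 8-bit integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.003, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
# Rounding error per weight is at most half a quantization step.
errors = [abs(w - r) for w, r in zip(weights, restored)]
```

Each weight now needs one byte instead of four, and the small rounding errors are the source of the reduction in evaluation metrics mentioned above.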

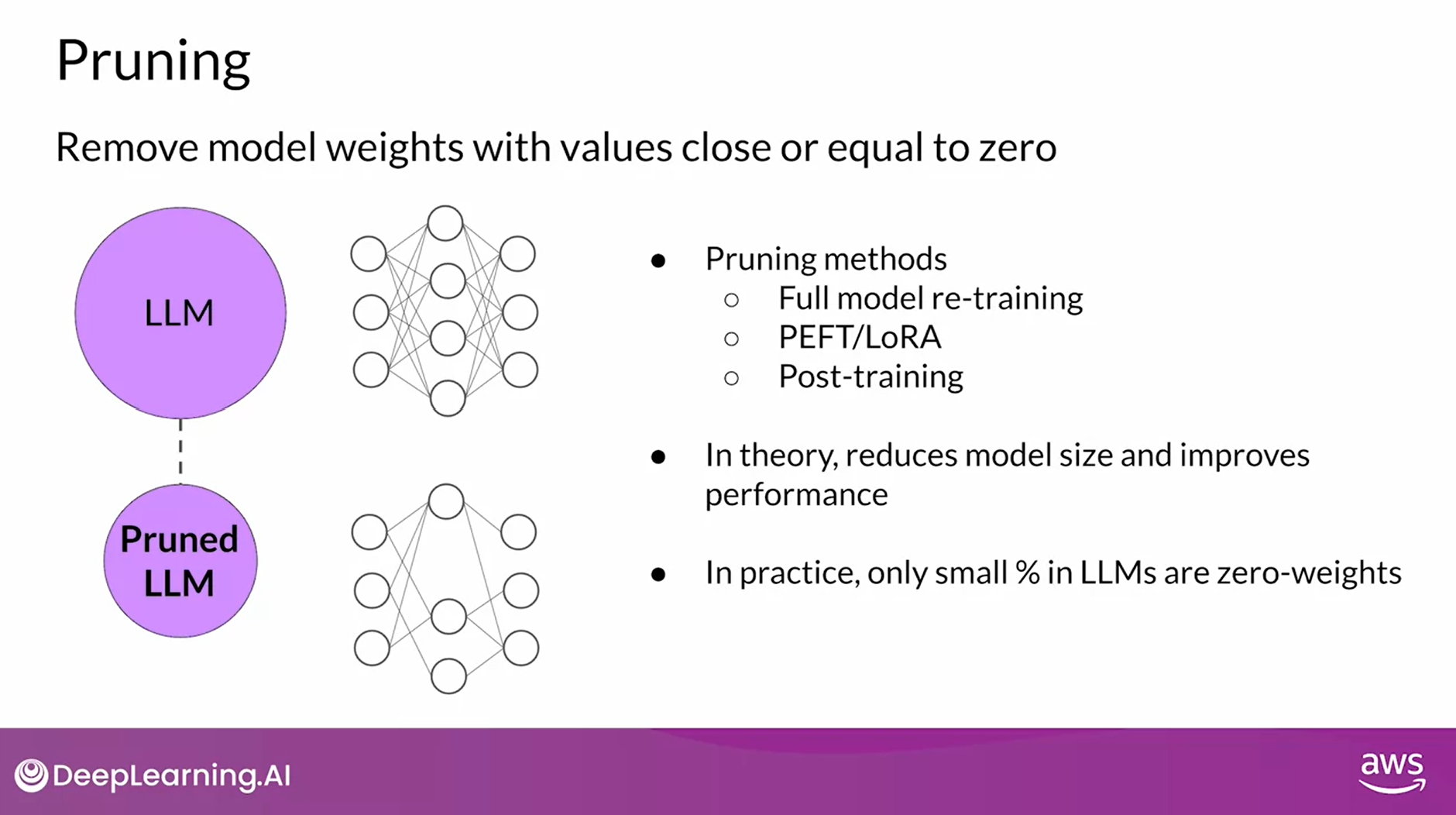

Pruning

- At a high level, the goal is to reduce model size for inference by eliminating weights that are not contributing much to overall model performance

- These are the weights with values very close to or equal to zero

- Note that some pruning methods require full retraining of the model, while others fall into the category of parameter efficient fine-tuning, such as LoRA.

- There are also methods that focus on Post-training Pruning

- In theory, this reduces the size of the model and improves performance

- In practice, however, there may not be much impact on the size and performance if only a small percentage of the model weights are close to zero

- Quantization, Distillation and Pruning all aim to reduce model size and improve inference performance while limiting the impact on accuracy

- Optimizing your model for deployment will help ensure that your application functions well and provides your users with the best possible experience
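
As an illustration of the pruning idea, the sketch below zeroes out weights whose magnitude falls under a threshold and reports the resulting sparsity. The threshold value, function name, and toy weights are assumptions made for this example, and real pruning methods are considerably more involved.

```python
def prune_by_magnitude(weights, threshold=1e-2):
    """Set weights with magnitude below the threshold to exactly zero."""
    pruned = [0.0 if abs(w) < threshold else w for w in weights]
    sparsity = sum(1 for w in pruned if w == 0.0) / len(pruned)
    return pruned, sparsity

weights = [0.8, 0.001, -0.5, 0.0, -0.004, 0.3]
pruned, sparsity = prune_by_magnitude(weights)
```

If only a small fraction of the weights falls under the threshold, the sparsity stays low, which matches the caveat above that pruning may have little effect on size and performance.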

Generative AI Project Lifecycle Cheat Sheet

Pre-training

- Pre-training a large language model can be a huge effort. This stage is the most complex you’ll face because of

- the model architecture decisions,

- the large amount of training data required, and

- the expertise needed.

- Remember though, that in general, you will start your development work with an existing foundation model. You’ll probably be able to skip this stage.

Prompt Engineering

- If you’re working with a foundation model, you’ll likely start to assess the model’s performance through prompt engineering, which requires less technical expertise, and no additional training of the model.

Prompt Tuning and Fine-tuning

- If your model isn’t performing as you need, you’ll next think about prompt tuning and fine tuning. Depending on your use case, performance goals, and compute budget, the methods you’ll try could range from full fine-tuning to parameter efficient fine tuning techniques like LoRA or prompt tuning. Some level of technical expertise is required for this work. But since fine-tuning can be very successful with a relatively small training dataset, this phase could potentially be completed in a single day.

Reinforcement Learning

- Aligning your model using Reinforcement Learning from Human Feedback (RLHF) can be done quickly, once you have your trained reward model. You’ll likely see if you can use an existing reward model for this work, as you saw in this week’s lab. However, if you have to train a reward model from scratch, it could take a long time because of the effort involved to gather human feedback.

Compression/Optimization/Deployment

- Finally, optimization techniques typically fall in the middle in terms of complexity and effort, but can proceed quite quickly assuming the changes to the model don’t impact performance too much. After working through all of these steps, you have hopefully trained and tuned a great LLM that is working well for your specific use case, and is optimized for deployment.

Using the LLM in Applications

- There are some broader challenges with large language models that can’t be solved by training alone

- Problems

- Information may be out of date

- Struggle with complex math problems

- Hallucination

- Learn about some techniques that you can use to help your LLM overcome these issues by connecting to external data sources and applications

- Your application must manage the passing of user input to the large language model and the return of completions

- This is often done through some type of orchestration library

- This layer can enable some powerful technologies that augment and enhance the performance of the LLM at runtime

- By providing access to external data sources or connecting to existing APIs of other applications

- One implementation example is LangChain

Let’s start by considering how to connect LLMs to external data sources

Retrieval Augmented Generation

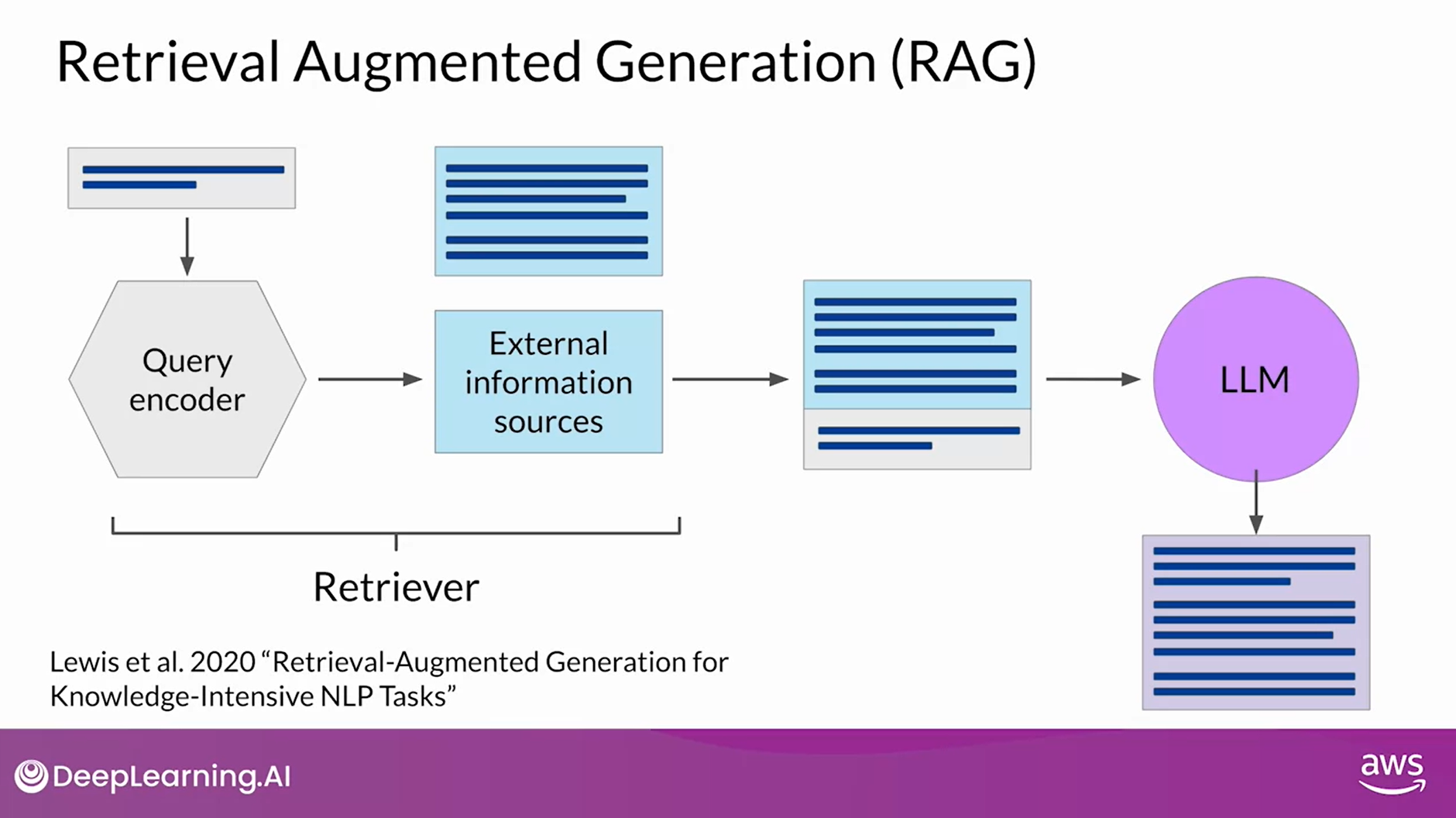

- Retrieval Augmented Generation (RAG) is a framework for building LLM powered systems that make use of external data sources and applications to overcome some of the limitations of these models.

- RAG is a great way to overcome the knowledge cutoff issue and help the model update its understanding of the world.

- While you could retrain the model on new data, this would quickly become very expensive and require repeated retraining to regularly update the model with new knowledge.

- A more flexible and less expensive way to overcome knowledge cutoffs is to give your model access to additional external data at inference time.

- RAG is useful in any case where you want the language model to have access to data that it may not have seen.

- This could be new information documents not included in the original training data, or proprietary knowledge stored in your organization’s private databases.

- Providing your model with external information can improve both the relevance and accuracy of its completions

- Retrieval Augmented Generation isn’t a specific set of technologies, but rather a framework for providing LLMs access to data they did not see during training.

- A number of different implementations exist, and the one you choose will depend on the details of your task and the format of the data you have to work with.

- Here you’ll walk through the implementation discussed in one of the earliest papers on RAG by researchers at Facebook, originally published in 2020.

- At the heart of this implementation is a model component called the Retriever, which consists of a query encoder and an external data source.

- The encoder takes the user’s input prompt and encodes it into a form that can be used to query the data source.

- In the Facebook paper, the external data is a vector store, which we’ll discuss in more detail shortly. But it could instead be a SQL database, CSV files, or other data storage format.

- These two components are trained together to find documents within the external data that are most relevant to the input query.

- The Retriever returns the best single or group of documents from the data source and combines the new information with the original user query.

- The new expanded prompt is then passed to the language model, which generates a completion that makes use of the data
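
The retrieve-then-augment flow described above can be sketched as follows. This is a toy under loud assumptions: the Retriever in the Facebook paper uses a trained encoder, whereas here a simple shared-word count stands in for it, and the documents, names, and prompt layout are invented for illustration.

```python
import re

def encode(text):
    """Toy encoder: the set of lowercase word tokens (stand-in for a trained query encoder)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=1):
    """Return the k documents that share the most tokens with the query."""
    query_tokens = encode(query)
    ranked = sorted(documents, key=lambda d: len(query_tokens & encode(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents):
    """Combine the retrieved text with the original user query into an expanded prompt."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical corpus, invented for this example.
documents = [
    "case 1001 plaintiff alice smith filed a complaint about a lease",
    "case 2002 plaintiff bob jones filed a complaint about a patent",
    "office holiday schedule for the legal department",
]
query = "Who is the plaintiff in case 2002?"
expanded_prompt = build_prompt(query, documents)
```

The expanded prompt is what would be passed to the language model, so the completion can draw on the retrieved text rather than only on what the model saw during training.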

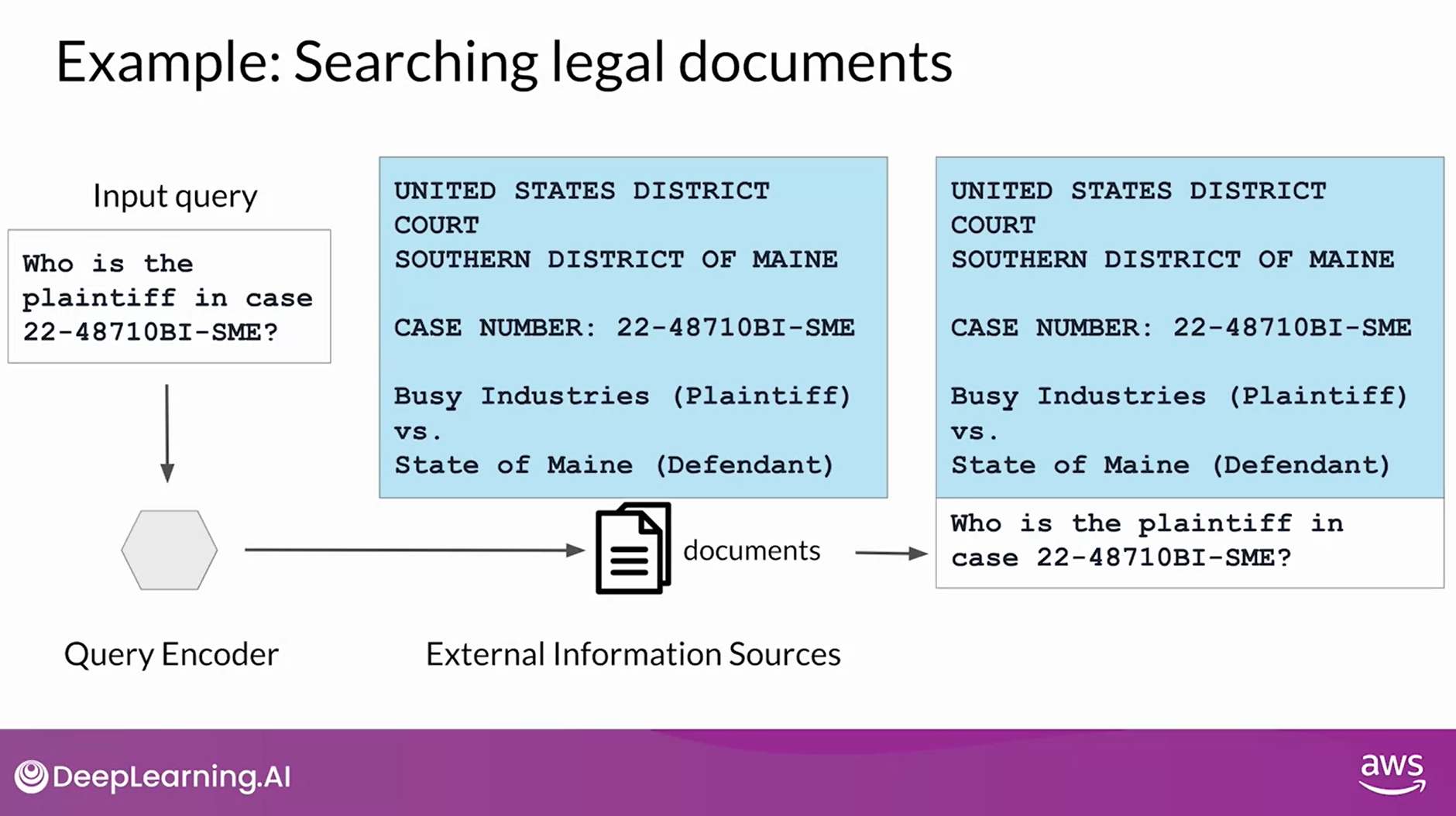

RAG Example: Searching Legal Documents

- Imagine you are a lawyer using a large language model to help you in the discovery phase of a case.

- A RAG architecture can help you ask questions of a corpus of documents, for example, previous court filings.

- Here you ask the model about the plaintiff named in a specific case number

- The prompt is passed to the query encoder, which encodes the data in the same format as the external documents.

- And then searches for a relevant entry in the corpus of documents.

- Having found a piece of text that contains the requested information, the Retriever then combines the new text with the original prompt.

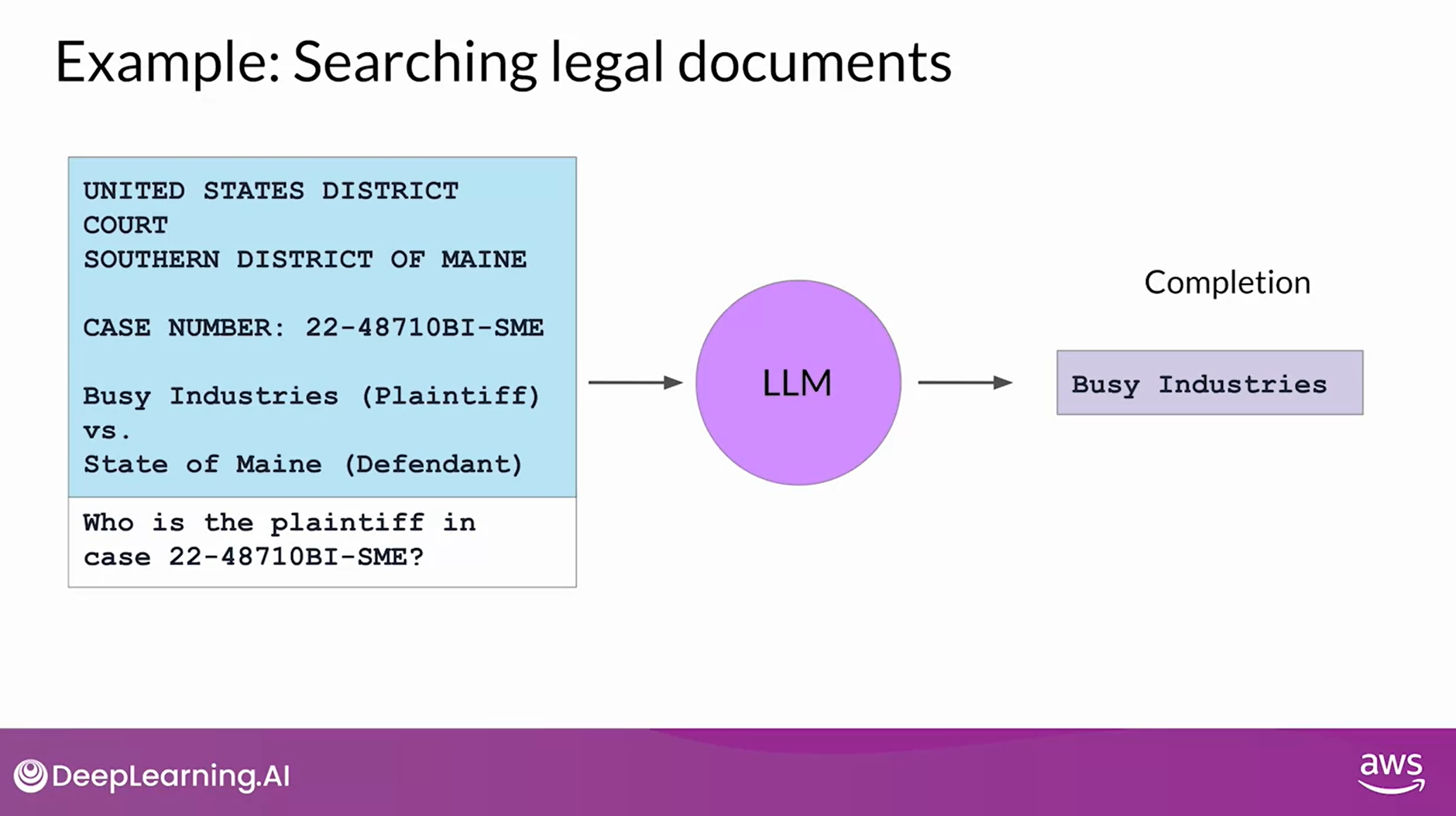

- The expanded prompt that now contains information about the specific case of interest is then passed to the LLM.

- The model uses the information in the context of the prompt to generate a completion that contains the correct answer.

- The use case you have seen here is quite simple and only returns a single piece of information that could be found by other means

- But imagine the power of RAG to be able to generate summaries of filings or identify specific people, places and organizations within the full corpus of the legal documents.

- Allowing the model to access information contained in this external data set greatly increases its utility for this specific use case

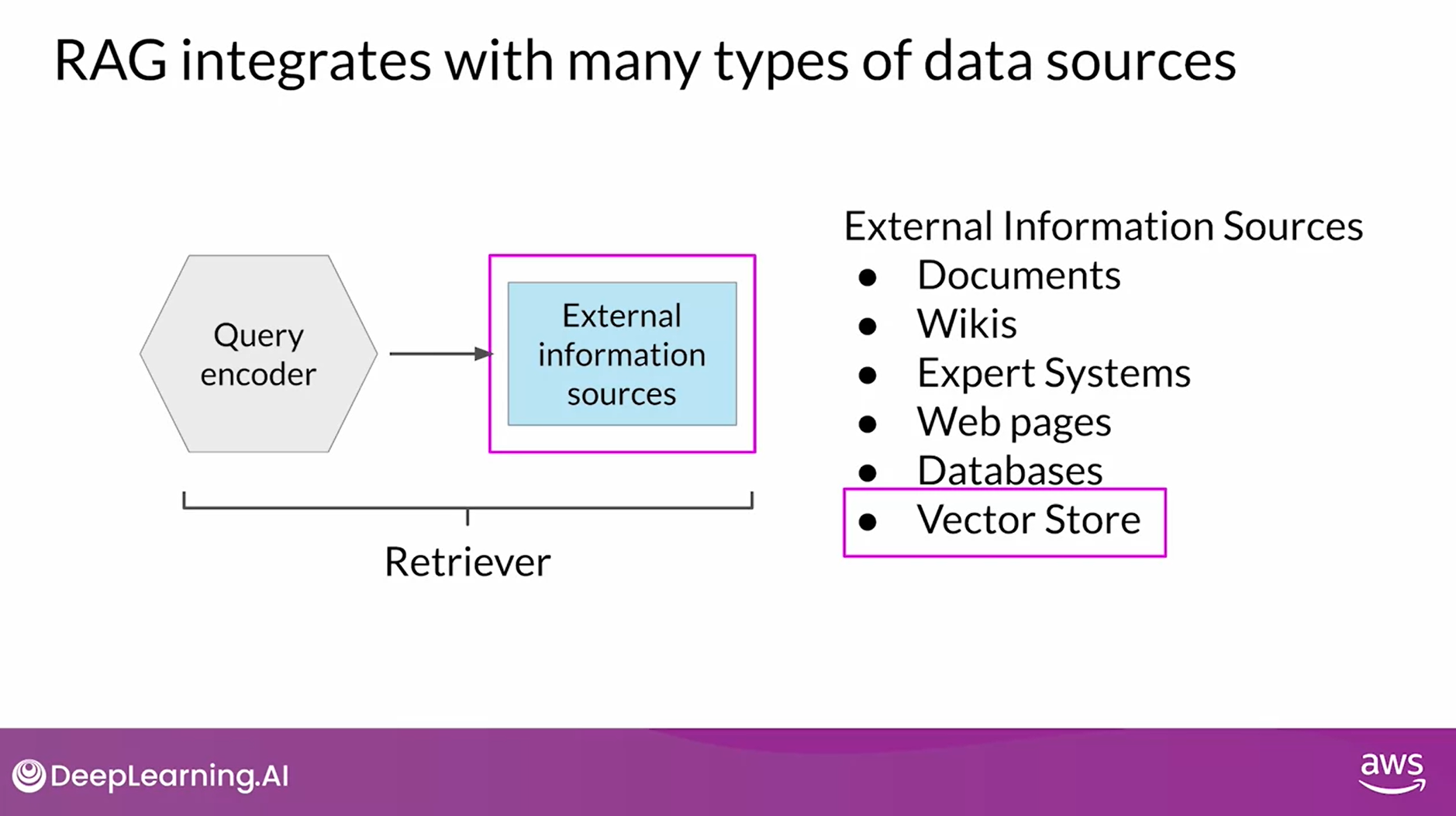

RAG Integrates with Many Types of Data Sources

- In addition to overcoming knowledge cutoffs, RAG also helps you avoid the problem of the model hallucinating when it doesn’t know the answer

- RAG architectures can be used to integrate multiple types of external information sources.

- You can augment large language models with access to local documents, including private wikis and expert systems.

- RAG can also enable access to the Internet to extract information posted on web pages, for example, Wikipedia.

- By encoding the user input prompt as a SQL query, RAG can also interact with databases.

- Another important data storage strategy is a Vector Store, which contains vector representations of text.

- This is a particularly useful data format for language models, since internally they work with vector representations of language to generate text.

- Vector stores enable a fast and efficient kind of relevant search based on similarity

Note: implementing RAG is a little more complicated than simply adding text into the LLM

Data Preparation

- Data must fit inside context window

- Most text sources are too long to fit into the limited context window of the model, which is still at most just a few thousand tokens.

- Instead, the external data sources are chopped up into many chunks, each of which will fit in the context window.

- Packages like LangChain can handle this work for you

- Data must be in format that allows its relevance to be assessed at inference time - embedding vectors

- Recall that LLMs don’t work directly with text, but instead create vector representations of each token in an embedding space.

- These embedding vectors allow the LLM to identify semantically related words through measures such as cosine similarity

- RAG methods take the small chunks of external data and process them through the large language model, to create embedding vectors for each.

- These new representations of the data can be stored in structures called vector stores, which allow for fast searching of datasets and efficient identification of semantically related text
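
The two preparation steps above, chunking and similarity comparison, can be sketched like this. The sketch counts whitespace-separated words as a rough stand-in for tokens and adds an overlap between chunks; both choices, along with the function names, are assumptions for illustration and not how LangChain or any specific package implements it.

```python
import math

def chunk_text(text, max_tokens=50, overlap=10):
    """Split text into word-based chunks that each fit a (pretend) context window."""
    if overlap >= max_tokens:
        raise ValueError("overlap must be smaller than max_tokens")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

long_text = " ".join(f"w{i}" for i in range(120))  # a 120-word placeholder document
chunks = chunk_text(long_text, max_tokens=50, overlap=10)
```

In a real pipeline each chunk would be passed through an embedding model, and `cosine_similarity` would compare the query embedding with each chunk embedding.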

Vector Database Search

- Vector databases are a particular implementation of a vector store where each vector is also identified by a key.

- This can allow, for instance, the text generated by RAG to also include a citation for the document from which it was retrieved

- You’ve seen how access to external data sources can help a model overcome limits to its internal knowledge.

- By providing up to date relevant information and avoiding hallucinations, you can greatly improve the experience of using your application for your users.

- Next, we’ll explore a technique that can improve a model’s ability to reason and make plans, which are important steps when using an LLM to power an application
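
To illustrate how a key per vector makes citations possible, here is a minimal in-memory sketch. The class name, the key format, the three-dimensional embeddings, and the stored texts are all hypothetical; production vector databases use approximate nearest-neighbor indexes instead of the exhaustive scan shown here.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

class TinyVectorDB:
    """Stores (embedding, text) pairs, each identified by a key."""

    def __init__(self):
        self._rows = {}  # key -> (vector, text)

    def add(self, key, vector, text):
        self._rows[key] = (vector, text)

    def search(self, query_vector, k=1):
        """Return the k most similar entries as (key, text) pairs."""
        scored = [
            (cosine_similarity(query_vector, vector), key, text)
            for key, (vector, text) in self._rows.items()
        ]
        scored.sort(reverse=True)
        return [(key, text) for _, key, text in scored[:k]]

db = TinyVectorDB()
# Hand-made embeddings, invented for this example.
db.add("filing-001#chunk3", [0.9, 0.1, 0.0], "The plaintiff filed the complaint in March.")
db.add("memo-007#chunk1", [0.0, 0.2, 0.9], "The office closes early on Fridays.")

key, text = db.search([1.0, 0.0, 0.1])[0]
cited_context = f"{text} [source: {key}]"
```

Because the key travels with the retrieved text, the application can append it to the completion as a citation.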

Question: How does Retrieval Augmented Generation (RAG) enhance generation-based models?

By making external knowledge available to the model

Correct

The retriever component retrieves relevant information from an external corpus or knowledge base, which is then used by the model to generate more informed and contextually relevant responses. This incorporation of external knowledge enhances the quality and relevance of the generated content.

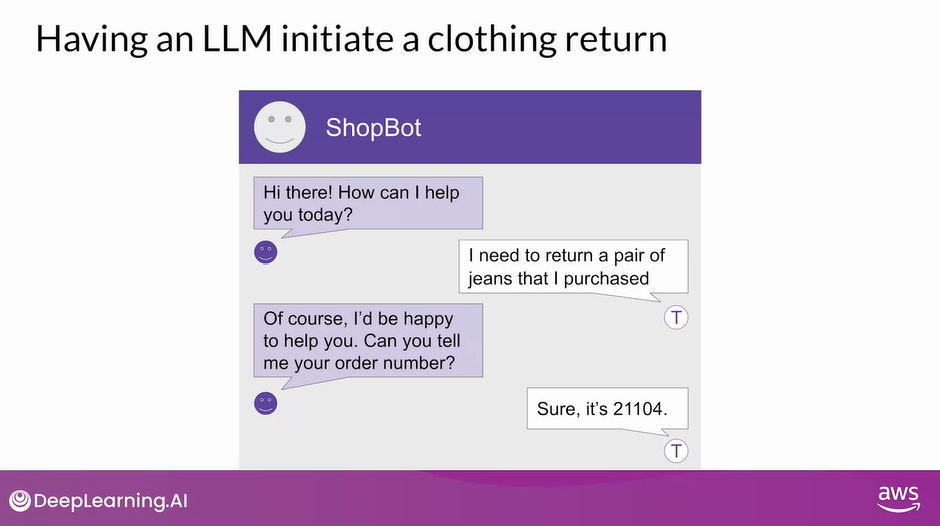

Interacting with External Applications

- In the previous section, you saw how LLMs can interact with external datasets.

- Now let’s take a look at how they can interact with external applications.

- To motivate the types of problems and use cases that require this kind of augmentation of the LLM, you’ll revisit the customer service bot example you saw earlier in the course.

- During this walkthrough of one customer’s interaction with ShopBot, you’ll take a look at the integrations that you’d need to allow the app to process a return request from end to end.

- In this conversation, the customer has expressed that they want to return some jeans that they purchased.

- ShopBot responds by asking for the order number, which the customer then provides

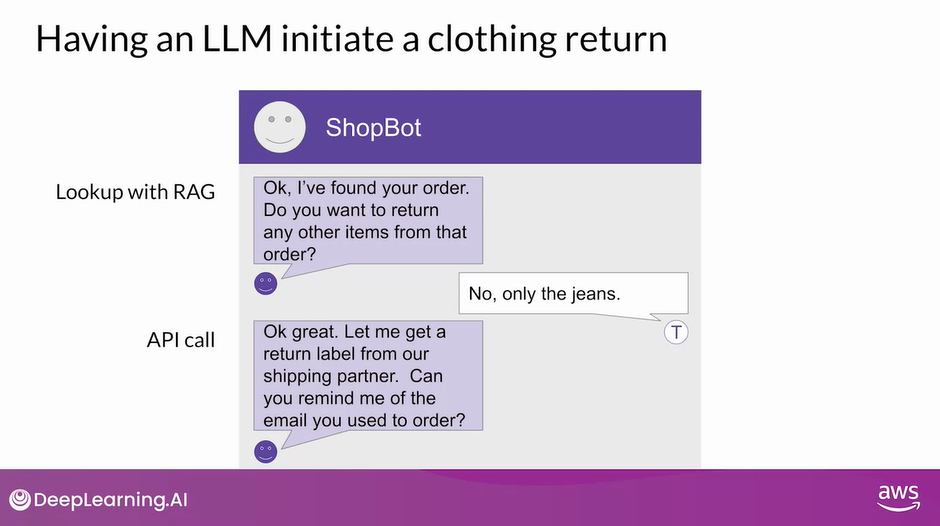

- ShopBot then looks up the order number in the transaction database.

- One way it could do this is by using a RAG implementation of the kind you saw earlier.

- In this case here, you would likely be retrieving data through a SQL query to a back-end order database rather than retrieving data from a corpus of documents.

- Once ShopBot has retrieved the customer’s order, the next step is to confirm the items that will be returned.

- The bot asks the customer if they’d like to return anything other than the jeans.

- After the user states their answer, the bot initiates a request to the company’s shipping partner for a return label.

- The bot uses the shipper’s Python API to request the label. ShopBot is going to email the shipping label to the customer.

- It also asks them to confirm their email address

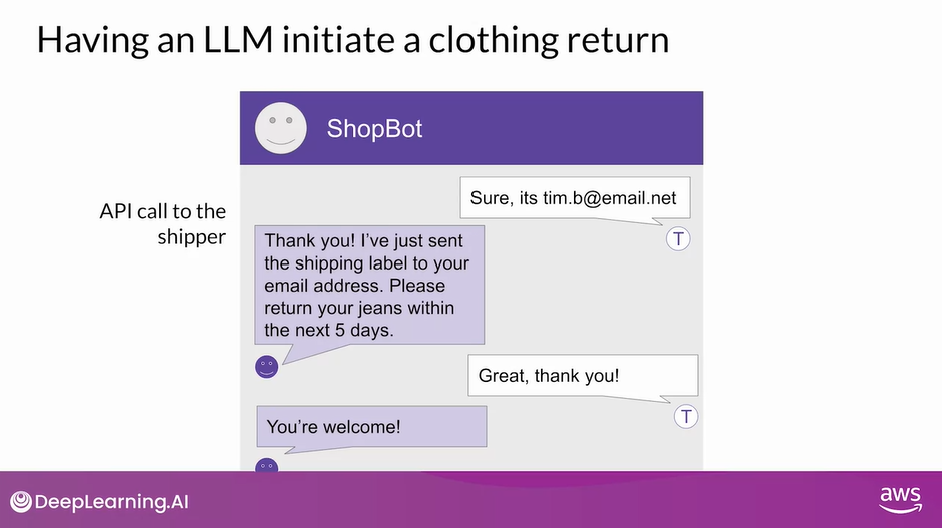

- The customer responds with their email address and the bot includes this information in the API call to the shipper.

- Once the API request is completed, the bot lets the customer know that the label has been sent by email, and the conversation comes to an end.

- This short example illustrates just one possible set of interactions that you might need an LLM to be capable of to power an application

LLM-powered Applications

- In general, connecting LLMs to external applications allows the model to interact with the broader world, extending their utility beyond language tasks.

- As the ShopBot example showed, LLMs can be used to trigger actions when given the ability to interact with APIs.

- LLMs can also connect to other programming resources.

- For example, a Python interpreter that can enable models to incorporate accurate calculations into their outputs.

- It’s important to note that prompts and completions are at the very heart of these workflows.

- The actions that the app will take in response to user requests will be determined by the LLM, which serves as the application’s reasoning engine

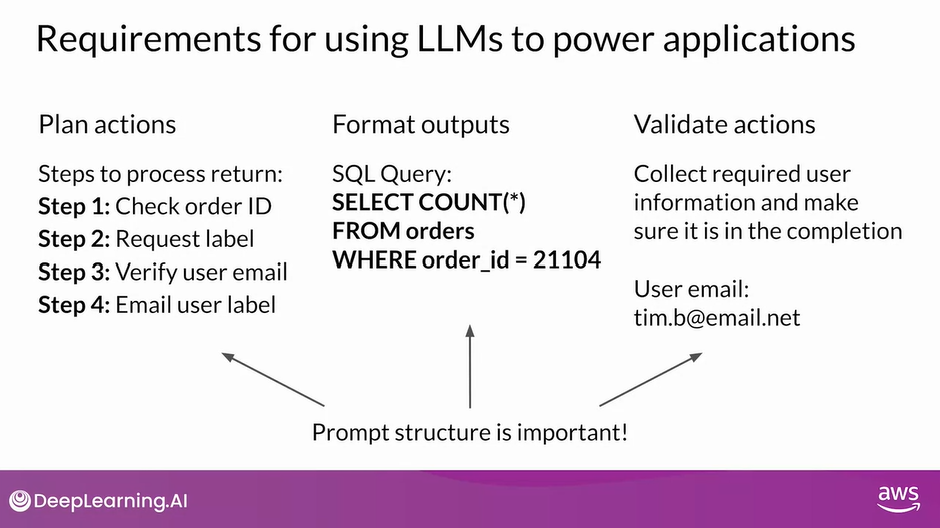

Requirements for Using LLMs to Power Applications

- In order to trigger actions, the completions generated by the LLM must contain certain important information.

- First, the model needs to be able to generate a set of instructions so that the application knows what actions to take.

- These instructions need to be understandable and correspond to allowed actions

- In the ShopBot example for instance, the important steps were:

- checking the order ID,

- requesting a shipping label,

- verifying the user email, and

- emailing the user the label.

- Second, the completion needs to be formatted in a way that the broader application can understand. This could be as simple as a specific sentence structure or as complex as writing a script in Python or generating a SQL command.

- For example, the model could generate a SQL query that determines whether an order is present in the database of all orders

- Lastly, the model may need to collect information that allows it to validate an action.

- For example, in the ShopBot conversation, the application needed to verify the email address the customer used to make the original order.

- Any information that is required for validation needs to be obtained from the user and contained in the completion so it can be passed through to the application.

- Structuring the prompts in the correct way is important for all of these tasks and can make a huge difference in the quality of a plan generated or the adherence to a desired output format specification
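
The requirements above can be sketched with an application that accepts a structured completion, checks it against the allowed actions, and runs an order lookup. Everything specific here is an assumption for illustration: the JSON completion format, the action names, the `orders` table schema, and the sample order are invented, and the completion is hard-coded where a real application would call the LLM.

```python
import json
import sqlite3

# Assumed action names, loosely following the ShopBot steps listed above.
ALLOWED_ACTIONS = {"check_order", "request_shipping_label", "verify_email", "email_label"}

def parse_completion(completion):
    """Parse a JSON completion and reject anything outside the allowed actions."""
    request = json.loads(completion)
    if request.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"action not allowed: {request.get('action')!r}")
    return request

def order_exists(conn, order_id):
    """Run a parameterized SQL query to check whether the order is in the database."""
    row = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE order_id = ?", (order_id,)
    ).fetchone()
    return row[0] > 0

# In-memory database with one hypothetical order.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO orders VALUES (21104, 'customer@example.com')")

# Stand-in for a completion generated by the LLM.
completion = '{"action": "check_order", "order_id": 21104}'
request = parse_completion(completion)
found = order_exists(conn, request["order_id"])
```

Passing the order ID as a query parameter, instead of executing SQL text written by the model, is one way to keep the completion within the set of allowed actions.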

Helping LLMs Reason and Plan with Chain of Thought (CoT)

- It is important that LLMs can reason through the steps that an application must take, to satisfy a user request.

- Unfortunately, complex reasoning can be challenging for LLMs, especially for problems that involve multiple steps or mathematics.

- These problems exist even in large models that show good performance at many other tasks

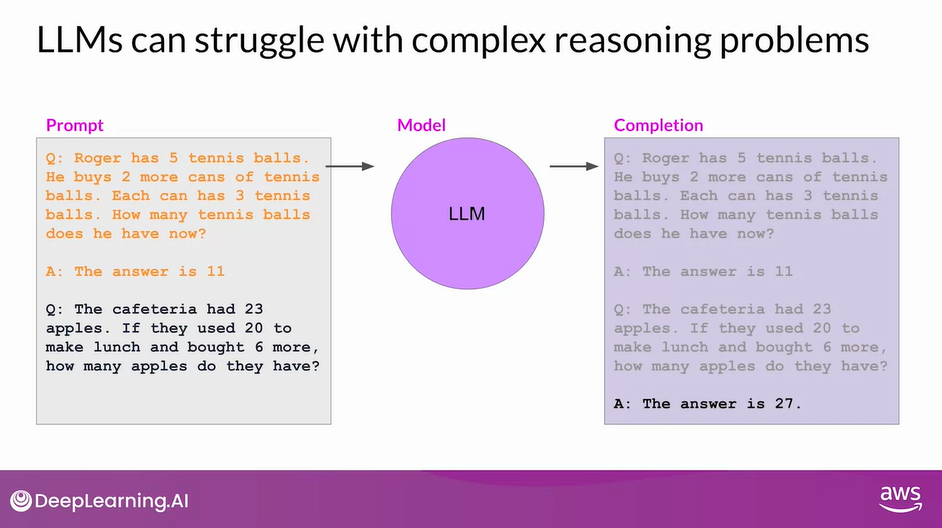

LLMs Can Struggle with Complex Reasoning Problems

- Here’s one example where an LLM has difficulty completing the task.

- You’re asking the model to solve a simple multi-step math problem, to determine how many apples a cafeteria has after using some to make lunch, and then buying some more.

- Your prompt includes a similar example problem, complete with the solution, to help the model understand the task through one-shot inference.

- If you like, you can pause the video here for a moment and solve the problem yourself.

- After processing the prompt, the model generates the completion shown here, stating that the answer is 27.

- This answer is incorrect, as you will find if you solve the problem yourself.

- The cafeteria actually only has nine apples remaining

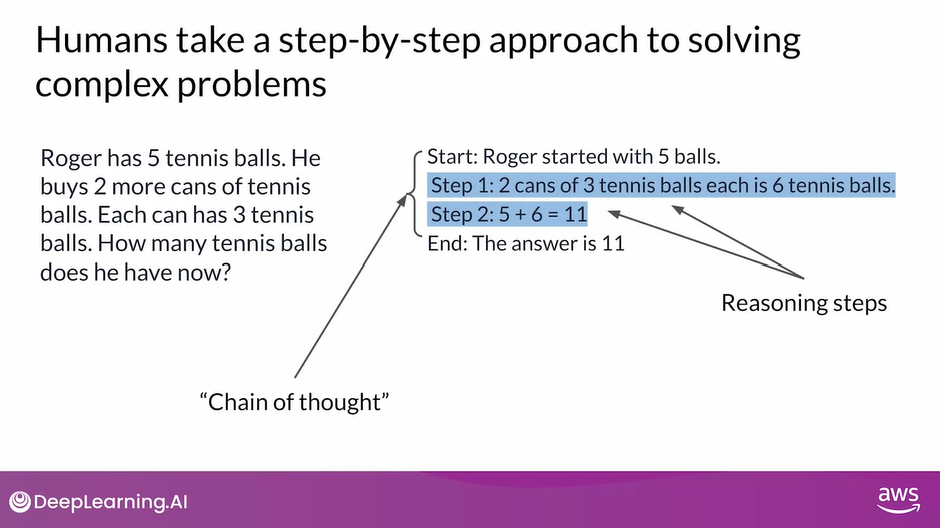

- Researchers have been exploring ways to improve the performance of large language models on reasoning tasks, like the one you just saw.

- One strategy that has demonstrated some success is prompting the model to think more like a human, by breaking the problem down into steps.

- What do I mean by thinking more like a human?

- Well, here is the one-shot example problem from the prompt on the previous slide.

- The task here is to calculate how many tennis balls Roger has after buying some new ones.

- One way that a human might tackle this problem is as follows.

- Begin by determining the number of tennis balls Roger has at the start.

- Then note that Roger buys two cans of tennis balls. Each can contains three balls, so he has a total of six new tennis balls.

- Next, add these 6 new balls to the original 5, for a total of 11 balls.

- Then finish by stating the answer.

- These intermediate calculations form the reasoning steps that a human might take, and the full sequence of steps illustrates the chain of thought that went into solving the problem

Asking the model to mimic this behavior is known as Chain-of-Thought (CoT) prompting.

It works by including a series of intermediate reasoning steps into any examples that you use for one or few-shot inference.

By structuring the examples in this way, you’re essentially teaching the model how to reason through the task to reach a solution
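
A chain-of-thought prompt of this kind can be assembled as shown in the sketch below. The Roger example follows the reasoning steps described above; the exact wording of the prompt and the numbers in the apples question (23 apples, 20 used, 6 bought) are assumptions chosen so that the correct answer is nine, as stated in these notes.

```python
def build_cot_prompt(example_question, example_reasoning, example_answer, new_question):
    """One-shot prompt whose worked example includes the intermediate reasoning steps."""
    return (
        f"Q: {example_question}\n"
        f"A: {example_reasoning} The answer is {example_answer}.\n\n"
        f"Q: {new_question}\n"
        f"A:"
    )

prompt = build_cot_prompt(
    example_question=(
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?"
    ),
    example_reasoning=(
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
        "5 + 6 = 11."
    ),
    example_answer=11,
    # Assumed numbers: 23 - 20 + 6 = 9.
    new_question=(
        "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
        "how many apples do they have?"
    ),
)
```

The prompt ends with an open `A:` so that the model continues with its own reasoning steps before stating an answer.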

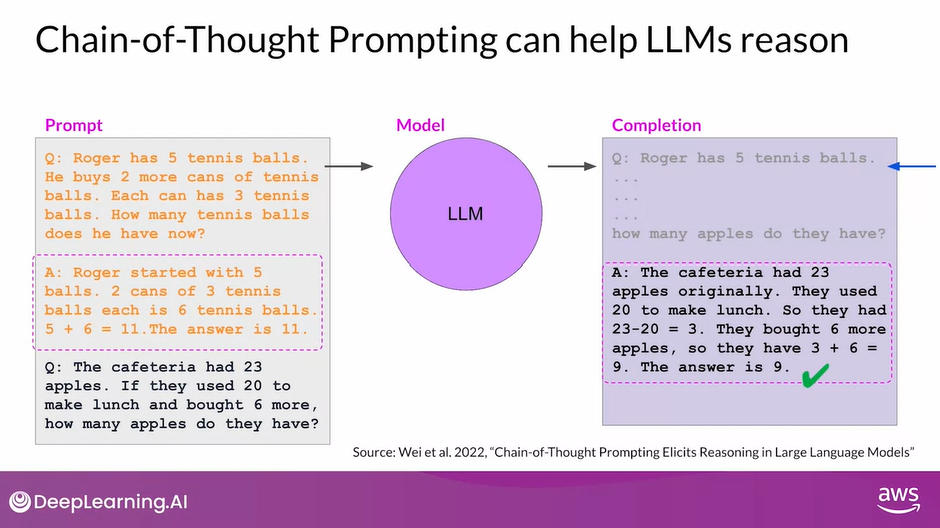

Here’s the same apples problem you saw a couple of slides ago, now reworked as a chain of thought prompt.

- The story of Roger buying the tennis balls is still used as the one-shot example.

- But this time you include intermediate reasoning steps in the solution text.

- These steps are basically equivalent to the ones a human might take, that you saw just a few minutes ago.

- You then send this chain of thought prompt to the large language model, which generates a completion.

- Notice that the model has now produced a more robust and transparent response that explains its reasoning steps, following a similar structure as the one-shot example.

- The model now correctly determines that nine apples are left.

- Thinking through the problem has helped the model come to the correct answer.

- One thing to note is that while the input prompt is shown here in a condensed format to save space, the entire prompt is actually included in the output

- You can use chain of thought prompting to help LLMs improve their reasoning on other types of problems too, in addition to arithmetic

- Here’s an example of a simple physics problem, where the model is being asked to determine if a gold ring would sink to the bottom of a swimming pool.

- The chain of thought prompt included as the one-shot example here shows the model how to work through this problem, by reasoning that a pear would float because it’s less dense than water.

- When you pass this prompt to the LLM, it generates a similarly structured completion.

- The model correctly identifies the density of gold, which it learned from its training data, and then reasons that the ring would sink because gold is much more dense than water

- Chain-of-Thought prompting is a powerful technique that improves the ability of your model to reason through problems.

- While this can greatly improve the performance of your model, the limited math skills of LLMs can still cause problems if your task requires accurate calculations, like totaling sales on an e-commerce site, calculating tax, or applying a discount

- In the next lesson, you’ll explore a technique that can help you overcome this problem, by letting your LLM talk to a program that is much better at math

Program-aided Language (PAL) Models

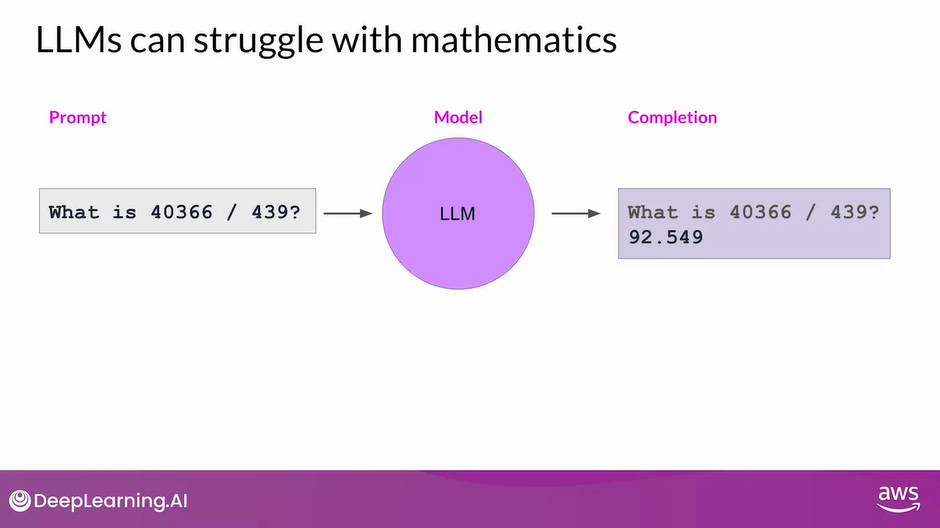

- The ability of LLMs to carry out arithmetic and other mathematical operations is limited.

- While you can try using Chain-of-Thought (CoT) prompting to overcome this, it will only get you so far.

- Even if the model correctly reasons through a problem, it may still get the individual math operations wrong, especially with larger numbers or complex operations

LLMs Can Struggle with Mathematics

- Here’s the example you saw earlier where the LLM tries to act like a calculator but gets the answer wrong.

- Remember, the model isn’t actually doing any real math here.

- It is simply trying to predict the most probable tokens that complete the prompt.

- The model getting math wrong can have many negative consequences depending on your use case, like charging customers the wrong total or getting the measurements for a recipe incorrect

PAL Models

- You can overcome this limitation by allowing your model to interact with external applications that are good at math, like a Python interpreter.

- One interesting framework for augmenting LLMs in this way is called program-aided language models, or PAL for short.

- This work, first presented by Luyu Gao and collaborators at Carnegie Mellon University in 2022, pairs an LLM with an external code interpreter to carry out calculations.

- The method makes use of Chain-of-Thought (CoT) prompting to generate executable Python scripts.

- The scripts that the model generates are passed to an interpreter to execute.

- The image on the right here is taken from the paper and shows some example prompts and completions

- The strategy behind PAL is to have the LLM generate completions where reasoning steps are accompanied by computer code. This code is then passed to an interpreter to carry out the calculations necessary to solve the problem. You specify the output format for the model by including examples for one-shot or few-shot inference in the prompt

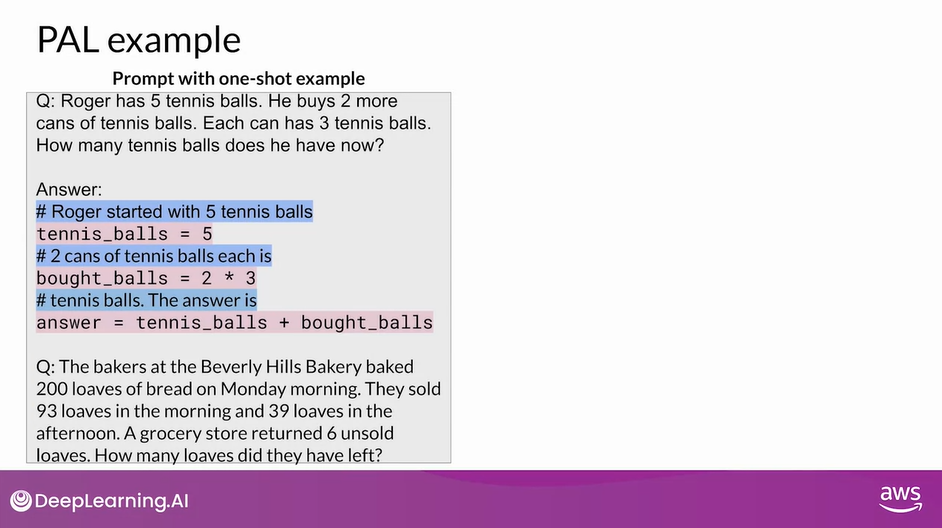

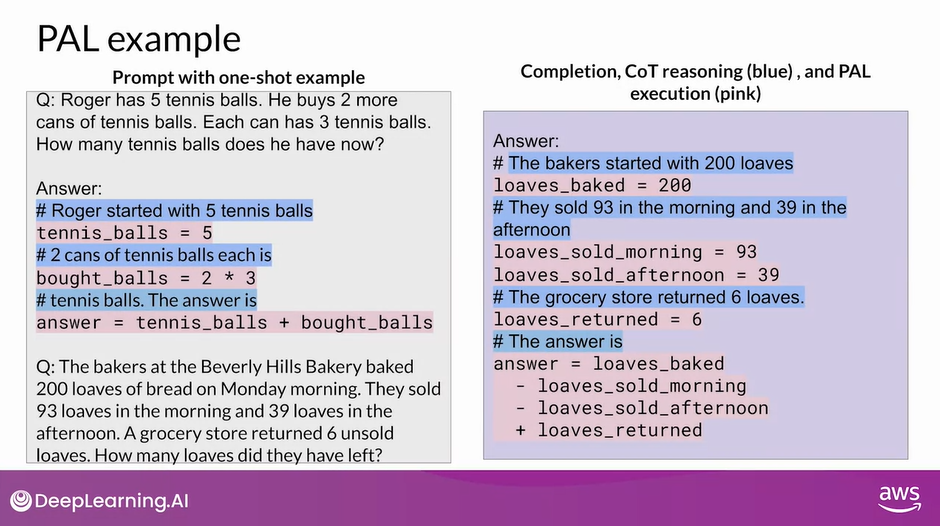

PAL Example

- Let’s take a closer look at how these example prompts are structured.

- You’ll continue to work with the story of Roger buying tennis balls as the one-shot example. The setup here should now look familiar. This is a chain of thought example.

- You can see the reasoning steps written out in words on the lines highlighted in blue.

- What differs from the prompts you saw before is the inclusion of lines of Python code shown in pink.

- These lines translate any reasoning steps that involve calculations into code. Variables are declared based on the text in each reasoning step.

- Their values are assigned either directly, as in the first line of code here, or as calculations using numbers present in the reasoning text as you see in the second Python line.

- The model can also work with variables it creates in other steps, as you see in the third line

- Note that the text of each reasoning step begins with a pound sign, so that the line can be skipped as a comment by the Python interpreter

- The prompt here ends with the new problem to be solved. In this case, the objective is to determine how many loaves of bread a bakery has left after a day of sales and after some loaves are returned from a grocery store partner

- On the right, you can see the completion generated by the LLM.

- Again, the chain of thought reasoning steps are shown in blue and the Python code is shown in pink.

- As you can see, the model creates a number of variables to track the loaves baked, the loaves sold in each part of the day, and the loaves returned by the grocery store.

- The answer is then calculated by carrying out arithmetic operations on these variables.

- The model correctly identifies whether terms should be added or subtracted to reach the correct total
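The structure described above might look like the following script. The tennis ball lines follow the example in these notes. The bakery figures (200 loaves baked, 93 and 39 sold, 6 returned) are an assumption taken from the example in the PAL paper, since the slide itself is not reproduced here, and the variable names are illustrative.

```python
# One-shot example answer in PAL style: reasoning as comments, math as code.
# Roger started with 5 tennis balls.
tennis_balls = 5
# 2 cans of 3 tennis balls each is
bought_balls = 2 * 3
# tennis balls. The answer is
answer = tennis_balls + bought_balls
roger_answer = answer  # kept under a second name only so both results can be printed

# Completion the model might generate for the bakery question (assumed figures).
# The bakers started with 200 loaves.
loaves_baked = 200
# They sold 93 in the morning and 39 in the afternoon.
loaves_sold_morning = 93
loaves_sold_afternoon = 39
# The grocery store returned 6 loaves.
loaves_returned = 6
# The answer is
answer = loaves_baked - loaves_sold_morning - loaves_sold_afternoon + loaves_returned

print(roger_answer, answer)  # prints "11 74"
```

Because every reasoning line starts with `#`, the whole completion is valid Python and can be run as-is.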

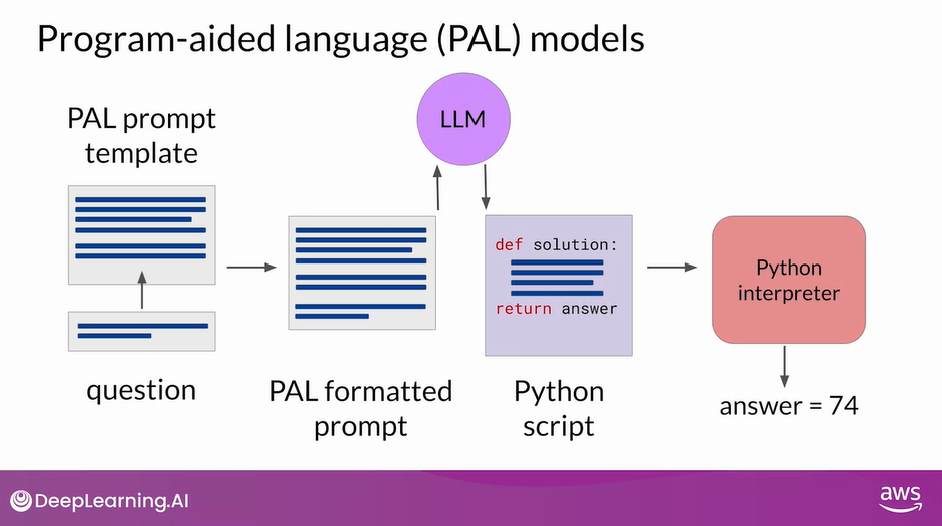

- Now that you know how to structure examples that will tell the LLM to write Python scripts based on its reasoning steps, let’s go over how the PAL framework enables an LLM to interact with an external interpreter.

- To prepare for inference with PAL, you’ll format your prompt to contain one or more examples.

- Each example should contain a question followed by reasoning steps and lines of Python code that solve the problem

- Next, you will append the new question that you’d like to answer to the prompt template.

- Your resulting PAL formatted prompt now contains both the example and the problem to solve.

- Next, you’ll pass this combined prompt to your LLM, which then generates a completion in the form of a Python script, having learned how to format the output from the example in the prompt.

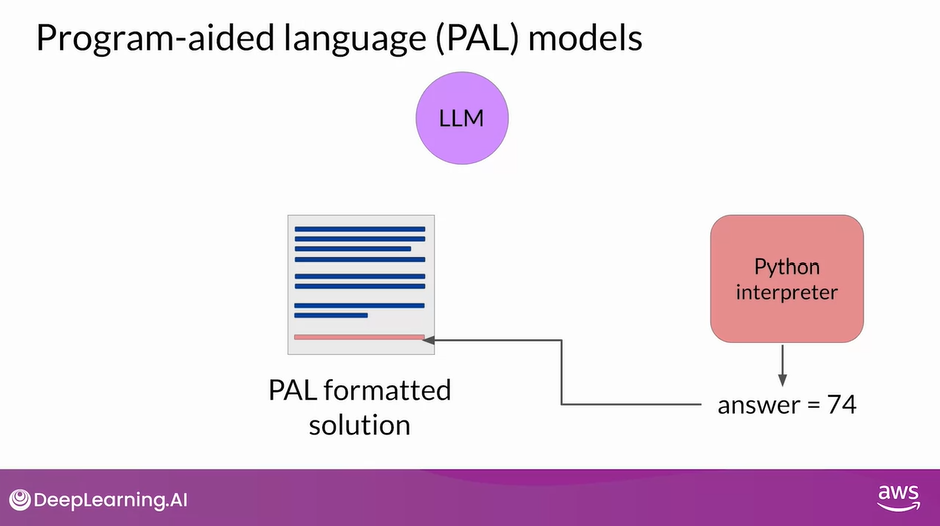

- You can now hand off the script to a Python interpreter, which you’ll use to run the code and generate an answer. For the bakery example script you saw on the previous slide, the answer is 74

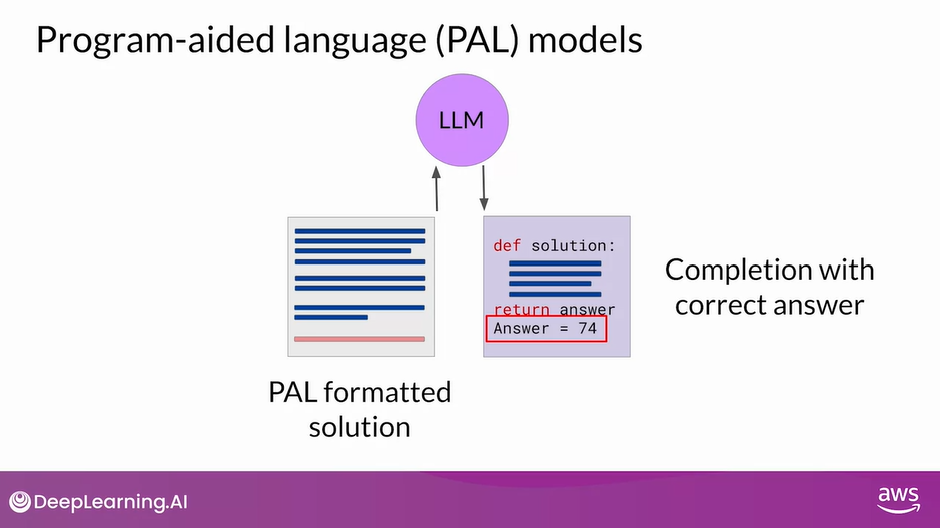

- You’ll now append the text containing the answer, which you know is accurate because the calculation was carried out in Python, to the PAL formatted prompt you started with.

- By this point you have a prompt that includes the correct answer in context

Now when you pass the updated prompt to the LLM, it generates a completion that contains the correct answer.

Given the relatively simple math in the bakery bread problem, the model would likely have gotten the answer correct with Chain-of-Thought prompting alone

But for more complex math, including arithmetic with large numbers, trigonometry or calculus, PAL is a powerful technique that allows you to ensure that any calculations done by your application are accurate and reliable
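The PAL flow described above (format the prompt, generate a script, run it, append the result, ask again) can be sketched as follows. This is a minimal sketch under stated assumptions: `fake_llm` is a hypothetical, scripted stand-in for a real model call, the bakery figures are assumed from the PAL paper example, and appending the result as an `answer = ...` line is just one possible format.

```python
from typing import Callable

PAL_EXAMPLE = """Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

# Roger started with 5 tennis balls.
tennis_balls = 5
# 2 cans of 3 tennis balls each is
bought_balls = 2 * 3
# tennis balls. The answer is
answer = tennis_balls + bought_balls
"""

# Assumed wording and figures for the bakery question.
QUESTION = (
    "The bakers baked 200 loaves of bread. They sold 93 loaves in the morning "
    "and 39 loaves in the afternoon. A grocery store returned 6 unsold loaves. "
    "How many loaves of bread did they have left?"
)


def build_pal_prompt(question: str) -> str:
    """Append the new question to the one-shot PAL example."""
    return f"{PAL_EXAMPLE}\nQ: {question}\n\n"


def run_script(script: str) -> object:
    """Run a generated script and return its `answer` variable.

    Calling exec() on model output is unsafe outside a sandbox; illustration only.
    """
    namespace: dict = {}
    exec(script, namespace)
    return namespace["answer"]


def pal_answer(question: str, llm: Callable[[str], str]) -> str:
    prompt = build_pal_prompt(question)
    script = llm(prompt)         # 1. the model writes a Python script
    result = run_script(script)  # 2. the interpreter does the math
    # 3. the computed answer goes back into the context for a final completion
    prompt_with_answer = f"{prompt}{script}\nanswer = {result}\n\nThe answer is"
    return llm(prompt_with_answer)


def fake_llm(prompt: str) -> str:
    """Hypothetical scripted model: returns a script first, then a final sentence."""
    if prompt.rstrip().endswith("The answer is"):
        value = prompt.rsplit("answer = ", 1)[1].split("\n", 1)[0]
        return f"The bakery has {value} loaves left."
    return (
        "# The bakers started with 200 loaves.\n"
        "loaves_baked = 200\n"
        "# They sold 93 in the morning and 39 in the afternoon.\n"
        "loaves_sold_morning = 93\n"
        "loaves_sold_afternoon = 39\n"
        "# The grocery store returned 6 loaves.\n"
        "loaves_returned = 6\n"
        "# The answer is\n"
        "answer = loaves_baked - loaves_sold_morning - loaves_sold_afternoon + loaves_returned\n"
    )


print(pal_answer(QUESTION, fake_llm))
```

In a real application, `pal_answer` plays the role of the orchestrator and the script would run in an isolated interpreter rather than through a bare `exec`.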

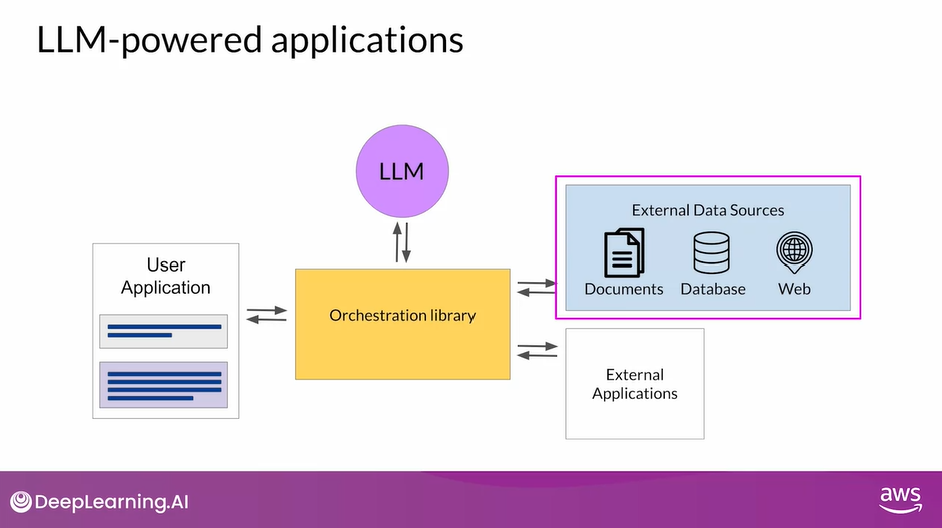

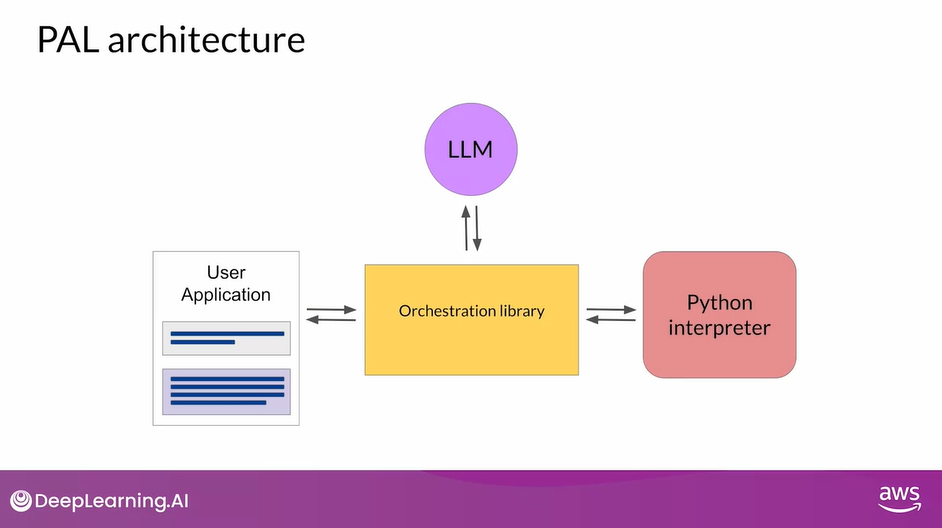

LLM-powered Applications 2

- You might be wondering how to automate this process so that you don’t have to pass information back and forth between the LLM and the interpreter by hand.

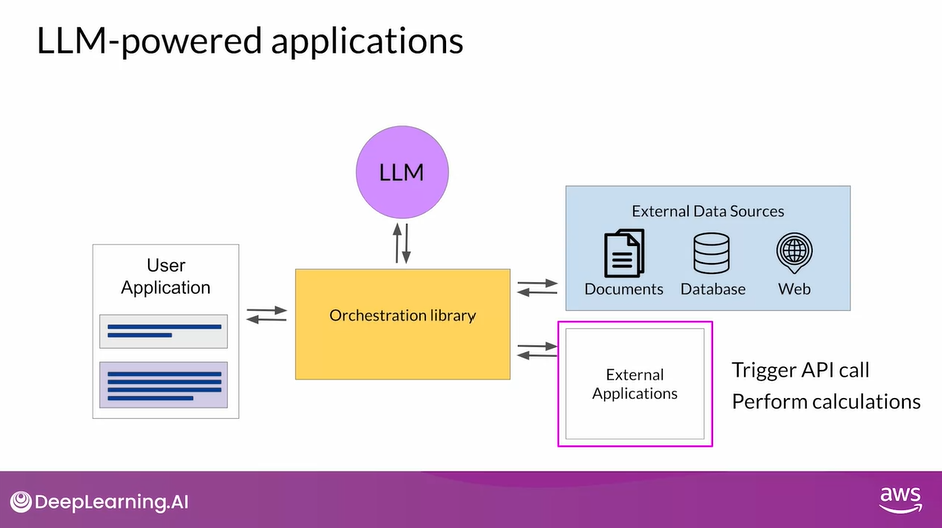

- This is where the orchestrator that you saw earlier comes in.

- The orchestrator, shown here as the yellow box, is a technical component that can manage the flow of information and the initiation of calls to external data sources or applications.

- It can also decide what actions to take based on the information contained in the output of the LLM

PAL Architecture

- Remember, the LLM is your application’s reasoning engine.

- Ultimately, it creates the plan that the orchestrator will interpret and execute.

- In PAL there’s only one action to be carried out, the execution of Python code.

- The LLM doesn’t really have to decide to run the code, it just has to write the script which the orchestrator then passes to the external interpreter to run

- However, most real-world applications are likely to be more complicated than the simple PAL architecture.

- Your use case may require interactions with several external data sources.

- As you saw in the ShopBot example, you may need to manage multiple decision points, validation actions, and calls to external applications

ReAct: Combining Reasoning and Action

- ReAct is a framework that can help LLMs plan out and execute workflows

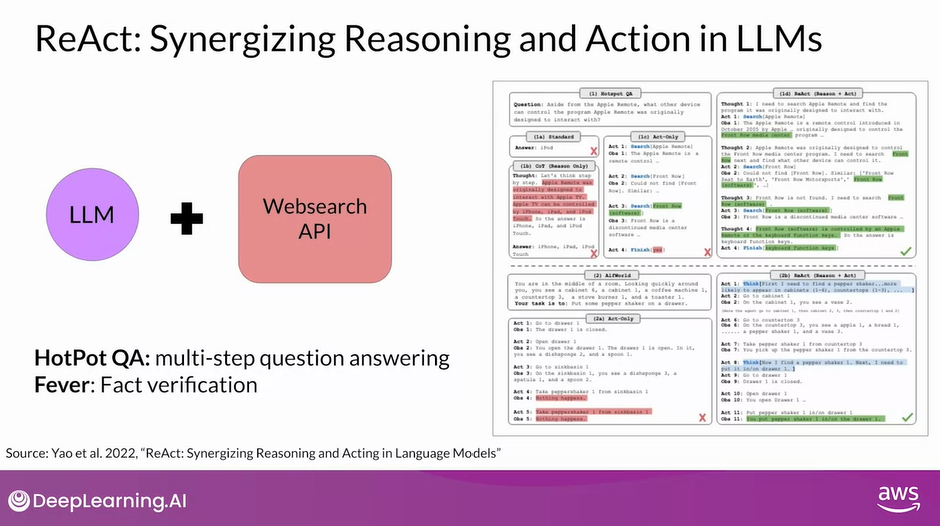

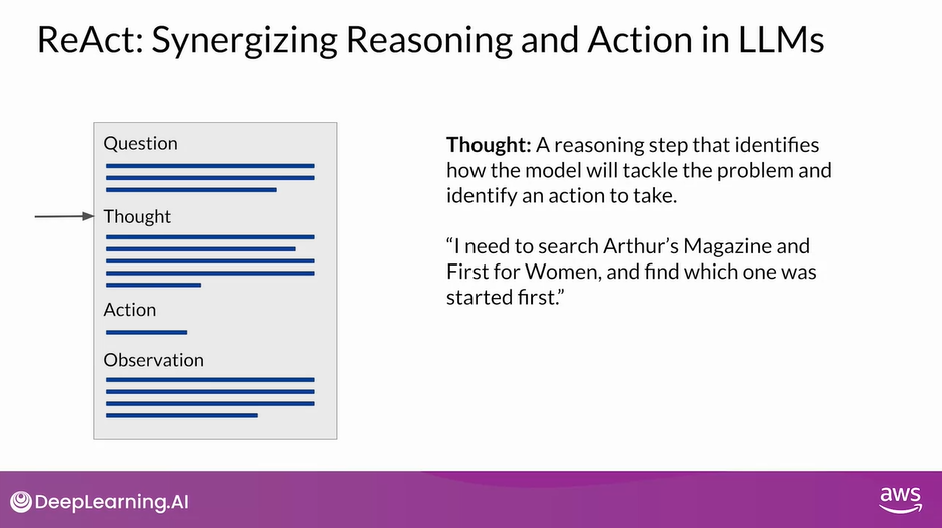

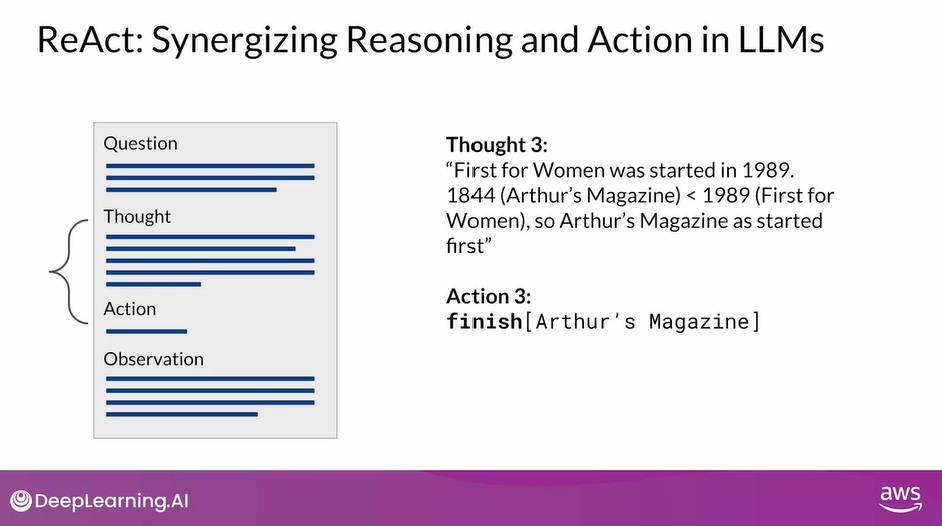

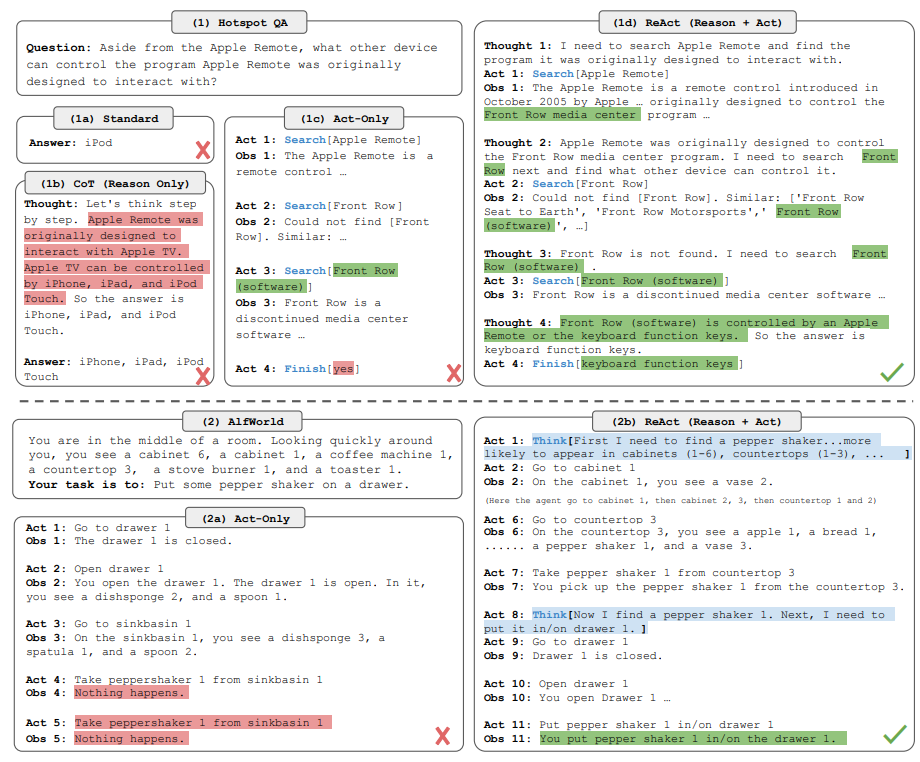

ReAct: Synergizing Reasoning and Action in LLMs

- ReAct is a prompting strategy that combines Chain-of-Thought (CoT) reasoning with action planning.

- The framework was proposed by researchers at Princeton and Google in 2022.

- The paper develops a series of complex prompting examples based on problems from

- HotpotQA, a multi-step question answering benchmark that requires reasoning over two or more Wikipedia passages, and

- FEVER, a benchmark that uses Wikipedia passages to verify facts.

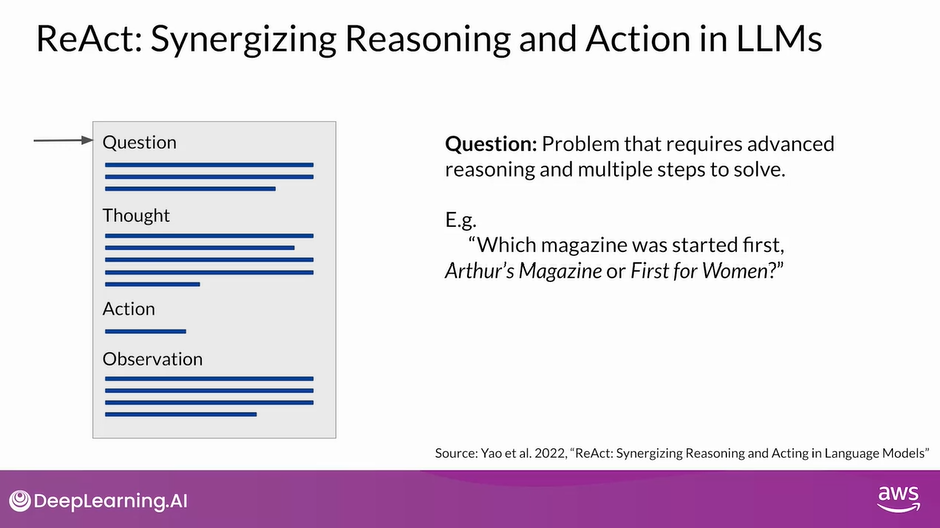

- ReAct uses structured examples to show a large language model how to reason through a problem and decide on actions to take that move it closer to a solution.

- The example prompts start with a question that will require multiple steps to answer.

- In this example, the goal is to determine which of two magazines was created first.

- The example then includes a related thought, action, observation trio of strings.

- The thought is a reasoning step that demonstrates to the model how to tackle the problem and identify an action to take.

- In the magazine publishing example, the prompt specifies that the model will search for both magazines and determine which one was published first.

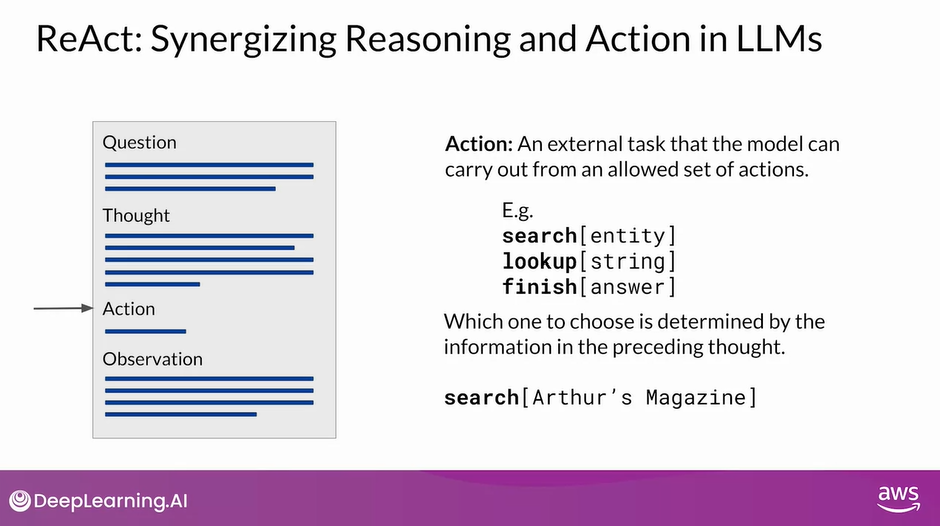

- In order for the model to interact with an external application or data source, it has to identify an action to take from a pre-determined list.

- In the case of the ReAct framework, the authors created a small Python API to interact with Wikipedia.

- The three allowed actions are search, which looks for a Wikipedia entry about a particular topic; lookup, which searches for a string on a Wikipedia page; and finish, which the model carries out when it decides it has determined the answer.

- As you saw on the previous slide, the thought in the prompt identified two searches to carry out, one for each magazine.

- In this example, the first search will be for Arthur’s Magazine.

- The action is formatted using the specific square bracket notation you see here, so that the model will format its completions in the same way.

- The Python interpreter searches for this code to trigger specific API actions.
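One way the application side could recognize these bracketed action strings is with a small parser like the one below. This is a sketch, not the paper's own code: the pattern, the lowercasing of action names, and the name `parse_action` are all assumptions made for illustration.

```python
import re
from typing import Optional, Tuple

# Only the three allowed actions match; anything else is rejected.
ACTION_PATTERN = re.compile(r"^(search|lookup|finish)\[(.*)\]$", re.IGNORECASE)


def parse_action(text: str) -> Optional[Tuple[str, str]]:
    """Return (action, argument) for a bracketed action string, or None if it is not allowed."""
    match = ACTION_PATTERN.match(text.strip())
    if match is None:
        return None
    return match.group(1).lower(), match.group(2)


print(parse_action("search[Arthur's Magazine]"))
print(parse_action("fly[to the moon]"))
```

Returning `None` for anything outside the three actions gives the orchestrator a simple way to refuse steps that the application cannot actually perform.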

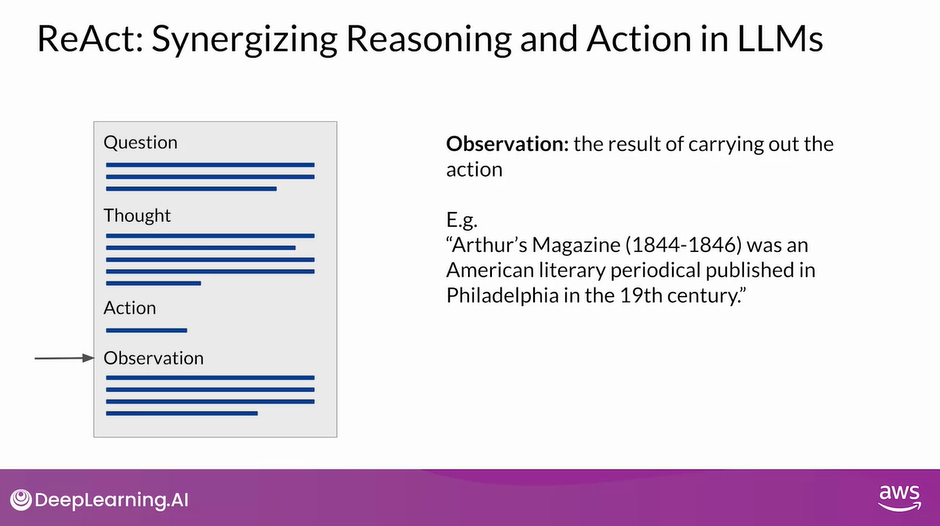

- The last part of the prompt template is the observation. This is where the new information provided by the external search is brought into the context of the prompt for the model to interpret.

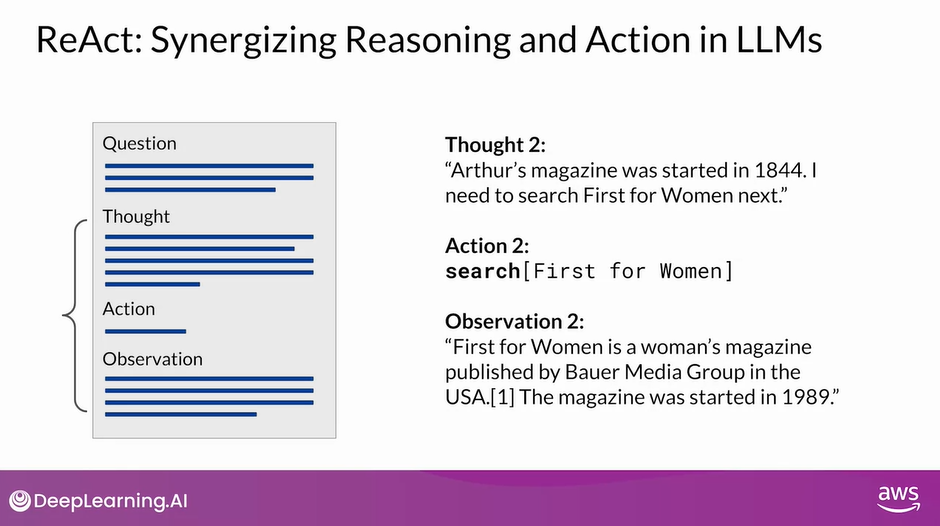

- The prompt then repeats the cycle as many times as is necessary to obtain the final answer.

- In the second thought, the prompt states the start year of Arthur’s Magazine and identifies the next step needed to solve the problem.

- The second action is to search for First for Women, and the second observation includes text that states the start date of the publication, in this case 1989.

- At this point, all the information required to answer the question is known.

- The third thought states the start year of First for Women and then gives the explicit logic used to determine which magazine was published first.

- The final action is to finish the cycle and pass the answer back to the user

Note: In the ReAct framework, the LLM can only choose from a limited number of actions that are defined by a set of instructions that is prepended to the example prompt text
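The thought, action, observation cycle can be sketched as a small loop. Everything external is stubbed out here: `FAKE_WIKI` is a hypothetical stand-in for the Wikipedia API, and `scripted_llm` replays fixed steps instead of calling a model. The 1844 start year for Arthur's Magazine is taken from the ReAct paper's example and is an assumption as far as these notes go, which only state 1989 for First for Women.

```python
import re
from typing import Callable, Dict, List

# Only the three allowed actions are recognized in the model output.
ACTION_RE = re.compile(r"Action: (search|lookup|finish)\[(.*?)\]")

# Hypothetical stand-in for the Wikipedia API.
FAKE_WIKI: Dict[str, str] = {
    "Arthur's Magazine": "Arthur's Magazine was a periodical first published in 1844.",
    "First for Women": "First for Women is a magazine started in 1989.",
}


def react_loop(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model steps and tool calls until the model emits finish[...]."""
    context = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(context)  # the model emits "Thought: ...\nAction: ..."
        context += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError("no allowed action found in model output")
        action, argument = match.groups()
        if action == "finish":
            return argument
        if action == "search":
            observation = FAKE_WIKI.get(argument, "No entry found.")
        else:
            observation = "Lookup is not implemented in this sketch."
        # The observation is added to the context for the next model step.
        context += f"Observation: {observation}\n"
    raise RuntimeError("no answer within max_steps")


SCRIPTED_STEPS: List[str] = [
    "Thought: I need to search Arthur's Magazine and First for Women, "
    "and find which was started first.\nAction: search[Arthur's Magazine]",
    "Thought: Arthur's Magazine was started in 1844. I need to search "
    "First for Women next.\nAction: search[First for Women]",
    "Thought: First for Women was started in 1989. 1844 < 1989, so "
    "Arthur's Magazine was started first.\nAction: finish[Arthur's Magazine]",
]


def scripted_llm(context: str) -> str:
    """Hypothetical model: picks the next scripted step from the number of observations so far."""
    return SCRIPTED_STEPS[context.count("Observation:")]


result = react_loop(
    "Which magazine was started first, Arthur's Magazine or First for Women?",
    scripted_llm,
)
print(result)
```

The loop, not the model, carries out each action, which is why the set of allowed actions has to be fixed in advance.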

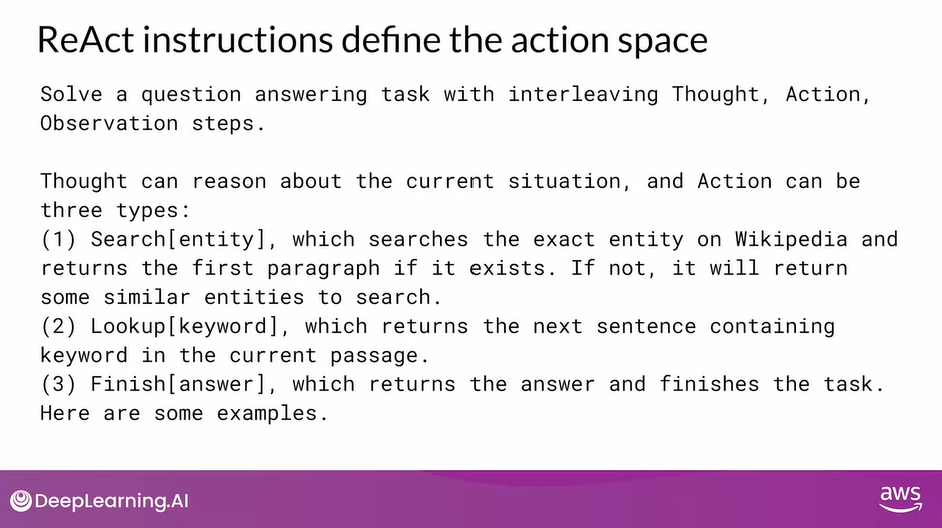

ReAct Instructions Define the Action Space

- The full text of the instructions is shown here.

- First, the task is defined, telling the model to answer a question using the prompt structure you just explored in detail.

- Next, the instructions give more detail about what is meant by thought and then specify that the action step can only be one of three types.

- The first is the search action, which looks for Wikipedia entries related to the specified entity.

- The second is the lookup action, which retrieves the next sentence that contains the specified keyword.

- The last action is finish, which returns the answer and brings the task to an end.

- It is critical to define a set of allowed actions when using LLMs to plan tasks that will power applications.

- LLMs are very creative, and they may propose taking steps that don’t actually correspond to something that the application can do.

- The final sentence in the instructions lets the LLM know that some examples will come next in the prompt text

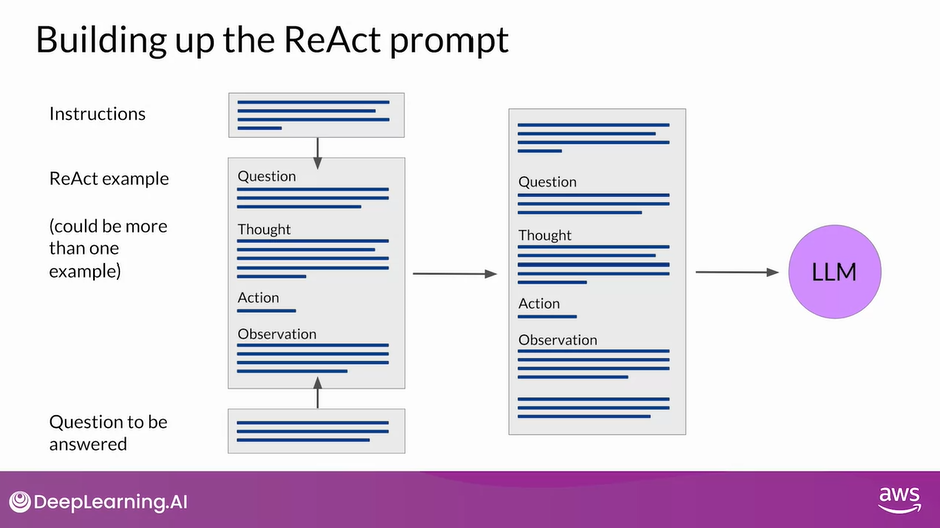

Building Up the ReAct Prompt

- Okay, so let’s put all the pieces together for inference.

- You’ll start with the ReAct example prompt.

- Note that depending on the LLM you’re working with, you may find that you need to include more than one example and carry out few-shot inference.

- Next, you’ll prepend the instructions at the beginning of the example and then insert the question you want to answer at the end.

- The full prompt now includes all of these individual pieces, and it can be passed to the LLM for inference

- The ReAct framework shows one way to use LLMs to power an application through reasoning and action planning.

- This strategy can be extended for your specific use case by creating examples that work through the decisions and actions that will take place in your application
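Putting the pieces together could look like the sketch below: instructions first, then one or more worked examples, then the new question. The instruction text is a loose paraphrase rather than the paper's exact wording, the example is abbreviated, and `build_react_prompt` and the trailing `Thought 1:` cue are assumptions made for illustration.

```python
from typing import List

# Paraphrased instructions (not the paper's exact text).
INSTRUCTIONS = (
    "Solve a question answering task with interleaving Thought, Action, "
    "Observation steps. Action can be one of three types: search[entity], "
    "lookup[keyword], or finish[answer]. Here are some examples."
)

# Abbreviated worked example; a real prompt would include every step.
EXAMPLE = (
    "Question: Which magazine was started first, Arthur's Magazine or First for Women?\n"
    "Thought 1: I need to search Arthur's Magazine and First for Women.\n"
    "Action 1: search[Arthur's Magazine]\n"
    "..."
)


def build_react_prompt(instructions: str, examples: List[str], question: str) -> str:
    """Join instructions, examples, and the new question into one prompt string."""
    parts = [instructions.strip()]
    parts.extend(example.strip() for example in examples)
    parts.append(f"Question: {question}\nThought 1:")
    return "\n\n".join(parts)


react_prompt = build_react_prompt(
    INSTRUCTIONS, [EXAMPLE], "Your new question goes here."
)
print(react_prompt)
```

Passing more than one string in `examples` turns the same template into a few-shot prompt.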

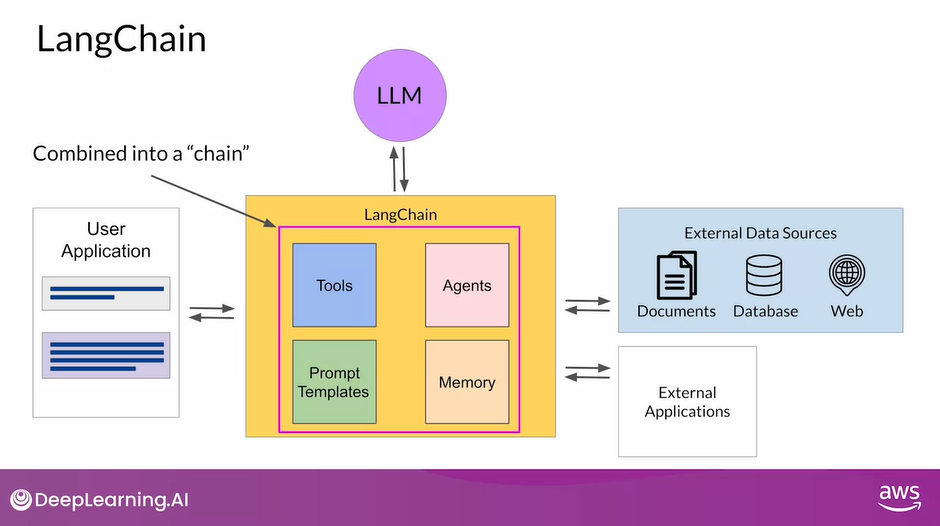

LangChain

- The LangChain framework provides you with modular pieces that contain the components necessary to work with LLMs.

- These components include prompt templates for many different use cases, which you can use to format both input examples and model completions, and memory, which you can use to store interactions with an LLM.

- The framework also includes pre-built tools that enable you to carry out a wide variety of tasks, including calls to external datasets and various APIs.

- Connecting a selection of these individual components together results in a chain.

- The creators of LangChain have developed a set of predefined chains that have been optimized for different use cases, and you can use these off the shelf to quickly get your app up and running

- Sometimes your application workflow could take multiple paths depending on the information the user provides. In this case, you can’t use a predetermined chain, but instead you’ll need the flexibility to decide which actions to take as the user moves through the workflow

- LangChain defines another construct, known as an Agent, that you can use to interpret the input from the user and determine which tool or tools to use to complete the task.

- LangChain currently includes agents for both PAL and ReAct, among others.

- Agents can be incorporated into chains to take an action or plan and execute a series of actions

The Significance of Scale: Application Building

- The ability of the model to reason well and plan actions depends on its scale.

- Larger models are generally your best choice for techniques that use advanced prompting, like PAL or ReAct.

- Smaller models may struggle to understand the tasks in highly structured prompts and may require you to perform additional fine tuning to improve their ability to reason and plan. This could slow down your development process.

- If you start with a large, capable model and collect lots of user data in deployment, you may be able to use it to train and fine-tune a smaller model that you can switch to at a later time

Reading: ReAct: Reasoning and Action

This paper introduces ReAct, a novel approach that integrates verbal reasoning and interactive decision making in large language models (LLMs). While LLMs have excelled in language understanding and decision making, the combination of reasoning and acting has been neglected. ReAct enables LLMs to generate reasoning traces and task-specific actions, leveraging the synergy between them. The approach demonstrates superior performance over baselines in various tasks, overcoming issues like hallucination and error propagation. ReAct outperforms imitation and reinforcement learning methods in interactive decision making, even with only a few in-context examples. It not only enhances performance but also improves interpretability, trustworthiness, and diagnosability by allowing humans to distinguish between internal knowledge and external information.

In summary, ReAct bridges the gap between reasoning and acting in LLMs, yielding remarkable results across language reasoning and decision making tasks. By interleaving reasoning traces and actions, ReAct overcomes limitations and outperforms baselines, not only enhancing model performance but also providing interpretability and trustworthiness, empowering users to understand the model’s decision-making process.

Image: The figure provides a comprehensive visual comparison of different prompting methods in two distinct domains. The first part of the figure (1a) presents a comparison of four prompting methods: Standard, Chain-of-thought (CoT, Reason Only), Act-only, and ReAct (Reason+Act) for solving a HotpotQA question. Each method’s approach is demonstrated through task-solving trajectories generated by the model (Act, Thought) and the environment (Obs). The second part of the figure (1b) focuses on a comparison between Act-only and ReAct prompting methods to solve an AlfWorld game. In both domains, in-context examples are omitted from the prompt, highlighting the generated trajectories as a result of the model’s actions and thoughts and the observations made in the environment. This visual representation enables a clear understanding of the differences and advantages offered by the ReAct paradigm compared to other prompting methods in diverse task-solving scenarios.

LLM Application Architectures

Infrastructure

- To begin, let’s bring everything you’ve seen so far in the lesson together and look at the building blocks for creating LLM powered applications. You’ll require several key components to create end-to-end solutions for your applications, starting with the infrastructure layer. This layer provides the compute, storage, and network to serve up your LLMs, as well as to host your application components. You can make use of your on-premises infrastructure for this or have it provided for you via on-demand and pay-as-you-go Cloud services

LLM Models

- Next, you’ll include the large language models you want to use in your application. These could include foundation models, as well as the models you have adapted to your specific task. The models are deployed on the appropriate infrastructure for your inference needs, taking into account whether you need real-time or near-real-time interaction with the model

Information Sources

- You may also have the need to retrieve information from external sources, such as those discussed in the Retrieval Augmented Generation (RAG) section

Gather Outputs & Feedback

- Your application will return the completions from your large language model to the user or consuming application. Depending on your use case, you may need to implement a mechanism to capture and store the outputs. For example, you could build the capacity to store user completions during a session to augment the fixed context window size of your LLM. You can also gather feedback from users that may be useful for additional fine-tuning, alignment, or evaluation as your application matures
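As one possible illustration of storing completions during a session, the sketch below keeps recent turns and drops the oldest ones once a size budget is exceeded. The class name `SessionMemory` and the use of a word count as a crude proxy for tokens are assumptions. A real application would measure size with the model's own tokenizer.

```python
from collections import deque
from typing import Deque, Tuple


class SessionMemory:
    """Keep the most recent (user, completion) turns within a word budget."""

    def __init__(self, max_words: int) -> None:
        self.max_words = max_words
        self.turns: Deque[Tuple[str, str]] = deque()

    def _word_count(self) -> int:
        return sum(len(u.split()) + len(c.split()) for u, c in self.turns)

    def add(self, user_text: str, completion: str) -> None:
        """Store a turn, then evict the oldest turns while over budget."""
        self.turns.append((user_text, completion))
        while len(self.turns) > 1 and self._word_count() > self.max_words:
            self.turns.popleft()

    def as_context(self) -> str:
        """Render the stored turns as text to prepend to the next prompt."""
        return "\n".join(f"User: {u}\nAssistant: {c}" for u, c in self.turns)


memory = SessionMemory(max_words=8)
memory.add("one two three", "four five six")
memory.add("seven eight", "nine ten")  # pushes the total over 8 words
print(memory.as_context())
```

The most recent turn is always kept, even if it alone exceeds the budget, so the next prompt never loses the latest exchange.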

LLM Tools & Frameworks

- Next, you may need to use additional tools and frameworks for large language models that help you easily implement some of the techniques discussed in this course. As an example, you can use LangChain’s built-in libraries to implement techniques like PAL, ReAct or Chain-of-Thought (CoT) prompting. You may also utilize model hubs which allow you to centrally manage and share models for use in applications

Application Interfaces

In the final layer, you typically have some type of user interface that the application will be consumed through, such as a website or a REST API. This layer is where you’ll also include the security components required for interacting with your application

At a high level, this architecture stack represents the various components to consider as part of your generative AI applications. Your users, whether they are human end-users or other systems that access your application through its APIs, will interact with this entire stack

As you can see, the model is typically only one part of the story in building end-to-end generative AI applications

Summary

- This week, you saw how to align your models with human preferences, such as helpfulness, harmlessness, and honesty (HHH), by fine-tuning using a technique called Reinforcement Learning from Human Feedback (RLHF).

- Given the popularity of RLHF, there are many existing RL reward models and human alignment datasets available, enabling you to quickly start aligning your models.

- In practice, RLHF is a very effective mechanism that you can use to improve the alignment of your models, reduce the toxicity of their responses, and let you use your models more safely in production.

- You also saw important techniques to optimize your model for inference by reducing the size of the model through distillation, quantization, or pruning.

- This minimizes the amount of hardware resources needed to serve your LLMs in production.

- Lastly, you explored ways that you can help your model perform better in deployment through structured prompts and connections to external data sources and applications.

- LLMs can play an amazing role as the reasoning engine in an application, exploiting their intelligence to power exciting, useful applications.

- Frameworks like LangChain are making it possible to quickly build, deploy, and test LLM-powered applications, and it’s a very exciting time for developers.

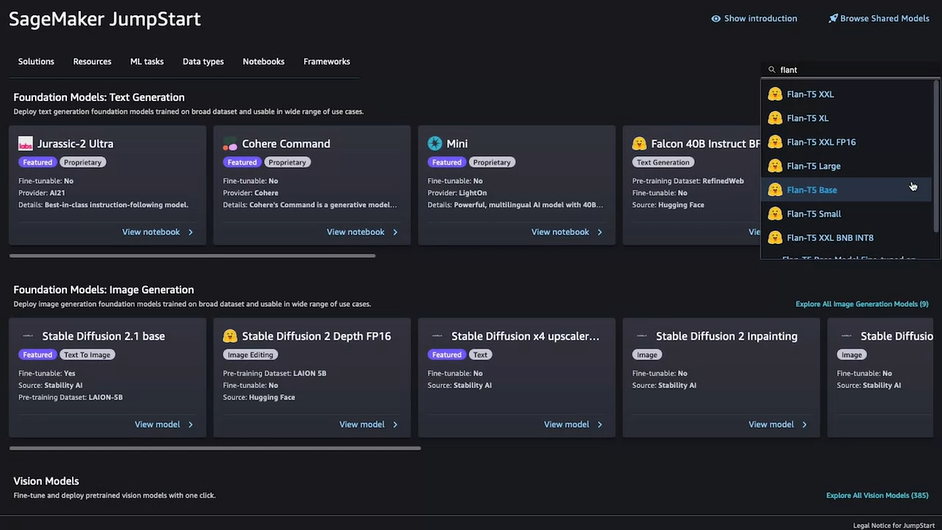

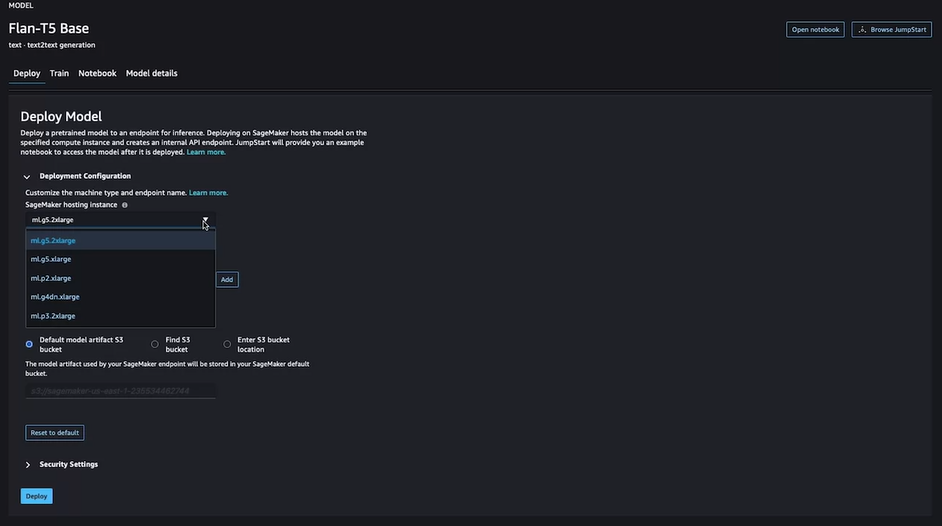

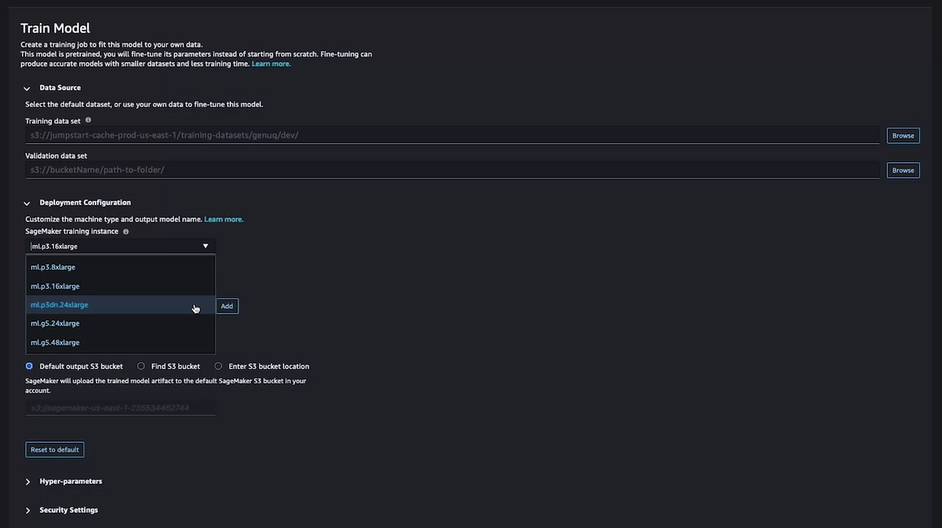

Optional: AWS Sagemaker Jumpstart